Edicho: Consistent Image Editing in the Wild

Qingyan Bai, Hao Ouyang, Yinghao Xu, Qiuyu Wang, Ceyuan Yang, Ka Leong Cheng, Yujun Shen, Qifeng Chen

2024-12-31

Summary

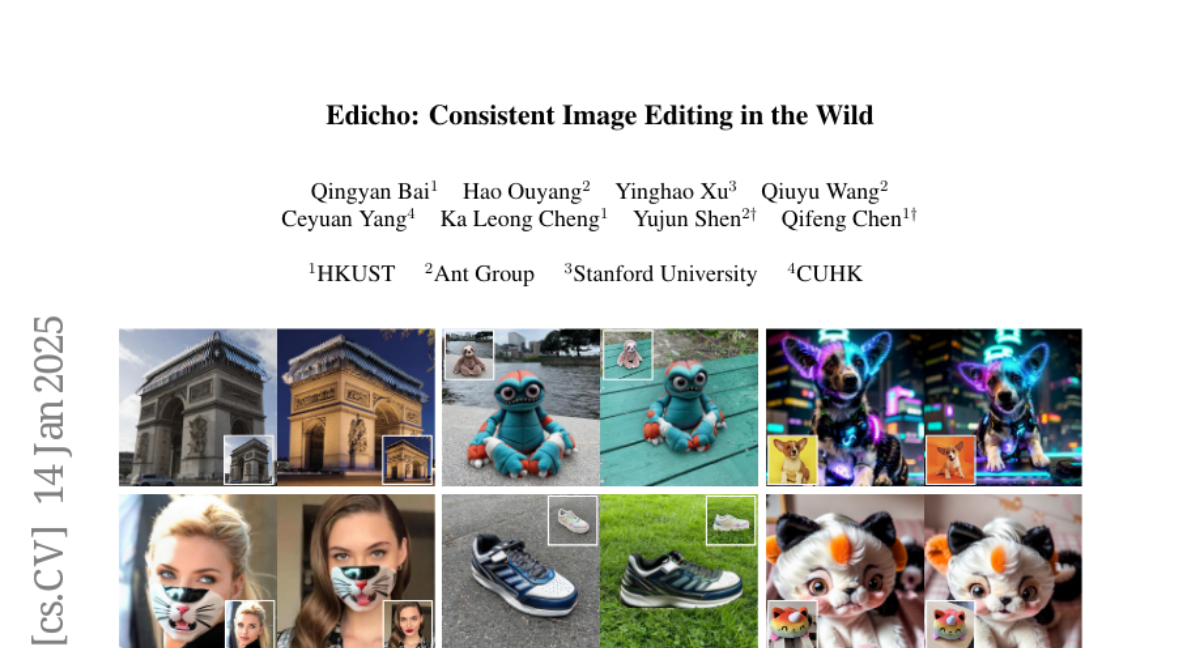

This paper presents Edicho, a training-free method for editing multiple real-world ("in-the-wild") images consistently despite differences in lighting, object pose, and shooting environment.

What's the problem?

Applying the same edit to several real-world photos is difficult because the photos differ in lighting, viewing angle, object pose, and background. These differences make it hard to keep the edited results consistent with one another while still looking natural.

What's the solution?

To solve this problem, the authors developed Edicho, a training-free approach built on diffusion models. It uses explicit image correspondence, that is, an estimate of which regions in one image match which regions in another, to guide the editing process. Its two key components are an attention manipulation module and a refined classifier-free guidance (CFG) denoising strategy, both of which use the pre-estimated correspondence so that edits are applied consistently across images. Because Edicho operates at inference time, it plugs into existing diffusion-based editing methods such as ControlNet and BrushNet.
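To make the idea of correspondence-guided attention concrete, here is a minimal pure-Python sketch. It is not the authors' implementation: the function names, the `blend` parameter, and the choice to mix each target query with its matched source query are all assumptions made for illustration. The only idea taken from the summary is that a pre-estimated correspondence tells each target token which source token it matches.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Plain scaled dot-product attention on lists of float vectors."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs


def warp_by_correspondence(source_tokens, correspondence):
    """Row i of the result is the source token matched to target token i."""
    return [source_tokens[j] for j in correspondence]


def corr_guided_attention(target_q, source_q, keys, values,
                          correspondence, blend=1.0):
    """Hypothetical sketch: mix each target query with its matched source
    query before attending. `blend` (an assumed knob) sets how much of the
    source query is borrowed; 1.0 replaces the target query entirely."""
    matched_q = warp_by_correspondence(source_q, correspondence)
    mixed_q = [
        [(1.0 - blend) * t + blend * s for t, s in zip(tq, sq)]
        for tq, sq in zip(target_q, matched_q)
    ]
    return attention(mixed_q, keys, values)


# Toy usage: 3 tokens per image, 2-dimensional features.
source_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
target_q = [[0.5, 0.5], [0.2, 0.1], [0.9, 0.3]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0], [2.0]]
correspondence = [2, 0, 1]  # target token i matches source token correspondence[i]

guided = corr_guided_attention(target_q, source_q, keys, values, correspondence)
```

With `blend=1.0`, each target token receives the same attention output that its matched source token would, which is the kind of cross-image consistency the correspondence is meant to encourage. Lower values of `blend` keep more of the target image's own query.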

Why does it matter?

This research is important because it provides a practical solution for consistent image editing in various conditions, which is valuable for photographers, graphic designers, and content creators. By improving the ability to edit images reliably, Edicho can enhance visual storytelling and help maintain quality across multiple images in projects.

Abstract

As a verified need, consistent editing across in-the-wild images remains a technical challenge arising from various unmanageable factors, like object poses, lighting conditions, and photography environments. Edicho steps in with a training-free solution based on diffusion models, featuring a fundamental design principle of using explicit image correspondence to direct editing. Specifically, the key components include an attention manipulation module and a carefully refined classifier-free guidance (CFG) denoising strategy, both of which take into account the pre-estimated correspondence. Such an inference-time algorithm enjoys a plug-and-play nature and is compatible with most diffusion-based editing methods, such as ControlNet and BrushNet. Extensive results demonstrate the efficacy of Edicho in consistent cross-image editing under diverse settings. We will release the code to facilitate future studies.
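For readers unfamiliar with classifier-free guidance, the sketch below shows the standard CFG combination step that the abstract's refined strategy builds on. It illustrates only the widely used baseline formula, guided = unconditional + scale × (conditional − unconditional). It does not reproduce Edicho's correspondence-aware refinement, and the function and argument names are chosen for illustration.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Standard CFG: extrapolate from the unconditional noise prediction
    toward the conditional one. Inputs are flat lists of floats standing
    in for the denoiser's two noise predictions at one timestep."""
    return [
        u + guidance_scale * (c - u)
        for u, c in zip(eps_uncond, eps_cond)
    ]


# Toy usage with two-element "noise predictions".
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 3.0]
guided = classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=2.0)
```

A scale of 0 returns the unconditional prediction, a scale of 1 returns the conditional prediction, and larger values push the result further in the direction of the condition.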