A Diffusion Approach to Radiance Field Relighting using Multi-Illumination Synthesis

Yohan Poirier-Ginter, Alban Gauthier, Julien Philip, Jean-François Lalonde, George Drettakis

2024-09-16

Summary

This paper presents a method for relighting 3D scenes captured under a single lighting condition. It uses priors from a 2D image diffusion model to synthesize the same views under other light directions, then builds a relightable radiance field from the result.

What's the problem?

Relighting 3D scenes is challenging because multi-view data is usually captured under a single lighting condition, which leaves the problem severely underconstrained, especially for full scenes containing multiple objects. Existing methods often require complex calculations and can struggle with accuracy.

What's the solution?

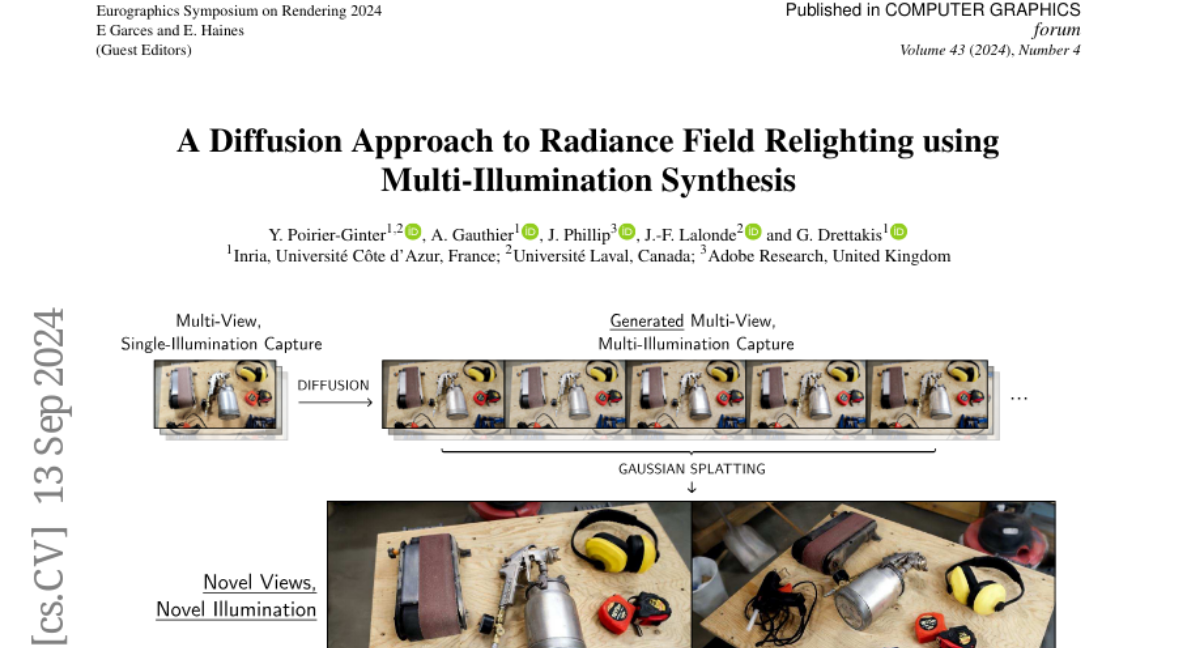

The authors first fine-tune a 2D image diffusion model on a multi-illumination dataset, conditioned on light direction. They use this model to turn a single-illumination capture into a realistic, though possibly inconsistent, multi-illumination dataset, and then use that data to create a relightable radiance field represented by 3D Gaussian splats. A multi-layer perceptron parameterized on light direction gives direct control over lighting direction, and a per-image auxiliary feature vector is optimized to improve the consistency of the images across different views.
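The data-augmentation step described above can be pictured as a loop over every (view, light direction) pair. The following is a minimal sketch under stated assumptions, not the authors' code: `sample_light_directions`, `augment_capture`, and `toy_relight` are hypothetical names, images are toy grayscale grids, and `toy_relight` is only a stand-in for the fine-tuned diffusion model (it simply scales brightness).

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[List[float]]            # toy grayscale image, values in [0, 1]
Direction = Tuple[float, float, float]


def sample_light_directions(n_azimuth: int, elevation_deg: float) -> List[Direction]:
    """Return unit vectors evenly spaced in azimuth at one fixed elevation."""
    elev = math.radians(elevation_deg)
    dirs: List[Direction] = []
    for k in range(n_azimuth):
        azim = 2.0 * math.pi * k / n_azimuth
        dirs.append((math.cos(elev) * math.cos(azim),
                     math.cos(elev) * math.sin(azim),
                     math.sin(elev)))
    return dirs


def augment_capture(
    views: Sequence[Image],
    light_dirs: Sequence[Direction],
    relight_fn: Callable[[Image, Direction], Image],
) -> Dict[Tuple[int, int], Image]:
    """Relight every captured view under every requested light direction.

    Keys are (view_index, light_index). Each view is relit independently,
    so nothing here forces the outputs to agree across views.
    """
    dataset: Dict[Tuple[int, int], Image] = {}
    for vi, image in enumerate(views):
        for li, direction in enumerate(light_dirs):
            dataset[(vi, li)] = relight_fn(image, direction)
    return dataset


def toy_relight(image: Image, direction: Direction) -> Image:
    """ASSUMPTION: placeholder for the diffusion model; scales by light height (z)."""
    gain = max(0.0, direction[2])
    return [[min(1.0, pixel * gain) for pixel in row] for row in image]
```

Because each view is relit on its own, the resulting dataset can be inconsistent across views, which is the issue the per-image auxiliary feature vector is meant to address.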

Why it matters?

This research is significant because it makes it possible to relight complete 3D scenes from ordinary single-illumination captures, with direct control over the light direction. Relighting of this kind has applications in areas like video games, film production, and virtual reality, where realistic lighting is crucial for creating immersive experiences.

Abstract

Relighting radiance fields is severely underconstrained for multi-view data, which is most often captured under a single illumination condition; it is especially hard for full scenes containing multiple objects. We introduce a method to create relightable radiance fields using such single-illumination data by exploiting priors extracted from 2D image diffusion models. We first fine-tune a 2D diffusion model on a multi-illumination dataset conditioned by light direction, allowing us to augment a single-illumination capture into a realistic -- but possibly inconsistent -- multi-illumination dataset from directly defined light directions. We use this augmented data to create a relightable radiance field represented by 3D Gaussian splats. To allow direct control of light direction for low-frequency lighting, we represent appearance with a multi-layer perceptron parameterized on light direction. To enforce multi-view consistency and overcome inaccuracies we optimize a per-image auxiliary feature vector. We show results on synthetic and real multi-view data under single illumination, demonstrating that our method successfully exploits 2D diffusion model priors to allow realistic 3D relighting for complete scenes. Project site: https://repo-sam.inria.fr/fungraph/generative-radiance-field-relighting/
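
To make the appearance model in the abstract concrete, here is a small sketch of a multi-layer perceptron that maps a per-splat feature, a light direction, and a per-image auxiliary vector to an RGB color. Everything specific is an assumption for illustration: the class name `TinyAppearanceMLP`, the single hidden layer, the layer sizes, and the random untrained weights are not taken from the paper.

```python
import math
import random
from typing import List, Sequence


class TinyAppearanceMLP:
    """One hidden layer: [splat feature | light direction | per-image latent] -> RGB.

    ASSUMPTION: architecture and sizes are illustrative only; weights are
    random and untrained.
    """

    def __init__(self, feat_dim: int, latent_dim: int, hidden: int = 16, seed: int = 0):
        rng = random.Random(seed)
        self.in_dim = feat_dim + 3 + latent_dim
        self.w1 = [[rng.gauss(0.0, 0.3) for _ in range(self.in_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 0.3) for _ in range(hidden)] for _ in range(3)]
        self.b2 = [0.0] * 3

    def __call__(self, feature: Sequence[float], light_dir: Sequence[float],
                 latent: Sequence[float]) -> List[float]:
        # Normalize so only the direction of the light matters, not its length.
        norm = math.sqrt(sum(c * c for c in light_dir))
        if norm == 0.0:
            raise ValueError("light_dir must be non-zero")
        x = list(feature) + [c / norm for c in light_dir] + list(latent)
        if len(x) != self.in_dim:
            raise ValueError("unexpected input size")
        # Hidden layer with ReLU activation.
        h = [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer with sigmoid, so each color channel lies in (0, 1).
        out = [sum(w * v for w, v in zip(row, h)) + b
               for row, b in zip(self.w2, self.b2)]
        return [1.0 / (1.0 + math.exp(-o)) for o in out]


# One auxiliary vector per training image; in an optimization these would be
# learned jointly with the network to absorb inconsistencies between images.
latent_dim = 4
per_image_latents = [[0.0] * latent_dim for _ in range(3)]

mlp = TinyAppearanceMLP(feat_dim=8, latent_dim=latent_dim)
rgb = mlp([0.1] * 8, (0.0, 0.0, 1.0), per_image_latents[0])
```

Feeding the light direction as an input is what lets the same splat change color as the light moves, while the per-image vector gives the optimization a place to explain differences between generated images that the light direction alone cannot.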