A Graph Perspective to Probe Structural Patterns of Knowledge in Large Language Models

Utkarsh Sahu, Zhisheng Qi, Yongjia Lei, Ryan A. Rossi, Franck Dernoncourt, Nesreen K. Ahmed, Mahantesh M Halappanavar, Yao Ma, Yu Wang

2025-05-30

Summary

This paper examines how large language models organize and connect information. The researchers use graphs (networks of points and lines) to better understand how these models store and relate knowledge.

What's the problem?

The problem is that while large language models know a lot, it's hard to see how their knowledge is structured and how different pieces of information are linked together inside the model, which makes it tricky to figure out what the model really understands.

What's the solution?

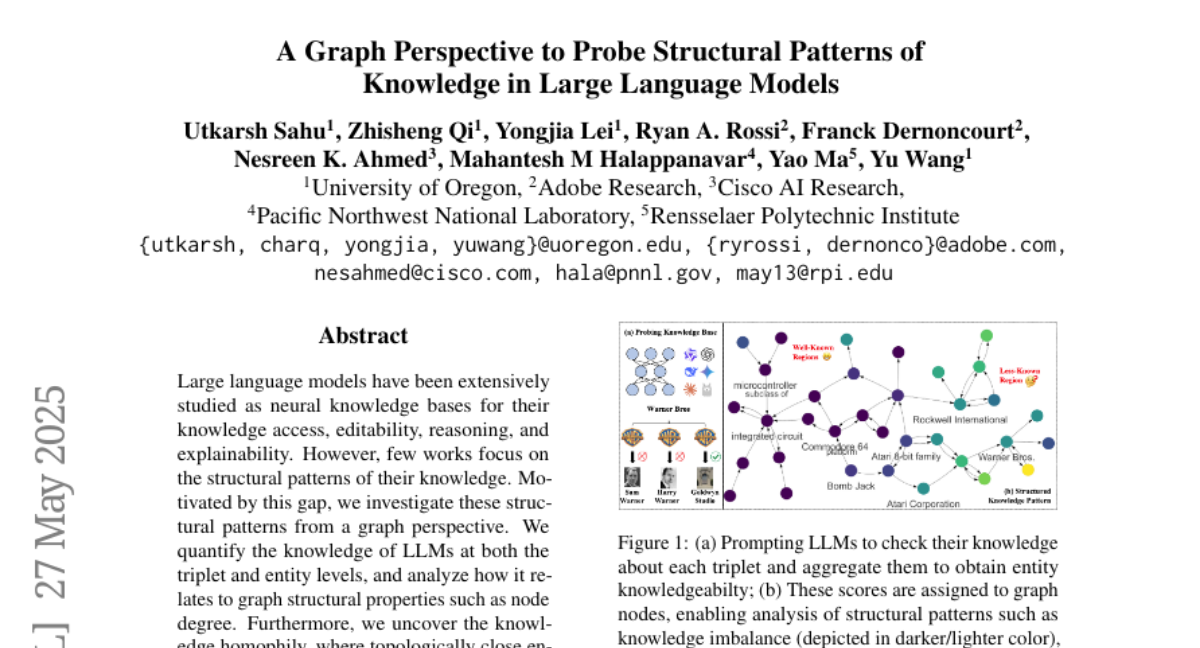

The researchers used graph theory to map out the connections between different facts and ideas in the language models, discovering that similar pieces of knowledge tend to group together, a pattern called knowledge homophily. They also built new graph-based tools to help estimate how much the model knows about certain topics.
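To make the idea of knowledge homophily concrete, here is a minimal Python sketch. It is not the researchers' actual method. It assumes each entity has a made-up "knowledge score" between 0 and 1 and that the entities are linked in a small toy graph. The node names, scores, edges, and function names (`neighbor_gap`, `all_pairs_gap`, `estimate_from_neighbors`) are all hypothetical. If homophily holds, connected entities should have closer scores than arbitrary pairs, and a missing score can be roughly guessed from a node's neighbors.

```python
from itertools import combinations
from statistics import mean

def neighbor_gap(edges, score):
    """Mean absolute score difference across connected node pairs."""
    return mean(abs(score[u] - score[v]) for u, v in edges)

def all_pairs_gap(score):
    """Mean absolute score difference across every pair of nodes (baseline)."""
    return mean(abs(score[u] - score[v]) for u, v in combinations(score, 2))

def estimate_from_neighbors(node, edges, score):
    """Guess a node's score as the mean score of its scored neighbors."""
    neighbors = [v if u == node else u for u, v in edges if node in (u, v)]
    known = [score[n] for n in neighbors if n in score]
    return mean(known) if known else None

# Hypothetical toy graph: A, B, C form a well-known cluster; D, E are obscure.
edges = [("A", "B"), ("B", "C"), ("A", "C"), ("D", "E"), ("C", "D")]
score = {"A": 0.9, "B": 0.8, "C": 0.85, "D": 0.2, "E": 0.1}

print("gap between neighbors:", neighbor_gap(edges, score))
print("gap between all pairs:", all_pairs_gap(score))

# Hide B's score and estimate it from its neighbors A and C.
partial = {k: v for k, v in score.items() if k != "B"}
print("estimate for B:", estimate_from_neighbors("B", edges, partial))
```

In this toy data the gap between neighbors is smaller than the gap between all pairs, which is the signature of homophily. The neighbor-average estimator is only the simplest possible stand-in for the graph-based tools mentioned above.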

Why it matters?

This is important because understanding how AI organizes knowledge can help us improve these models, make them more reliable, and ensure they give better answers, which benefits anyone who uses AI for learning, research, or problem-solving.

Abstract

The study explores the structural patterns of knowledge in large language models from a graph perspective, uncovering knowledge homophily and developing graph machine learning models to estimate entity knowledge.