Amuro & Char: Analyzing the Relationship between Pre-Training and Fine-Tuning of Large Language Models

Kaiser Sun, Mark Dredze

2024-08-14

Summary

This paper analyzes how pre-training and fine-tuning processes affect large language models (LLMs) and explores their relationship to improve model performance.

What's the problem?

Large language models often perform well on tasks they were trained on but struggle with new tasks or longer inputs. This inconsistency can be due to the way these models are trained, particularly the lack of long-output examples during their initial training phase.

What's the solution?

The authors conducted experiments using multiple pre-trained model checkpoints to see how additional fine-tuning affects performance. They found that continuing to train the model with more data helps it learn better and adapt to new tasks. They also discovered that while fine-tuning improves performance, it can cause the model to forget some of its earlier knowledge. Additionally, they noted that models become very sensitive to the way questions are asked after fine-tuning, but this sensitivity can be reduced with more pre-training.

Why it matters?

This research is important because it helps improve how large language models are trained, making them more effective for various applications. By understanding the relationship between pre-training and fine-tuning, researchers can develop better AI systems that can handle a wider range of tasks and provide more accurate responses.

Abstract

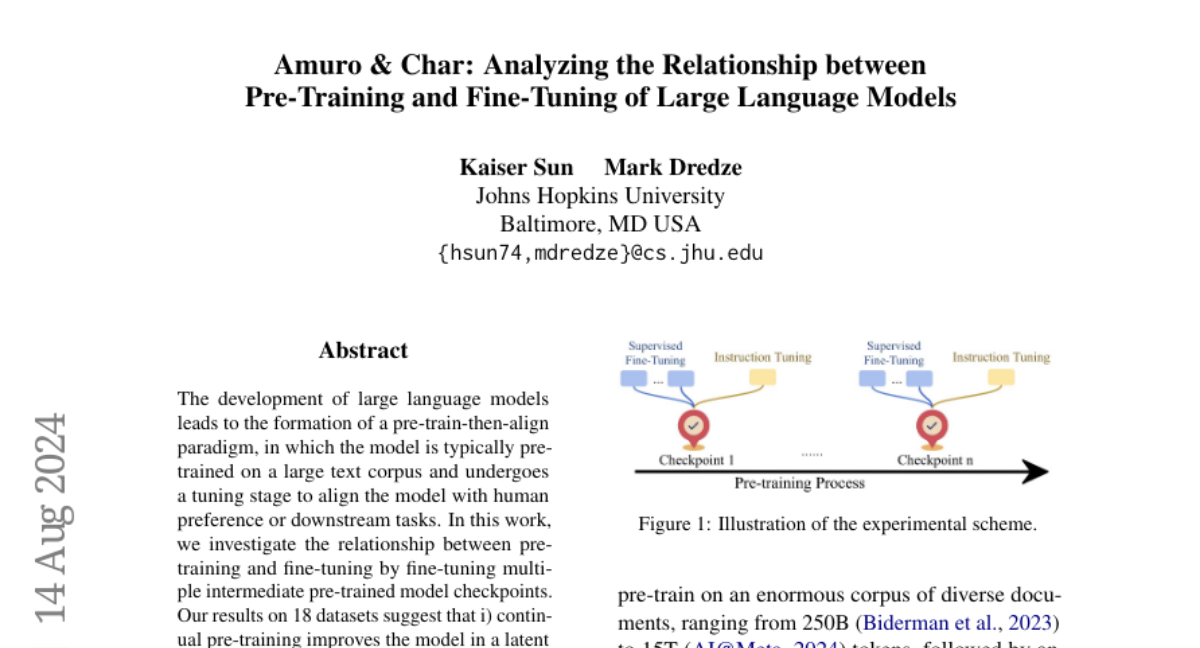

The development of large language models leads to the formation of a pre-train-then-align paradigm, in which the model is typically pre-trained on a large text corpus and undergoes a tuning stage to align the model with human preference or downstream tasks. In this work, we investigate the relationship between pre-training and fine-tuning by fine-tuning multiple intermediate pre-trained model checkpoints. Our results on 18 datasets suggest that i) continual pre-training improves the model in a latent way that unveils after fine-tuning; ii) with extra fine-tuning, the datasets that the model does not demonstrate capability gain much more than those that the model performs well during the pre-training stage; iii) although model benefits significantly through supervised fine-tuning, it may forget previously known domain knowledge and the tasks that are not seen during fine-tuning; iv) the model resembles high sensitivity to evaluation prompts after supervised fine-tuning, but this sensitivity can be alleviated by more pre-training.