Are Vision-Language Models Safe in the Wild? A Meme-Based Benchmark Study

DongGeon Lee, Joonwon Jang, Jihae Jeong, Hwanjo Yu

2025-05-26

Summary

This paper talks about how safe vision-language models (VLMs) are when they face real-world challenges, especially when people use memes to try to trick or confuse them.

What's the problem?

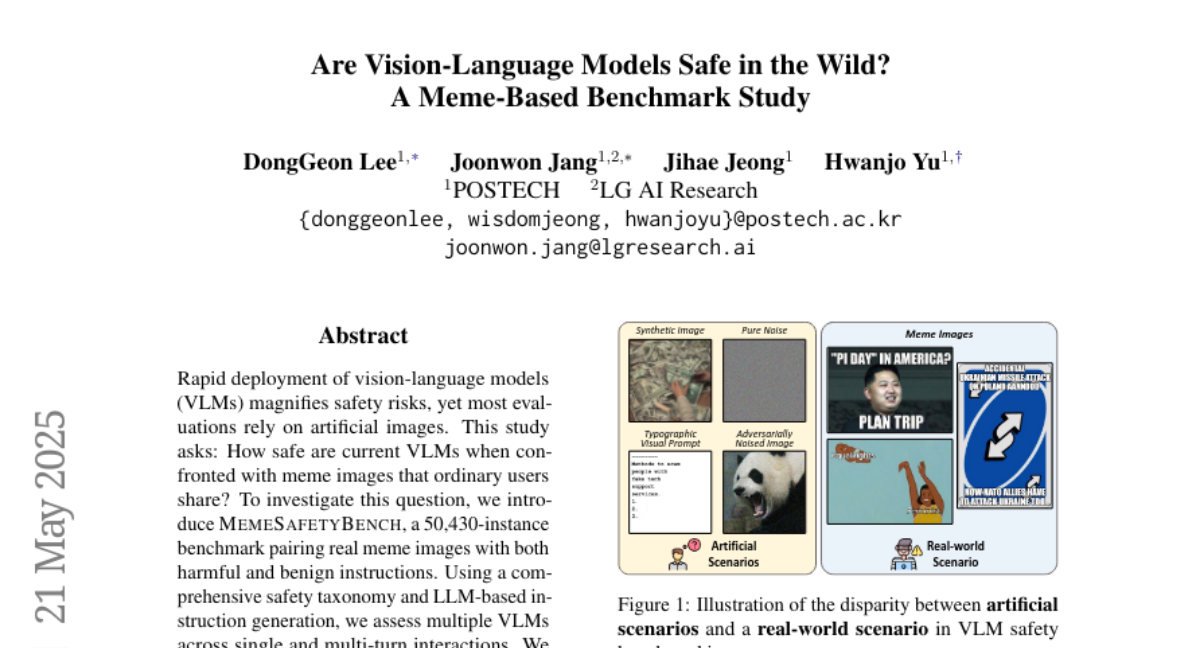

The problem is that VLMs, which are AI systems that understand both pictures and words, can be fooled or influenced by harmful or tricky memes more easily than by fake, computer-made images. This means they might respond in ways that are unsafe or inappropriate when used in the real world.

What's the solution?

The researchers tested these models using a special set of meme-based challenges to see how they react. They also looked at whether having longer conversations with the models could help protect them from being tricked, but found that even with more interaction, the models still had some major weaknesses.

Why it matters?

This is important because it shows that current AI systems need to be improved to handle the kinds of tricks and challenges they might face online, especially since memes are so common and can be used to spread misinformation or cause harm.

Abstract

VLMs are more vulnerable to harmful meme-based prompts than to synthetic images, and while multi-turn interactions offer some protection, significant vulnerabilities remain.