AV-Link: Temporally-Aligned Diffusion Features for Cross-Modal Audio-Video Generation

Moayed Haji-Ali, Willi Menapace, Aliaksandr Siarohin, Ivan Skorokhodov, Alper Canberk, Kwot Sin Lee, Vicente Ordonez, Sergey Tulyakov

2024-12-20

Summary

This paper introduces AV-Link, a new system that allows for generating audio from video and vice versa. It uses advanced techniques to ensure that the sound and visuals are perfectly synchronized, creating a more immersive experience.

What's the problem?

Generating audio that matches video content (Video-to-Audio) or creating video that fits with audio (Audio-to-Video) has been challenging. Existing methods often struggle to align the two modalities properly, leading to unsynchronized or unrealistic results.

What's the solution?

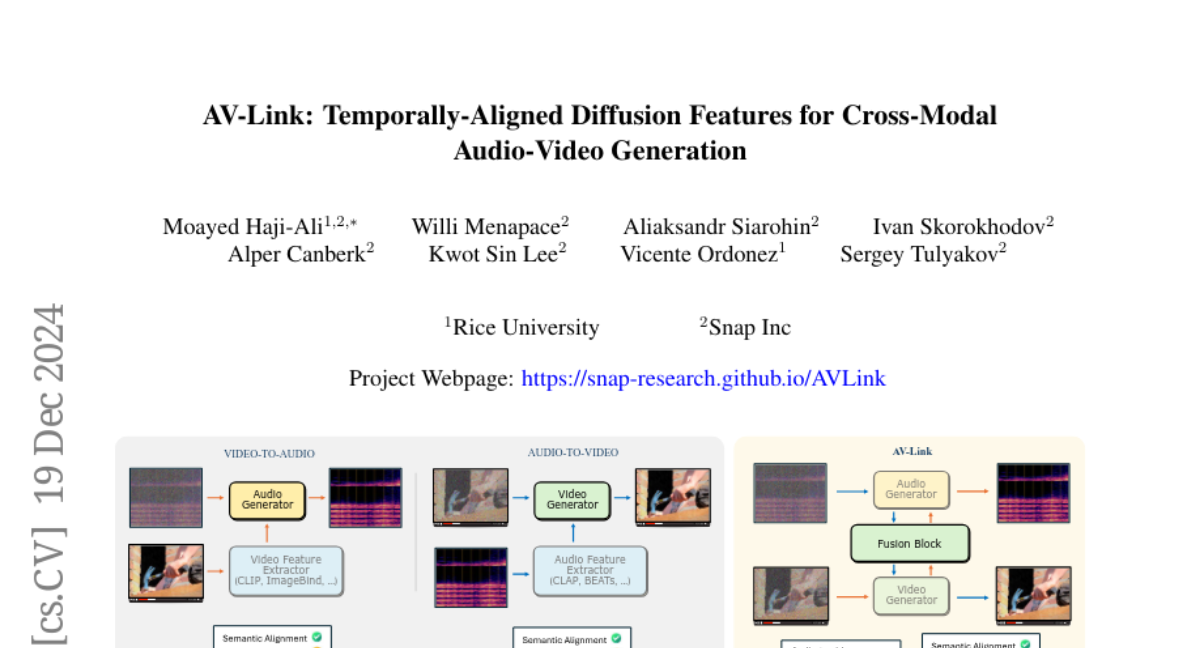

AV-Link addresses this issue by using a special structure called a Fusion Block, which enables the video and audio models to share information in real-time. This means that while generating audio from video or video from audio, the system can ensure that both elements are temporally aligned. The framework leverages features from both the video and audio models directly, allowing for better integration and synchronization of sound and visuals.

Why it matters?

This research is significant because it enhances how we can create multimedia content, making it easier to produce videos that have realistic soundtracks or audio that matches visual scenes. This has important applications in film production, gaming, and virtual reality, where high-quality audiovisual experiences are crucial.

Abstract

We propose AV-Link, a unified framework for Video-to-Audio and Audio-to-Video generation that leverages the activations of frozen video and audio diffusion models for temporally-aligned cross-modal conditioning. The key to our framework is a Fusion Block that enables bidirectional information exchange between our backbone video and audio diffusion models through a temporally-aligned self attention operation. Unlike prior work that uses feature extractors pretrained for other tasks for the conditioning signal, AV-Link can directly leverage features obtained by the complementary modality in a single framework i.e. video features to generate audio, or audio features to generate video. We extensively evaluate our design choices and demonstrate the ability of our method to achieve synchronized and high-quality audiovisual content, showcasing its potential for applications in immersive media generation. Project Page: snap-research.github.io/AVLink/