Baking Gaussian Splatting into Diffusion Denoiser for Fast and Scalable Single-stage Image-to-3D Generation

Yuanhao Cai, He Zhang, Kai Zhang, Yixun Liang, Mengwei Ren, Fujun Luan, Qing Liu, Soo Ye Kim, Jianming Zhang, Zhifei Zhang, Yuqian Zhou, Zhe Lin, Alan Yuille

2024-11-22

Summary

This paper presents DiffusionGS, a single-stage 3D diffusion model that generates 3D objects and scenes from a single image by outputting 3D Gaussian point clouds at every denoising timestep.

What's the problem?

Existing feed-forward image-to-3D methods mostly rely on 2D multi-view diffusion models, which cannot guarantee that the generated views are consistent in 3D. These methods tend to collapse when the viewing direction of the input image changes, and they mainly handle object-centric images rather than full scenes.

What's the solution?

DiffusionGS is a single-stage diffusion model that generates a 3D representation directly from a single view. Instead of denoising 2D multi-view images, its denoiser outputs a 3D Gaussian point cloud at each timestep, which enforces view consistency and lets the model handle input views from any direction, not just object-centric ones. To improve capability and generalization, the authors also scale up the 3D training data with a scene-object mixed training strategy. The reported result is higher generation quality (2.20 dB higher PSNR and 23.25 lower FID) at more than 5x the speed of prior state-of-the-art methods (about 6 seconds on an A100 GPU).

Why it matters?

This research matters because it makes single-image 3D generation both more consistent and faster: one model handles objects and scenes, tolerates input views from any direction, and produces a result in about 6 seconds on an A100 GPU. The authors also report a user study and text-to-3D applications as evidence of the method's practical value.

Abstract

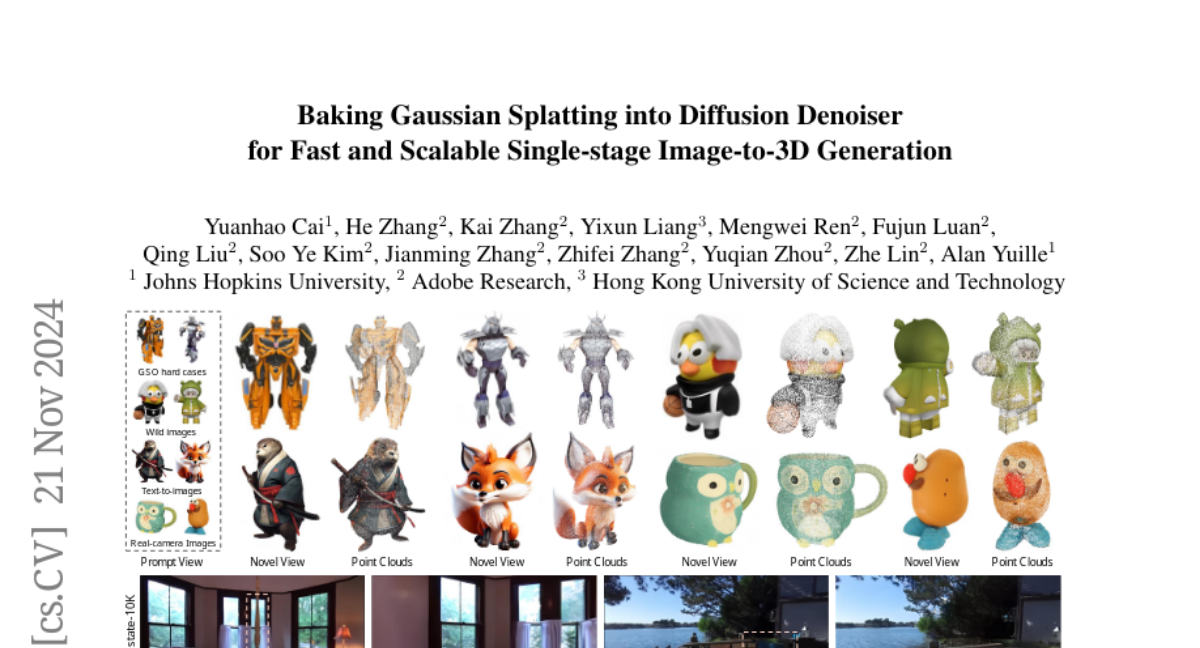

Existing feed-forward image-to-3D methods mainly rely on 2D multi-view diffusion models that cannot guarantee 3D consistency. These methods easily collapse when changing the prompt view direction and mainly handle object-centric prompt images. In this paper, we propose a novel single-stage 3D diffusion model, DiffusionGS, for object and scene generation from a single view. DiffusionGS directly outputs 3D Gaussian point clouds at each timestep to enforce view consistency and allow the model to generate robustly given prompt views of any direction, beyond object-centric inputs. In addition, to improve the capability and generalization ability of DiffusionGS, we scale up 3D training data by developing a scene-object mixed training strategy. Experiments show that our method achieves better generation quality (2.20 dB higher in PSNR and 23.25 lower in FID) and over 5x faster speed (~6s on an A100 GPU) than SOTA methods. The user study and text-to-3D applications also reveal the practical value of our method. Our project page at https://caiyuanhao1998.github.io/project/DiffusionGS/ shows the video and interactive generation results.
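To give a sense of what the reported 2.20 dB PSNR gain means, the sketch below computes PSNR using its standard definition, 10 · log10(MAX² / MSE), and converts a dB gain into the equivalent ratio of mean squared errors. This is not the authors' evaluation code: the function names `psnr` and `mse_ratio_for_gain` are hypothetical, and pixel values are assumed to be flat lists in the range [0, 1].

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Standard PSNR in dB between two equal-length lists of pixel values.

    Assumption: pixels are floats in [0, max_value]; this mirrors the usual
    definition, not any specific evaluation script from the paper.
    """
    if len(reference) != len(rendered) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - p) ** 2 for r, p in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """MSE of the better method divided by MSE of the baseline for a given dB gain."""
    return 10.0 ** (-gain_db / 10.0)


if __name__ == "__main__":
    reference = [0.0, 0.0, 0.0, 0.0]
    rendered = [0.1, 0.1, 0.1, 0.1]
    print(f"PSNR: {psnr(reference, rendered):.2f} dB")
    print(f"MSE ratio for +2.20 dB: {mse_ratio_for_gain(2.20):.3f}")
```

For a single image, a 2.20 dB gain corresponds to roughly 40% lower mean squared error. Benchmark PSNR is typically averaged over many images, so this is only a rough reading of the aggregate number.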