Benchmarking Multi-Image Understanding in Vision and Language Models: Perception, Knowledge, Reasoning, and Multi-Hop Reasoning

Bingchen Zhao, Yongshuo Zong, Letian Zhang, Timothy Hospedales

2024-06-19

Summary

This paper introduces a new benchmark called the Multi-Image Relational Benchmark (MIRB) that tests how well visual language models (VLMs) can understand and reason about multiple images. It aims to improve the evaluation of these models beyond just single-image tasks.

What's the problem?

Most existing tests for visual language models focus only on single images, which limits our understanding of how these models perform when they need to analyze and compare multiple images at once. This is a significant gap because many real-world applications require the ability to process and reason about several images together, such as in scenarios involving comparisons or complex visual relationships.

What's the solution?

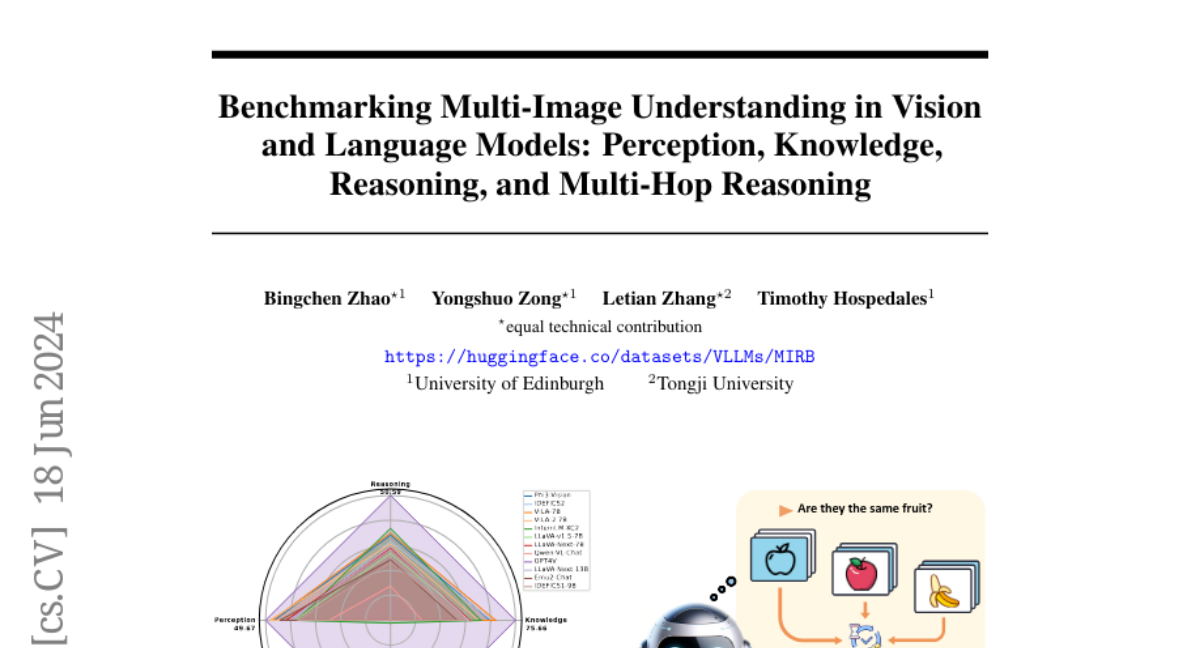

To address this issue, the authors created the MIRB, which evaluates VLMs on their ability to handle multiple images across four categories: perception (how well they see and interpret images), visual world knowledge (understanding context and information from images), reasoning (making logical deductions based on visual data), and multi-hop reasoning (connecting information across different images). They tested various open-source and closed-source models, including GPT-4V, and found that while some models performed well on single-image tasks, there was still a significant gap in their ability to reason with multiple images.

Why it matters?

This research is important because it highlights the need for better benchmarks that reflect the complexities of real-world visual tasks. By developing the MIRB, the authors provide a tool for researchers to improve VLMs, leading to more advanced models that can understand and interact with visual information more effectively. This could enhance applications in areas like autonomous vehicles, robotics, and augmented reality, where understanding multiple images is crucial.

Abstract

The advancement of large language models (LLMs) has significantly broadened the scope of applications in natural language processing, with multi-modal LLMs extending these capabilities to integrate and interpret visual data. However, existing benchmarks for visual language models (VLMs) predominantly focus on single-image inputs, neglecting the crucial aspect of multi-image understanding. In this paper, we introduce a Multi-Image Relational Benchmark MIRB, designed to evaluate VLMs' ability to compare, analyze, and reason across multiple images. Our benchmark encompasses four categories: perception, visual world knowledge, reasoning, and multi-hop reasoning. Through a comprehensive evaluation of a wide range of open-source and closed-source models, we demonstrate that while open-source VLMs were shown to approach the performance of GPT-4V in single-image tasks, a significant performance gap remains in multi-image reasoning tasks. Our findings also reveal that even the state-of-the-art GPT-4V model struggles with our benchmark, underscoring the need for further research and development in this area. We believe our contribution of MIRB could serve as a testbed for developing the next-generation multi-modal models.