BiGR: Harnessing Binary Latent Codes for Image Generation and Improved Visual Representation Capabilities

Shaozhe Hao, Xuantong Liu, Xianbiao Qi, Shihao Zhao, Bojia Zi, Rong Xiao, Kai Han, Kwan-Yee K. Wong

2024-10-21

Summary

This paper introduces BiGR, a conditional image generation model that represents images as compact binary latent codes and uses them to improve both image generation and visual representation within a single model.

What's the problem?

Generating high-quality images is challenging because a model must represent and generate both the overall structure of an image and its fine details. Most existing models also handle generating images and understanding them with separate approaches, which can limit their performance and efficiency.

What's the solution?

To address this, the authors developed BiGR, which unifies image generation and image understanding in one model. A binary tokenizer converts images into compact binary codes, a masked modeling mechanism learns to predict the codes of hidden tokens, and a binary transcoder handles the binary code prediction. The authors also introduce entropy-ordered sampling, a decoding method that makes image generation efficient. In extensive experiments, BiGR shows strong generation quality (measured by FID-50k) and strong representation quality (measured by linear-probe accuracy), and it generalizes zero-shot to tasks such as inpainting, outpainting, editing, interpolation, and enrichment without structural modifications.
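As a rough illustration of the binary-code and masked-modeling ideas, the following minimal Python sketch assumes that each image token is a short vector of bits obtained by thresholding a continuous latent at zero, and that masked modeling means hiding a random subset of tokens and scoring a model's per-bit probabilities with binary cross-entropy. The helper names (`binarize`, `random_mask`, `masked_bit_bce`) are hypothetical, and the fixed sign threshold only stands in for BiGR's learned binary tokenizer; this is not the authors' implementation.

```python
import math
import random
from typing import List

Bits = List[int]  # one token's binary code, e.g. [1, 0, 1]


def binarize(latent: List[float]) -> Bits:
    """Turn a continuous latent vector into a binary code by thresholding at zero.

    Assumption: this fixed sign threshold stands in for BiGR's learned binary tokenizer.
    """
    return [1 if x > 0 else 0 for x in latent]


def random_mask(num_tokens: int, mask_ratio: float, rng: random.Random) -> List[bool]:
    """Pick a random subset of token positions to hide; True means masked."""
    num_masked = min(num_tokens, max(1, round(num_tokens * mask_ratio)))
    masked = set(rng.sample(range(num_tokens), num_masked))
    return [i in masked for i in range(num_tokens)]


def masked_bit_bce(
    target: List[Bits], probs: List[List[float]], mask: List[bool], eps: float = 1e-7
) -> float:
    """Mean binary cross-entropy over the bits of masked tokens only.

    probs[i][k] is a (hypothetical) model's probability that bit k of token i is 1.
    """
    total, count = 0.0, 0
    for bits, p_bits, is_masked in zip(target, probs, mask):
        if not is_masked:
            continue  # visible tokens are context, not prediction targets
        for b, p in zip(bits, p_bits):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total -= b * math.log(p) + (1 - b) * math.log(1.0 - p)
            count += 1
    return total / count if count else 0.0
```

In this sketch each bit is treated as an independent 0/1 prediction, so the training signal is a plain binary cross-entropy rather than a softmax over a codebook of token indices.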

Why does it matter?

This research is important because it shows that one model can both generate and understand images well, rather than relying on separate models for each task. Because BiGR also handles tasks such as inpainting, outpainting, and editing without structural changes, it could be useful in applications where high-quality visuals are essential, such as graphic design, video game development, and virtual reality.

Abstract

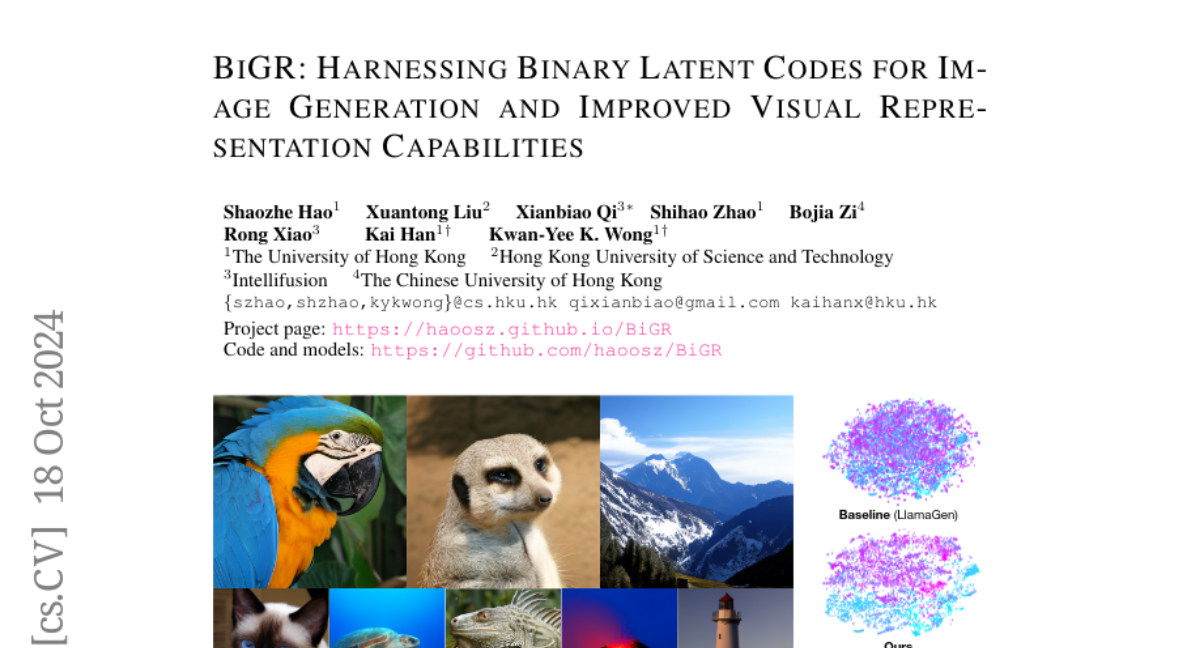

We introduce BiGR, a novel conditional image generation model using compact binary latent codes for generative training, focusing on enhancing both generation and representation capabilities. BiGR is the first conditional generative model that unifies generation and discrimination within the same framework. BiGR features a binary tokenizer, a masked modeling mechanism, and a binary transcoder for binary code prediction. Additionally, we introduce a novel entropy-ordered sampling method to enable efficient image generation. Extensive experiments validate BiGR's superior performance in generation quality, as measured by FID-50k, and representation capabilities, as evidenced by linear-probe accuracy. Moreover, BiGR showcases zero-shot generalization across various vision tasks, enabling applications such as image inpainting, outpainting, editing, interpolation, and enrichment, without the need for structural modifications. Our findings suggest that BiGR unifies generative and discriminative tasks effectively, paving the way for further advancements in the field.
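Entropy-ordered sampling can be sketched in the spirit of confidence-ordered masked decoding: at each step a model predicts a Bernoulli probability for every bit of every masked token, a token's entropy is taken as the sum of its bit entropies, and the lowest-entropy (most certain) tokens are filled in first. The minimal Python sketch below assumes a cosine schedule for how many tokens remain masked and a hypothetical `predict` callable that returns per-bit probabilities directly; BiGR's actual binary transcoder and schedule may differ.

```python
import math
import random
from typing import Callable, List, Optional

Token = Optional[List[int]]  # None marks a still-masked position
Predictor = Callable[[List[Token]], List[List[float]]]


def token_entropy(p_bits: List[float], eps: float = 1e-12) -> float:
    """Sum of per-bit Bernoulli entropies (in nats) for one token."""
    h = 0.0
    for p in p_bits:
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() stays finite
        h -= p * math.log(p) + (1.0 - p) * math.log(1.0 - p)
    return h


def entropy_ordered_sample(
    predict: Predictor, num_tokens: int, num_steps: int, rng: random.Random
) -> List[List[int]]:
    """Fill in all tokens over num_steps, lowest-entropy tokens first."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    tokens: List[Token] = [None] * num_tokens
    for step in range(num_steps):
        masked = [i for i, t in enumerate(tokens) if t is None]
        if not masked:
            break
        # Assumed cosine schedule: fraction of all tokens still masked after this step
        # (it reaches 0 at the final step, so every token is filled in by then).
        remain_frac = math.cos(math.pi / 2 * (step + 1) / num_steps)
        num_reveal = max(1, len(masked) - int(num_tokens * remain_frac))
        probs = predict(tokens)  # per-bit probabilities for every position
        # The most certain (lowest-entropy) masked tokens are committed first.
        masked.sort(key=lambda i: token_entropy(probs[i]))
        for i in masked[:num_reveal]:
            # Sample each bit from its predicted Bernoulli probability.
            tokens[i] = [1 if rng.random() < p else 0 for p in probs[i]]
    return [t for t in tokens if t is not None]  # all positions are filled here
```

With a toy `predict` that is certain about some tokens and uncertain (p = 0.5 for every bit) about another, the uncertain token is the last one to be filled in.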