Boosting Generative Image Modeling via Joint Image-Feature Synthesis

Theodoros Kouzelis, Efstathios Karypidis, Ioannis Kakogeorgiou, Spyros Gidaris, Nikos Komodakis

2025-04-25

Summary

This paper talks about a new way to train AI models to create images that look more realistic and detailed by having the model learn both the images and their important features at the same time.

What's the problem?

The problem is that most AI image generators either focus only on making the picture look good or only on matching certain features, but not both together. This can lead to images that are either pretty but not accurate, or accurate but not very high quality.

What's the solution?

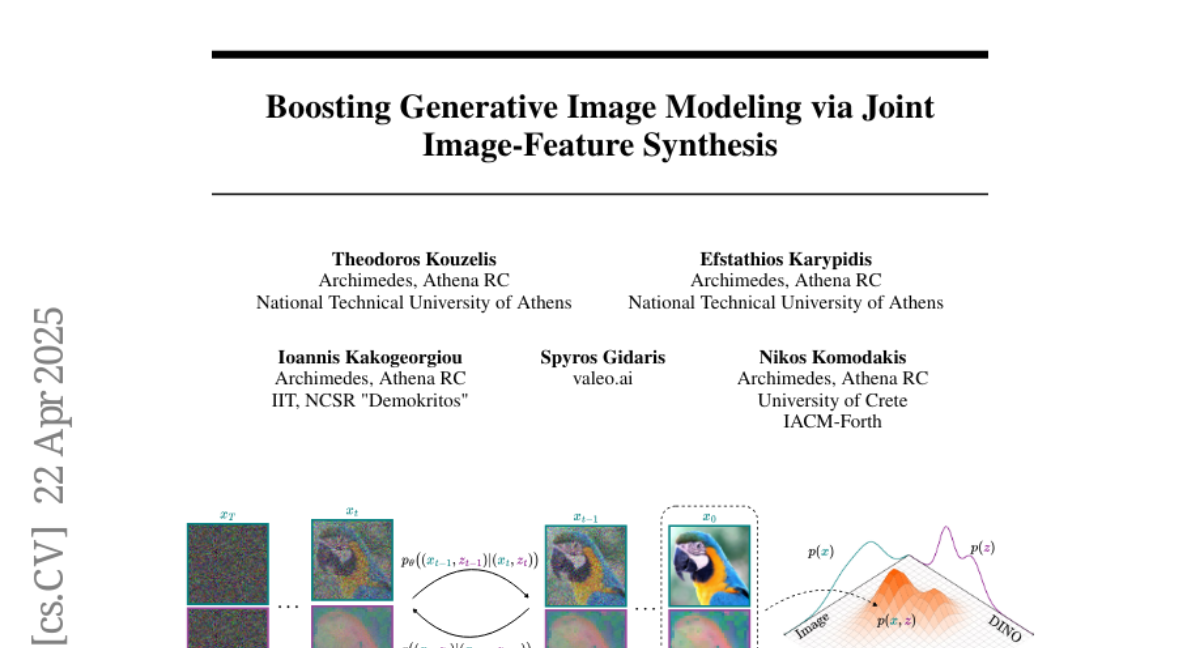

The researchers introduced a method called latent-semantic diffusion, which lets the AI model learn to generate images and their key features as a pair. They did this with only small changes to the usual way these models are built, making the training process more efficient while also improving the quality of the images.

Why it matters?

This matters because it means AI can make better, more useful images for things like art, design, and advertising, and it can do so faster and with less computer power than before.

Abstract

A novel generative image modeling framework uses latent-semantic diffusion to learn coherent image-feature pairs, enhancing generative quality and training efficiency with minimal modifications to standard architectures.