Breaking the Memory Barrier: Near Infinite Batch Size Scaling for Contrastive Loss

Zesen Cheng, Hang Zhang, Kehan Li, Sicong Leng, Zhiqiang Hu, Fei Wu, Deli Zhao, Xin Li, Lidong Bing

2024-10-25

Summary

This paper introduces a method for scaling the batch size used in contrastive-loss training far beyond previous limits, so that much larger batches fit in GPU memory.

What's the problem?

When training models with a contrastive loss, larger batches tend to work better because each sample is compared against more negative examples. However, the memory needed grows quadratically with batch size, which makes very large batches impractical. The main culprit is the full similarity matrix, which stores a score for every pair of samples in the batch.
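To see why the full similarity matrix becomes the bottleneck, a quick calculation helps: storing one score for every pair of samples means the matrix grows with the square of the batch size. This sketch assumes one float32 value (4 bytes) per entry and counts only the matrix itself, ignoring gradients, embeddings and model activations; the function name is illustrative.

```python
# Back-of-the-envelope memory cost of materializing the full B x B similarity
# (logit) matrix. Assumptions: one float32 (4 bytes) per entry, and only the
# matrix itself is counted (no gradients, embeddings, or activations).

GIB = 1024 ** 3


def similarity_matrix_bytes(batch_size: int, bytes_per_element: int = 4) -> int:
    """Bytes needed to store one score for every (query, candidate) pair."""
    return batch_size * batch_size * bytes_per_element


if __name__ == "__main__":
    for b in (32_768, 65_536, 1_048_576):
        print(f"batch {b:>9,}: {similarity_matrix_bytes(b) / GIB:,.0f} GiB")
```

Under these assumptions, 32,768 samples need 4 GiB, 65,536 need 16 GiB, and 1,048,576 (roughly one million) need 4,096 GiB, far more than a single 80 GB GPU holds. Every doubling of the batch quadruples the cost.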

What's the solution?

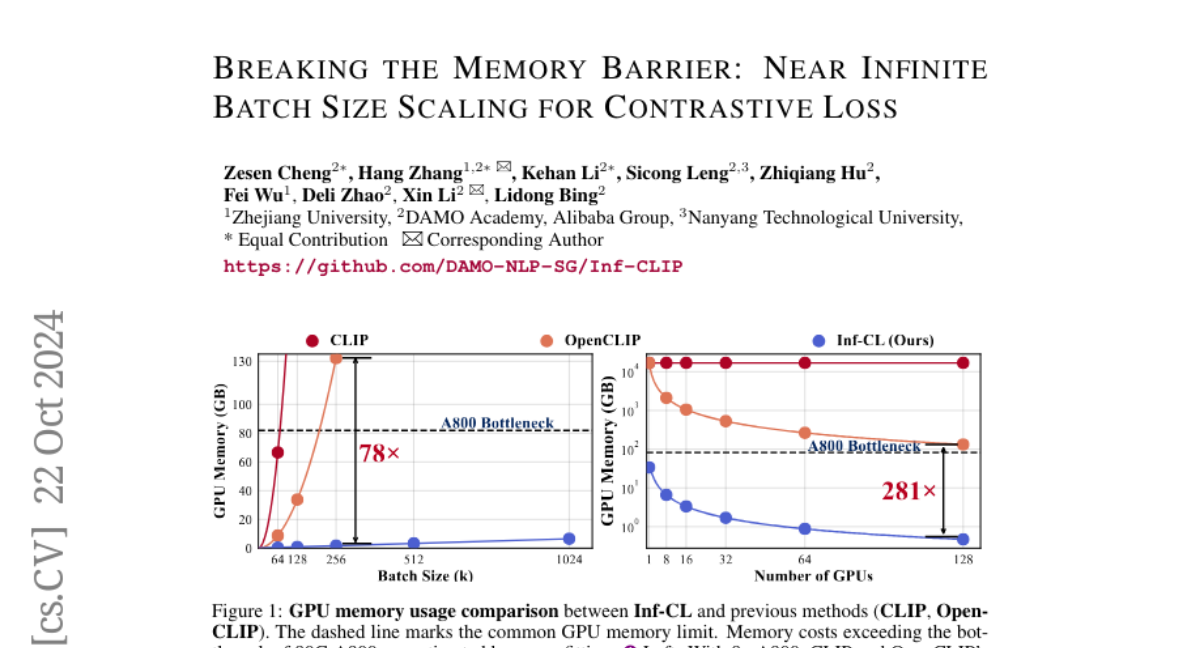

The authors propose a tile-based computation strategy that splits the contrastive loss calculation into small blocks, so the full similarity matrix never has to be built in memory. They also introduce a multi-level tiling approach that follows the hierarchy of distributed hardware: ring-based communication between GPUs and fused kernels within each GPU. This lets them train a CLIP-ViT-L/14 model with a batch size of 4 million on 8 A800 80GB GPUs, or 12 million on 32, without losing accuracy, while using about two orders of magnitude less memory than previous memory-efficient methods at comparable speed.
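The core idea of the tile-based strategy, computing the loss without ever holding the full similarity matrix, can be sketched in plain Python with an online (streaming) log-sum-exp. This is a minimal illustration under stated assumptions, not the paper's implementation: it computes only the image-to-text direction of an InfoNCE-style loss (CLIP averages this with the text-to-image direction), uses a fixed temperature, has no backward pass, and the function names are hypothetical. Each row keeps a running maximum and a running sum of exponentials; when a new tile arrives, the old sum is rescaled to the new maximum before the tile's terms are added, so the final log-sum-exp equals the one computed from the full row.

```python
import math
import random
from typing import List

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def random_unit_vectors(n: int, dim: int, rng: random.Random) -> List[Vec]:
    """L2-normalized random embeddings, standing in for encoder outputs."""
    out = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(dot(v, v))
        out.append([x / norm for x in v])
    return out


def full_matrix_loss(img: List[Vec], txt: List[Vec], temperature: float) -> float:
    """Reference: materialize the whole B x B logit matrix, then average row-wise InfoNCE."""
    n = len(img)
    logits = [[dot(img[i], txt[j]) / temperature for j in range(n)] for i in range(n)]
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        lse = m + math.log(sum(math.exp(x - m) for x in row))  # stable log-sum-exp
        total += lse - row[i]  # the positive pair sits on the diagonal
    return total / n


def tiled_loss(img: List[Vec], txt: List[Vec], temperature: float, tile: int) -> float:
    """Same loss without the B x B matrix: logits are produced one tile at a time
    and folded into per-row running statistics, then discarded."""
    n = len(img)
    total = 0.0
    for r0 in range(0, n, tile):
        rows = range(r0, min(r0 + tile, n))
        run_max = [-math.inf] * len(rows)  # running max of each row's logits
        run_sum = [0.0] * len(rows)  # running sum of exp(logit - run_max)
        for c0 in range(0, n, tile):
            cols = range(c0, min(c0 + tile, n))
            for k, i in enumerate(rows):
                chunk = [dot(img[i], txt[j]) / temperature for j in cols]
                new_max = max(run_max[k], max(chunk))
                # Rescale the old sum to the new max, then add this tile's terms.
                run_sum[k] = run_sum[k] * math.exp(run_max[k] - new_max) + sum(
                    math.exp(x - new_max) for x in chunk
                )
                run_max[k] = new_max
        for k, i in enumerate(rows):
            positive = dot(img[i], txt[i]) / temperature
            total += run_max[k] + math.log(run_sum[k]) - positive
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    img = random_unit_vectors(10, 8, rng)
    txt = random_unit_vectors(10, 8, rng)
    print(full_matrix_loss(img, txt, 0.07))
    print(tiled_loss(img, txt, 0.07, tile=3))
```

Changing `tile` trades peak memory for more loop iterations but leaves the loss unchanged up to floating-point rounding.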

Why does it matter?

This research matters because contrastive learning generally benefits from larger batches, and GPU memory has been the main obstacle to scaling them. By removing most of that memory cost, the method makes it practical to train contrastive models such as CLIP with batches of millions of samples, which can lead to stronger learned representations for downstream tasks like image recognition and image-text matching.

Abstract

Contrastive loss is a powerful approach for representation learning, where larger batch sizes enhance performance by providing more negative samples to better distinguish between similar and dissimilar data. However, scaling batch sizes is constrained by the quadratic growth in GPU memory consumption, primarily due to the full instantiation of the similarity matrix. To address this, we propose a tile-based computation strategy that partitions the contrastive loss calculation into arbitrarily small blocks, avoiding full materialization of the similarity matrix. Furthermore, we introduce a multi-level tiling strategy to leverage the hierarchical structure of distributed systems, employing ring-based communication at the GPU level to optimize synchronization and fused kernels at the CUDA core level to reduce I/O overhead. Experimental results show that the proposed method scales batch sizes to unprecedented levels. For instance, it enables contrastive training of a CLIP-ViT-L/14 model with a batch size of 4M or 12M using 8 or 32 A800 80GB GPUs without sacrificing any accuracy. Compared to SOTA memory-efficient solutions, it achieves a two-order-of-magnitude reduction in memory while maintaining comparable speed. The code will be made publicly available.
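The ring-based communication at the GPU level can be illustrated with a single-process simulation. This is a hedged sketch, not the authors' implementation: the P "devices" are just list indices, the exchange is a Python list rotation rather than real GPU communication, only the image-to-text direction of the loss is computed, and the names `ring_loss`, `lse_update` and `split` are hypothetical. Each device keeps its image shard fixed while text shards travel one hop around the ring per step, so after P steps every image row has seen every text embedding and the result matches the single-device loss.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def random_unit_vectors(n: int, dim: int, rng: random.Random) -> List[Vec]:
    out = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(dot(v, v))
        out.append([x / norm for x in v])
    return out


def split(xs: list, p: int) -> List[list]:
    """Split a list into p contiguous, nearly equal shards (assumes p <= len(xs))."""
    k, extra = divmod(len(xs), p)
    shards, start = [], 0
    for d in range(p):
        end = start + k + (1 if d < extra else 0)
        shards.append(xs[start:end])
        start = end
    return shards


def lse_update(run_max: float, run_sum: float, logits: List[float]) -> Tuple[float, float]:
    """Fold a chunk of logits into a running (max, sum of exp(logit - max)) pair."""
    new_max = max(run_max, max(logits))
    new_sum = run_sum * math.exp(run_max - new_max) + sum(math.exp(x - new_max) for x in logits)
    return new_max, new_sum


def ring_loss(img_shards: List[List[Vec]], txt_shards: List[List[Vec]], temperature: float) -> float:
    """Simulate P devices in a ring. Device d keeps img_shards[d] for the whole run
    and starts out holding text shard d; text shards then rotate one hop per step."""
    p = len(img_shards)
    stats = [[(-math.inf, 0.0) for _ in shard] for shard in img_shards]
    held = list(range(p))  # held[d] = which text shard device d currently has
    for _ in range(p):
        for d in range(p):  # on real hardware these would run in parallel
            for r, v in enumerate(img_shards[d]):
                logits = [dot(v, t) / temperature for t in txt_shards[held[d]]]
                stats[d][r] = lse_update(*stats[d][r], logits)
        # Ring hop: device d receives the shard that device d - 1 was holding.
        held = [held[(d - 1) % p] for d in range(p)]
    total, count = 0.0, 0
    for d in range(p):
        for r, v in enumerate(img_shards[d]):
            run_max, run_sum = stats[d][r]
            positive = dot(v, txt_shards[d][r]) / temperature  # aligned positive pair
            total += run_max + math.log(run_sum) - positive
            count += 1
    return total / count


def full_matrix_loss(img: List[Vec], txt: List[Vec], temperature: float) -> float:
    """Single-device reference computed row by row."""
    n = len(img)
    total = 0.0
    for i in range(n):
        row = [dot(img[i], txt[j]) / temperature for j in range(n)]
        m = max(row)
        total += m + math.log(sum(math.exp(x - m) for x in row)) - row[i]
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    img, txt = random_unit_vectors(12, 8, rng), random_unit_vectors(12, 8, rng)
    print(full_matrix_loss(img, txt, 0.07))
    print(ring_loss(split(img, 4), split(txt, 4), 0.07))
```

The simulation runs compute and exchange one after the other for clarity; a practical multi-GPU version would likely send the next shard while the current block is being computed, so communication is hidden behind computation.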