Can Large Vision Language Models Read Maps Like a Human?

Shuo Xing, Zezhou Sun, Shuangyu Xie, Kaiyuan Chen, Yanjia Huang, Yuping Wang, Jiachen Li, Dezhen Song, Zhengzhong Tu

2025-03-24

Summary

This paper is about testing whether AI models can understand and use maps like humans do.

What's the problem?

AI models that can understand both images and language often struggle with tasks that require spatial reasoning, like reading maps and following directions.

What's the solution?

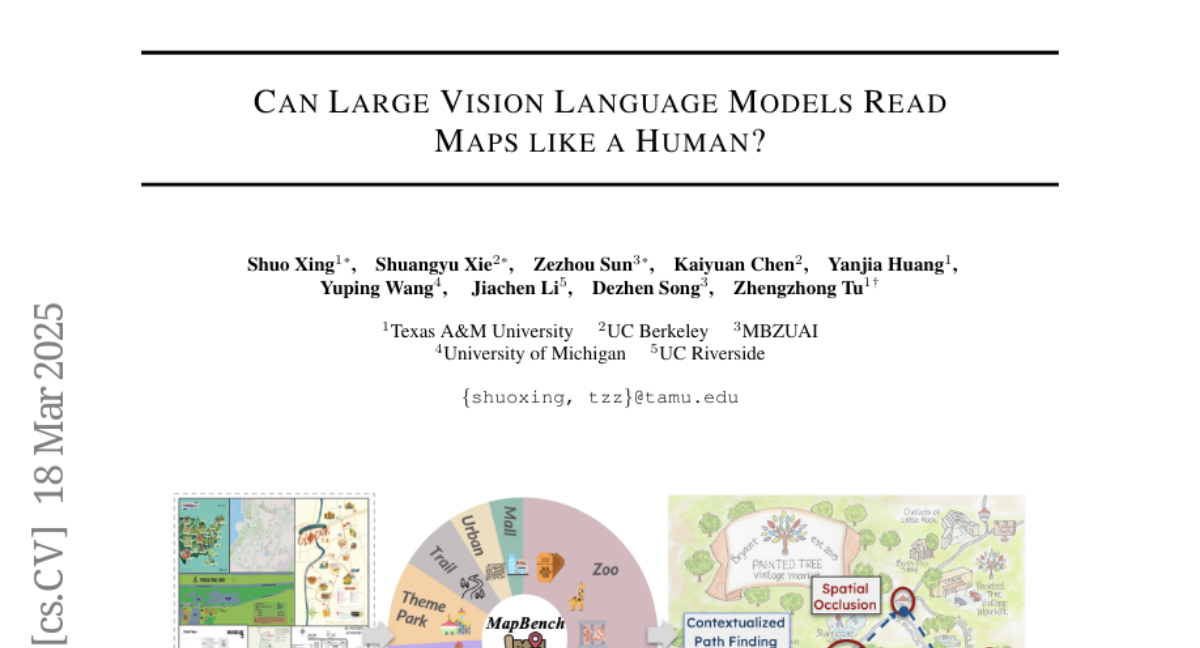

The researchers created a new test called MapBench that challenges AI models to navigate using maps and provide instructions in natural language.

Why it matters?

This work matters because it helps identify the limitations of current AI models in spatial reasoning and can lead to improvements in AI navigation systems.

Abstract

In this paper, we introduce MapBench-the first dataset specifically designed for human-readable, pixel-based map-based outdoor navigation, curated from complex path finding scenarios. MapBench comprises over 1600 pixel space map path finding problems from 100 diverse maps. In MapBench, LVLMs generate language-based navigation instructions given a map image and a query with beginning and end landmarks. For each map, MapBench provides Map Space Scene Graph (MSSG) as an indexing data structure to convert between natural language and evaluate LVLM-generated results. We demonstrate that MapBench significantly challenges state-of-the-art LVLMs both zero-shot prompting and a Chain-of-Thought (CoT) augmented reasoning framework that decomposes map navigation into sequential cognitive processes. Our evaluation of both open-source and closed-source LVLMs underscores the substantial difficulty posed by MapBench, revealing critical limitations in their spatial reasoning and structured decision-making capabilities. We release all the code and dataset in https://github.com/taco-group/MapBench.