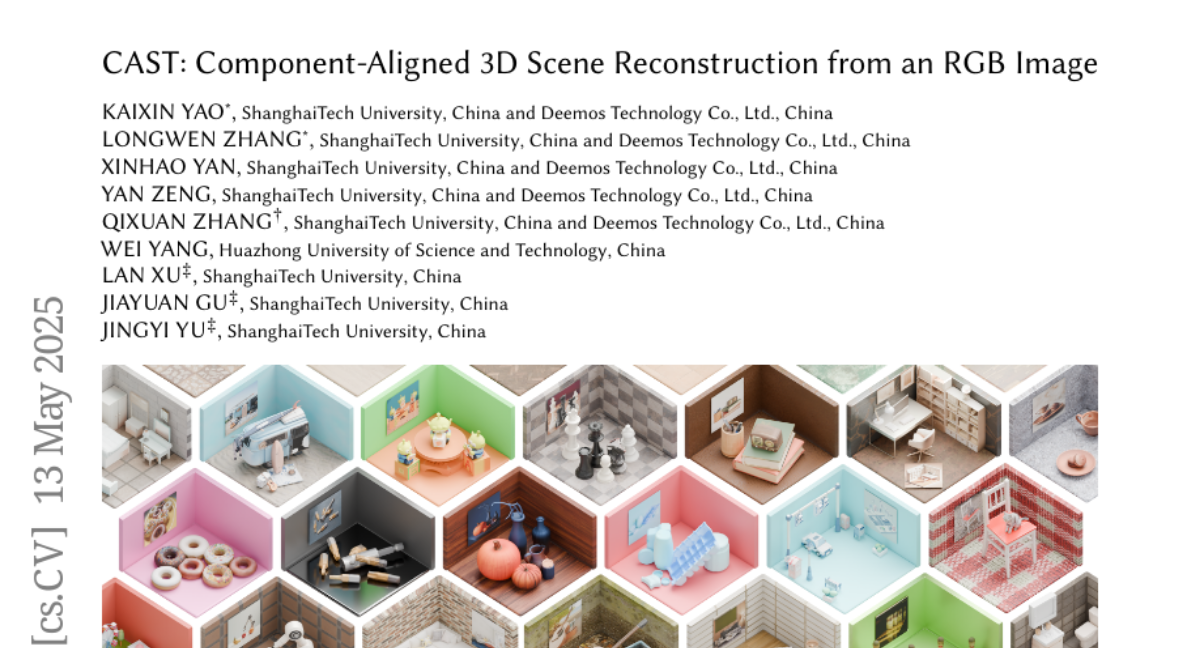

CAST: Component-Aligned 3D Scene Reconstruction from an RGB Image

Kaixin Yao, Longwen Zhang, Xinhao Yan, Yan Zeng, Qixuan Zhang, Lan Xu, Wei Yang, Jiayuan Gu, Jingyi Yu

2025-05-15

Summary

This paper introduces CAST, a method that reconstructs a detailed 3D scene from a single ordinary photo by combining AI models with physics-based constraints.

What's the problem?

Building an accurate 3D model from a single 2D image is hard, especially when parts of the scene are hidden or blocked from view, and most existing methods handle these occlusions poorly.

What's the solution?

The researchers designed CAST to first use AI models such as GPT to understand the image, then generate 3D shapes in a way that accounts for the hidden parts of each object, and finally apply a physics-based correction, built on Signed Distance Fields, so that the reconstructed scene is physically plausible.
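To make the idea of a physics-based correction with Signed Distance Fields more concrete, here is a minimal Python sketch. It is not CAST's implementation: the two objects are stand-in spheres, and the function names (`sphere_sdf`, `penetration_depth`, `resolve_overlap`) and the simple step-wise push are assumptions chosen for illustration. A signed distance field returns the distance from a point to an object's surface, negative inside the object in the convention used here, so sampling one object's surface and evaluating the other object's field reveals whether the two interpenetrate.

```python
import numpy as np

def sphere_sdf(points, center, radius):
    """Signed distance to a sphere: negative inside, positive outside."""
    return np.linalg.norm(points - center, axis=1) - radius

def sample_sphere_surface(center, radius, n=256, seed=0):
    """Sample n points on a sphere's surface (fixed seed for repeatability)."""
    rng = np.random.default_rng(seed)
    d = rng.normal(size=(n, 3))
    d /= np.linalg.norm(d, axis=1, keepdims=True)
    return center + radius * d

def penetration_depth(surface_points, other_center, other_radius):
    """Deepest penetration of the sampled points into the other object (0 if none)."""
    sdf = sphere_sdf(surface_points, other_center, other_radius)
    return float(max(0.0, -sdf.min()))

def resolve_overlap(center_a, radius_a, center_b, radius_b, step=0.01, max_iters=1000):
    """Hypothetical correction: push object A away from B until no sample penetrates."""
    center_a = np.asarray(center_a, dtype=float).copy()
    center_b = np.asarray(center_b, dtype=float)
    direction = center_a - center_b
    norm = np.linalg.norm(direction)
    # Fall back to the +z axis if the two centers coincide.
    direction = direction / norm if norm > 0 else np.array([0.0, 0.0, 1.0])
    for _ in range(max_iters):
        pts = sample_sphere_surface(center_a, radius_a)
        if penetration_depth(pts, center_b, radius_b) == 0.0:
            break
        center_a += step * direction
    return center_a

# Two unit spheres whose centers are only 0.5 apart, so they overlap.
a0 = np.array([0.0, 0.0, 0.5])
b0 = np.array([0.0, 0.0, 0.0])
before = penetration_depth(sample_sphere_surface(a0, 1.0), b0, 1.0)
a1 = resolve_overlap(a0, 1.0, b0, 1.0)
after = penetration_depth(sample_sphere_surface(a1, 1.0), b0, 1.0)
```

In this toy setup, `before` is positive because the spheres overlap, and `after` is zero once sphere A has been pushed clear of sphere B. A real system would work with fields of arbitrary shapes and would typically optimize full object poses rather than step along a single direction.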

Why does it matter?

Turning photos into 3D models quickly and accurately is useful in areas such as video games, virtual reality, architecture, and robotics.

Abstract

CAST is a method for reconstructing high-quality 3D scenes from single RGB images using GPT models, occlusion-aware 3D generation, and physics-aware correction with Signed Distance Fields.