Chain-of-Knowledge: Integrating Knowledge Reasoning into Large Language Models by Learning from Knowledge Graphs

Yifei Zhang, Xintao Wang, Jiaqing Liang, Sirui Xia, Lida Chen, Yanghua Xiao

2024-07-02

Summary

This paper introduces Chain-of-Knowledge, a framework designed to improve how large language models (LLMs) reason with knowledge. It uses knowledge graphs to help these models derive new knowledge from what they already know.

What's the problem?

While LLMs are good at various language tasks, they often struggle with knowledge reasoning, which means deriving new knowledge from existing knowledge. This kind of reasoning has been widely studied for knowledge graphs (which organize information in a way that shows relationships between different pieces of data), but it remains underexplored in LLMs. This gap means that LLMs might not be as effective as they could be in understanding and reasoning about complex information.

What's the solution?

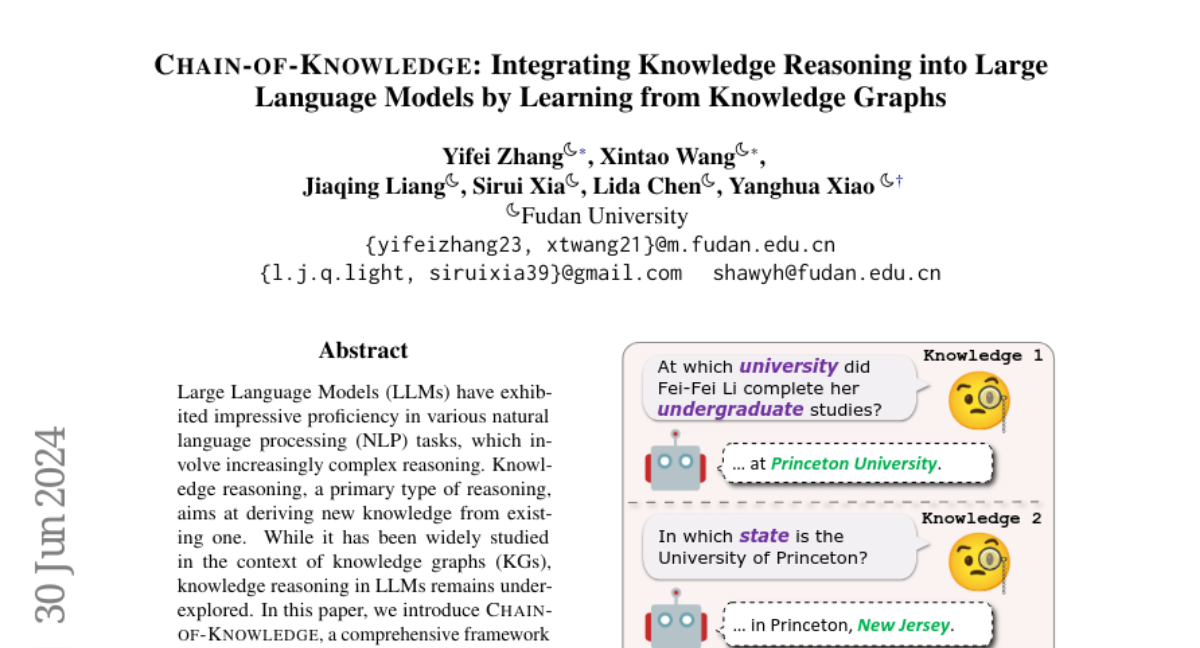

To address this issue, the authors developed the Chain-of-Knowledge (CoK) framework, which includes methods for both creating datasets and training models. They created a dataset called KnowReason by mining rules from knowledge graphs. They also observed that naive training leads to rule overfitting, so they added a trial-and-error mechanism to the training process that mimics how humans explore their own internal knowledge. Through extensive experiments with KnowReason, they showed that the framework improves LLMs on both knowledge reasoning and general reasoning benchmarks.
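To make the dataset-construction idea more concrete, here is a minimal sketch of mining a compositional rule from a toy knowledge graph and turning its instances into reasoning samples. It is an illustration under stated assumptions, not the authors' pipeline: the triples, the relation names, the `mine_two_hop_rules` and `build_samples` helpers, the confidence threshold, and the sample format are all hypothetical. It also does not cover the trial-and-error training mechanism.

```python
from collections import defaultdict

# Toy knowledge graph as (head, relation, tail) triples.
# All entities and relations here are hypothetical.
TRIPLES = [
    ("alice", "born_in", "paris"),
    ("paris", "city_of", "france"),
    ("alice", "nationality", "france"),
    ("bob", "born_in", "lyon"),
    ("lyon", "city_of", "france"),
    ("bob", "nationality", "france"),
    ("carol", "born_in", "berlin"),
    ("berlin", "city_of", "germany"),
]


def mine_two_hop_rules(triples, min_confidence=0.5):
    """Mine rules of the form r1(a, b) AND r2(b, c) -> r3(a, c).

    Confidence = (body matches where the head also holds) / (body matches).
    """
    facts = set(triples)
    relations = {r for _, r, _ in triples}
    by_head = defaultdict(list)
    for h, r, t in triples:
        by_head[h].append((r, t))

    body_count = defaultdict(int)  # matches of the rule body (r1, r2)
    rule_count = defaultdict(int)  # matches where r3(a, c) is also a fact
    for a, r1, b in triples:
        for r2, c in by_head[b]:
            body_count[(r1, r2)] += 1
            for r3 in relations:
                if (a, r3, c) in facts:
                    rule_count[(r1, r2, r3)] += 1

    rules = {}
    for (r1, r2, r3), n in rule_count.items():
        confidence = n / body_count[(r1, r2)]
        if confidence >= min_confidence:
            rules[(r1, r2, r3)] = confidence
    return rules


def build_samples(rule, triples):
    """Turn every instance of the rule body into a question with a reasoning chain."""
    r1, r2, r3 = rule
    samples = []
    for a, ra, b in triples:
        if ra != r1:
            continue
        for h, rb, c in triples:
            if h == b and rb == r2:
                samples.append({
                    "question": f"What is the {r3} of {a}?",
                    "reasoning": f"{a} {r1} {b}. {b} {r2} {c}. Therefore {a} {r3} {c}.",
                    "answer": c,
                })
    return samples


rules = mine_two_hop_rules(TRIPLES)
samples = [s for rule in rules for s in build_samples(rule, TRIPLES)]
for s in samples:
    print(s["question"], "->", s["answer"])
```

In this toy setup the mined rule is applied even to an entity whose conclusion is not stored in the graph (here, `carol`), which is the sense in which new knowledge is derived from existing facts.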

Why does it matter?

This research is important because it provides a structured way to improve how AI models handle knowledge reasoning. By integrating knowledge graphs into the training process, Chain-of-Knowledge can help create more intelligent and capable AI systems. This advancement is crucial for applications that require deep understanding and reasoning, such as research, education, and decision-making in various fields.

Abstract

Large Language Models (LLMs) have exhibited impressive proficiency in various natural language processing (NLP) tasks, which involve increasingly complex reasoning. Knowledge reasoning, a primary type of reasoning, aims at deriving new knowledge from existing one. While it has been widely studied in the context of knowledge graphs (KGs), knowledge reasoning in LLMs remains underexplored. In this paper, we introduce Chain-of-Knowledge, a comprehensive framework for knowledge reasoning, including methodologies for both dataset construction and model learning. For dataset construction, we create KnowReason via rule mining on KGs. For model learning, we observe rule overfitting induced by naive training. Hence, we enhance CoK with a trial-and-error mechanism that simulates the human process of internal knowledge exploration. We conduct extensive experiments with KnowReason. Our results show the effectiveness of CoK in refining LLMs in not only knowledge reasoning, but also general reasoning benchmarks.