CLAIR-A: Leveraging Large Language Models to Judge Audio Captions

Tsung-Han Wu, Joseph E. Gonzalez, Trevor Darrell, David M. Chan

2024-09-20

Summary

This paper discusses CLAIR-A, a new method that uses large language models (LLMs) to evaluate how well computer-generated audio captions describe sounds. It aims to improve the accuracy of these evaluations by better aligning them with human judgment.

What's the problem?

Evaluating audio captions generated by machines is complicated because it requires understanding many different factors, such as the context of the sounds and how they relate to each other. Current evaluation methods often focus on specific details but fail to give an overall score that matches what humans would think about the quality of the captions.

What's the solution?

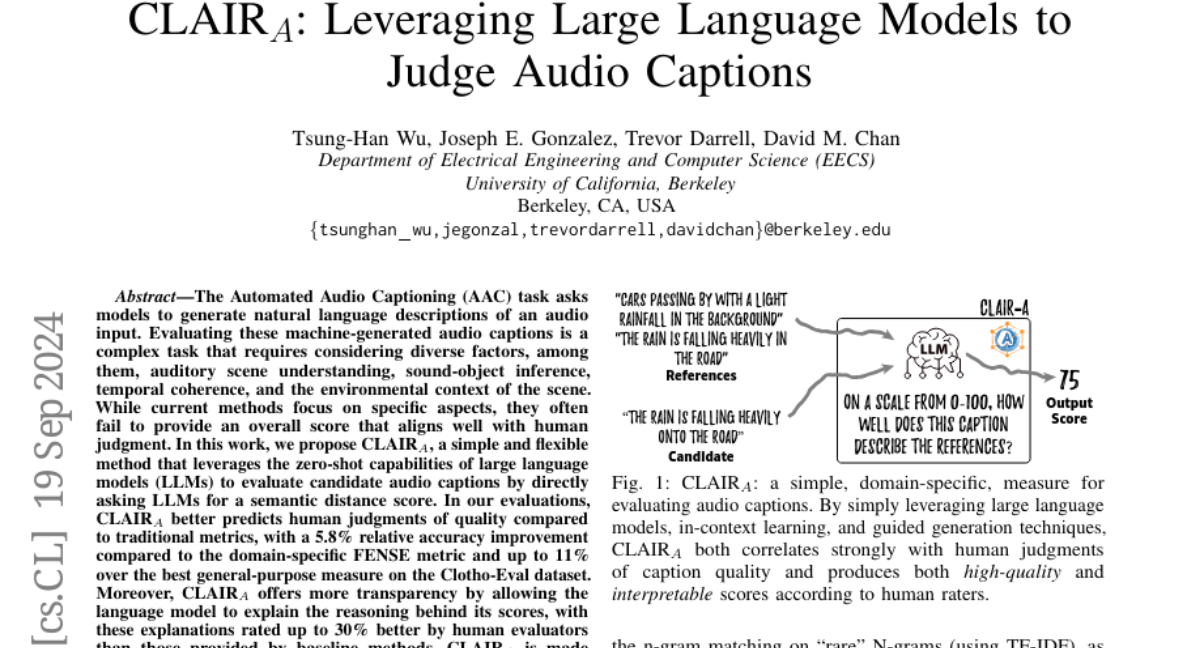

CLAIR-A addresses this problem by using LLMs to directly assess the quality of audio captions. Instead of relying on traditional scoring methods, CLAIR-A asks the LLM to provide a score based on how well the caption matches the meaning of the audio. This approach has shown to be more accurate than existing methods, with improvements in predicting human judgments. Additionally, CLAIR-A allows the LLM to explain its scoring, making it easier for people to understand why a certain score was given.

Why it matters?

This research is important because it enhances the way we evaluate audio captioning systems, which are used in various applications like accessibility tools and media indexing. By providing a more reliable and understandable evaluation method, CLAIR-A can help improve the quality of automated audio descriptions, benefiting both developers and users.

Abstract

The Automated Audio Captioning (AAC) task asks models to generate natural language descriptions of an audio input. Evaluating these machine-generated audio captions is a complex task that requires considering diverse factors, among them, auditory scene understanding, sound-object inference, temporal coherence, and the environmental context of the scene. While current methods focus on specific aspects, they often fail to provide an overall score that aligns well with human judgment. In this work, we propose CLAIR-A, a simple and flexible method that leverages the zero-shot capabilities of large language models (LLMs) to evaluate candidate audio captions by directly asking LLMs for a semantic distance score. In our evaluations, CLAIR-A better predicts human judgements of quality compared to traditional metrics, with a 5.8% relative accuracy improvement compared to the domain-specific FENSE metric and up to 11% over the best general-purpose measure on the Clotho-Eval dataset. Moreover, CLAIR-A offers more transparency by allowing the language model to explain the reasoning behind its scores, with these explanations rated up to 30% better by human evaluators than those provided by baseline methods. CLAIR-A is made publicly available at https://github.com/DavidMChan/clair-a.