CLEAR: Conv-Like Linearization Revs Pre-Trained Diffusion Transformers Up

Songhua Liu, Zhenxiong Tan, Xinchao Wang

2024-12-23

Summary

This paper introduces CLEAR, a method that makes Diffusion Transformers (DiTs) for image generation more efficient by changing how they compute attention, the mechanism that lets each part of an image draw on information from other parts.

What's the problem?

Diffusion Transformers are great for creating images, but they become slow at high resolutions because the cost of their attention mechanism grows quadratically with the number of image tokens. As a result, generating high-resolution images takes a lot of time and computing power, which limits practical use.

What's the solution?

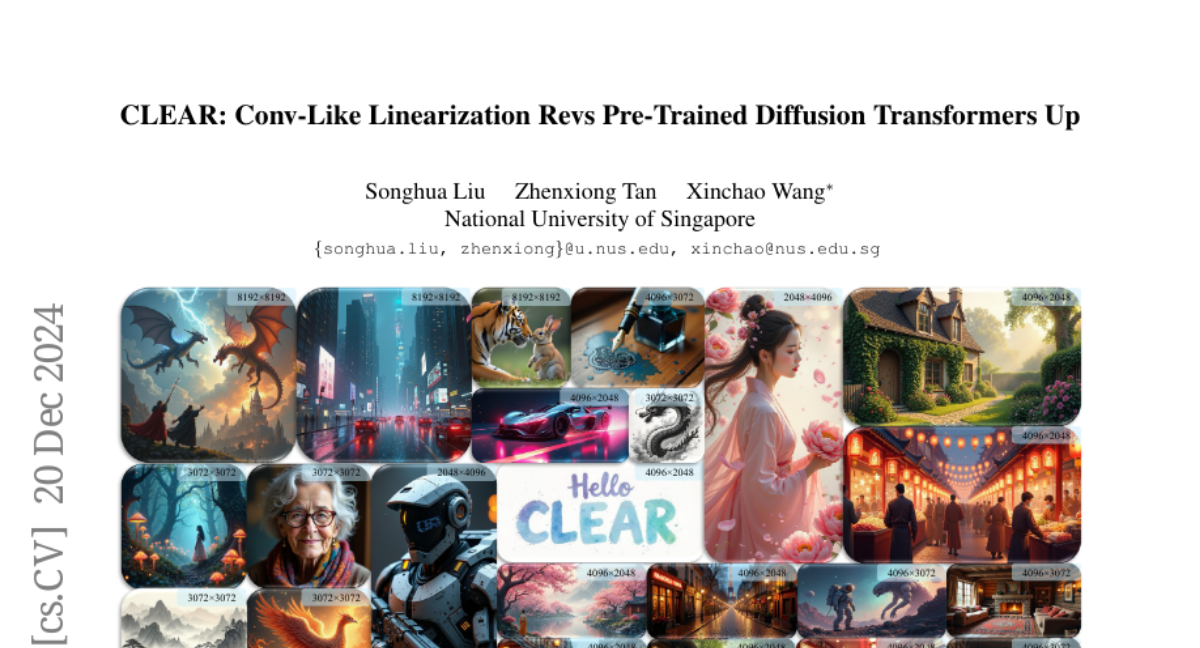

CLEAR introduces a convolution-like local attention strategy. Instead of letting each token attend to every other token in the image, it restricts each token to a local window around itself. This reduces the computational complexity of attention from quadratic to linear in the number of tokens. The researchers report that, after a short fine-tuning stage, the resulting model generates 8K-resolution images 6.3 times faster while producing results comparable to the original model.
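To make the idea of a local window concrete, here is a minimal NumPy sketch of attention restricted to a neighborhood on a 2D token grid. It is an illustration under stated assumptions, not the authors' implementation: the circular window shape, the `radius` parameter, and the function names `local_window_mask` and `local_attention` are all hypothetical. The sketch also builds a dense mask for readability, so it still costs quadratic time; a practical kernel would skip the masked pairs entirely.

```python
import numpy as np

def local_window_mask(height, width, radius):
    """Boolean (N, N) mask, N = height * width.

    Entry [i, j] is True when key token j lies within Euclidean distance
    `radius` of query token i on the 2D token grid (assumed window shape).
    """
    ys, xs = np.meshgrid(np.arange(height), np.arange(width), indexing="ij")
    coords = np.stack([ys.ravel(), xs.ravel()], axis=-1)   # (N, 2) grid positions
    diff = coords[:, None, :] - coords[None, :, :]         # (N, N, 2) pairwise offsets
    return (diff ** 2).sum(axis=-1) <= radius ** 2

def local_attention(q, k, v, mask):
    """Softmax attention where each query only sees keys allowed by `mask`."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)               # block out-of-window keys
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v

# Hypothetical toy sizes: an 8x8 token grid with 16-dimensional features.
rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((64, 16)) for _ in range(3))
out = local_attention(q, k, v, local_window_mask(8, 8, radius=2))
```

Every query always sees at least itself, so no row of the mask is empty and the softmax stays well defined. With a radius large enough to cover the whole grid, the sketch reduces to ordinary full attention.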

Why does it matter?

This research is important because it makes it easier and faster to create high-quality images using AI. By improving the efficiency of image generation, CLEAR can benefit various fields such as graphic design, video game development, and virtual reality, where quick and high-quality visual content is essential.

Abstract

Diffusion Transformers (DiT) have become a leading architecture in image generation. However, the quadratic complexity of attention mechanisms, which are responsible for modeling token-wise relationships, results in significant latency when generating high-resolution images. To address this issue, in this paper we aim at a linear attention mechanism that reduces the complexity of pre-trained DiTs to linear. We begin our exploration with a comprehensive summary of existing efficient attention mechanisms and identify four key factors crucial for successful linearization of pre-trained DiTs: locality, formulation consistency, high-rank attention maps, and feature integrity. Based on these insights, we introduce a convolution-like local attention strategy termed CLEAR, which limits feature interactions to a local window around each query token, and thus achieves linear complexity. Our experiments indicate that, by fine-tuning the attention layer on merely 10K self-generated samples for 10K iterations, we can effectively transfer knowledge from a pre-trained DiT to a student model with linear complexity, yielding results comparable to the teacher model. Simultaneously, it reduces attention computations by 99.5% and accelerates generation by 6.3 times for generating 8K-resolution images. Furthermore, we investigate favorable properties in the distilled attention layers, such as zero-shot generalization across various models and plugins, and improved support for multi-GPU parallel inference. Models and code are available here: https://github.com/Huage001/CLEAR.
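The complexity claim can be checked with simple counting. The sketch below compares the number of query-key pairs under full attention with the number under a fixed-size local window. The grid sizes, the radius, the circular window shape, and the helper names `window_size` and `attention_pairs` are assumptions chosen for illustration; the sketch does not attempt to reproduce the 99.5% figure reported in the abstract.

```python
def window_size(radius):
    """Count integer grid offsets (dy, dx) with dy^2 + dx^2 <= radius^2."""
    r = int(radius)
    return sum(
        1
        for dy in range(-r, r + 1)
        for dx in range(-r, r + 1)
        if dy * dy + dx * dx <= radius * radius
    )

def attention_pairs(height, width, radius=None):
    """Query-key pairs on a height x width token grid.

    radius=None means full attention (N * N pairs). Otherwise each query
    sees at most window_size(radius) keys; border clipping is ignored,
    so the local count is an upper bound.
    """
    n = height * width
    if radius is None:
        return n * n
    return n * min(window_size(radius), n)

# Hypothetical grids: doubling the side length quadruples the token count.
for side in (64, 128, 256):
    full = attention_pairs(side, side)
    local = attention_pairs(side, side, radius=8)
    print(side, full, local, f"{1 - local / full:.4f}")
```

Because the window size depends only on the radius, the local count grows in proportion to the number of tokens, while the full count grows with its square. The fraction of pairs saved therefore increases with resolution.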