Click2Mask: Local Editing with Dynamic Mask Generation

Omer Regev, Omri Avrahami, Dani Lischinski

2024-09-16

Summary

This paper introduces Click2Mask, a method for editing a specific part of an image by clicking a single point and describing the content to add, which removes the need to draw a precise mask and makes local editing simpler and less error-prone.

What's the problem?

Traditional image editing methods often require users to create precise masks or give detailed descriptions of the areas they want to edit. This can be complicated and lead to mistakes, especially for people who are not experts in image editing.

What's the solution?

Click2Mask simplifies this process: the user clicks a single point in the image and provides a text description of the content to add. A mask is then grown dynamically around that point during a Blended Latent Diffusion (BLD) process, guided by a masked CLIP-based semantic loss. As a result, users can add content to an image without drawing an exact selection or being limited to existing segments of the image.
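The summary does not spell out how the mask grows from the click. As a purely illustrative stand-in, the sketch below uses classic seeded region growing rather than Click2Mask's CLIP-guided procedure: starting from the clicked pixel, it adds 4-connected neighbors whose value in a relevance map meets a threshold, and lowering the threshold over a schedule makes the mask expand step by step. The `score` map, the threshold schedule, and the function names `grow_mask_from_click` and `grow_over_steps` are hypothetical assumptions.

```python
from collections import deque


# Hypothetical illustration: seeded region growing, NOT Click2Mask's CLIP-guided mask update.
def grow_mask_from_click(score, click, threshold):
    """Return a 0/1 mask of pixels 4-connected to `click` whose score is >= threshold.

    `score` is a 2D list of floats (a hypothetical relevance map);
    `click` is a (row, col) tuple. The clicked pixel is always included.
    """
    rows, cols = len(score), len(score[0])
    mask = [[0] * cols for _ in range(rows)]
    r0, c0 = click
    mask[r0][c0] = 1  # the clicked point always belongs to the mask
    if score[r0][c0] < threshold:
        return mask  # the seed itself is below threshold, so nothing grows yet
    queue = deque([click])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not mask[nr][nc] and score[nr][nc] >= threshold):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


def grow_over_steps(score, click, thresholds):
    """Return one mask per threshold; a decreasing schedule yields nested, growing masks."""
    return [grow_mask_from_click(score, click, t) for t in thresholds]


if __name__ == "__main__":
    score = [
        [0.1, 0.2, 0.1, 0.0],
        [0.2, 0.9, 0.8, 0.1],
        [0.1, 0.7, 0.6, 0.1],
        [0.0, 0.1, 0.1, 0.0],
    ]
    stages = grow_over_steps(score, (1, 1), [0.85, 0.65, 0.15])
    print([sum(map(sum, m)) for m in stages])  # [1, 3, 6]
```

Because each stage uses a lower threshold than the one before, every mask contains the previous one, which loosely mirrors a mask that only expands around the clicked point.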

Why does it matter?

This research is important because it makes local image editing more accessible to non-experts. Click2Mask reduces user input to a single click plus a description while delivering results that are competitive with or better than state-of-the-art methods, according to both human judgment and automatic metrics, which makes it useful for artists, designers, and anyone who wants to add content to their photos with little effort.

Abstract

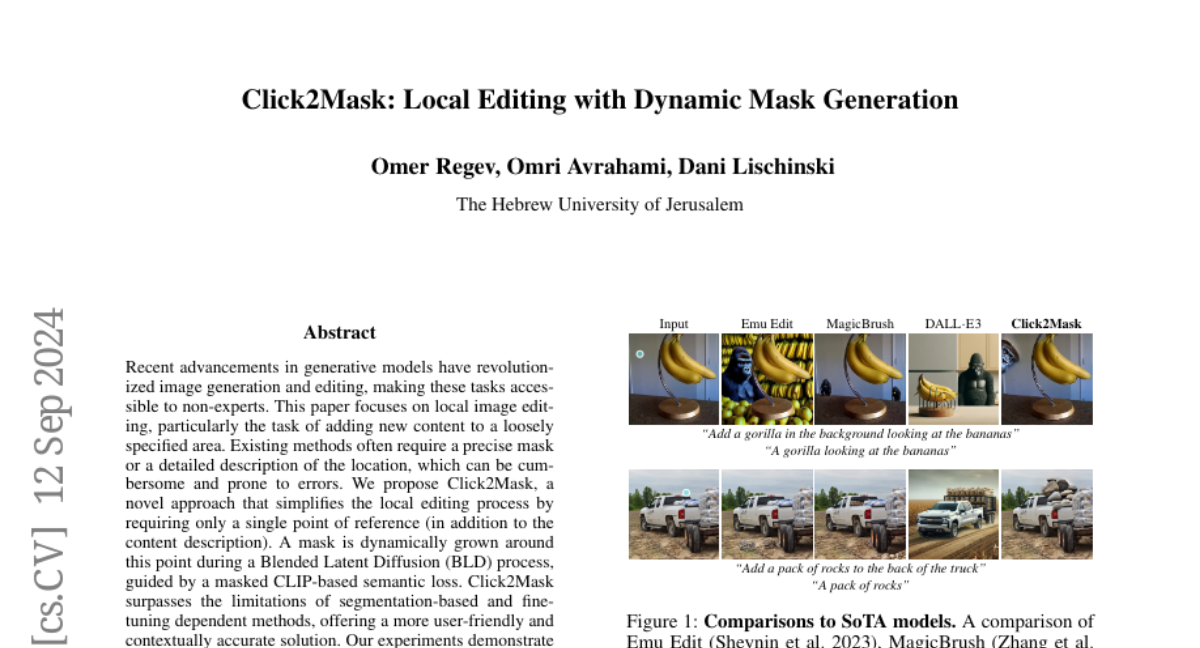

Recent advancements in generative models have revolutionized image generation and editing, making these tasks accessible to non-experts. This paper focuses on local image editing, particularly the task of adding new content to a loosely specified area. Existing methods often require a precise mask or a detailed description of the location, which can be cumbersome and prone to errors. We propose Click2Mask, a novel approach that simplifies the local editing process by requiring only a single point of reference (in addition to the content description). A mask is dynamically grown around this point during a Blended Latent Diffusion (BLD) process, guided by a masked CLIP-based semantic loss. Click2Mask overcomes the limitations of segmentation-based and fine-tuning-dependent methods, offering a more user-friendly and contextually accurate solution. Our experiments demonstrate that Click2Mask not only minimizes user effort but also delivers competitive or superior local image manipulation results compared to state-of-the-art (SoTA) methods, according to both human judgement and automatic metrics. Key contributions include the simplification of user input, the ability to freely add objects unconstrained by existing segments, and the integration potential of our dynamic mask approach within other editing methods.
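Blended Latent Diffusion, on which Click2Mask builds, blends latents at every denoising step: inside the mask it keeps the text-guided latent, and outside it keeps the source image's latent noised to the same step, so the background is preserved. The minimal sketch below shows that blending on flat lists of floats, plus a loop that accepts a different mask at each step to mirror the mask that grows during the process. The function names and the `denoise_step` and `noised_background` callables are hypothetical placeholders; a real implementation works on VAE latents with a pretrained diffusion model, and the masked CLIP loss that drives Click2Mask's mask updates is not modeled here.

```python
# Hypothetical sketch of Blended Latent Diffusion-style blending on flattened latents.
from typing import Callable, List, Sequence

Latent = List[float]


def blend_latents(generated: Sequence[float], background_noised: Sequence[float],
                  mask: Sequence[float]) -> Latent:
    """Keep `generated` where mask is 1 and the noised source latent where mask is 0.

    Fractional mask values interpolate linearly between the two.
    """
    if not len(generated) == len(background_noised) == len(mask):
        raise ValueError("generated, background_noised and mask must have equal length")
    return [m * g + (1.0 - m) * b for g, b, m in zip(generated, background_noised, mask)]


def run_blended_steps(z_init: Sequence[float], masks: Sequence[Sequence[float]],
                      denoise_step: Callable[[Latent, int], Latent],
                      noised_background: Callable[[int], Latent]) -> Latent:
    """Apply one (placeholder) denoising update per step, then blend with that step's mask.

    Passing a different mask per step mimics a mask that changes during generation.
    """
    z = list(z_init)
    for step, mask in enumerate(masks):
        z = denoise_step(z, step)  # text-guided update (hypothetical callable)
        z = blend_latents(z, noised_background(step), mask)  # restore background outside mask
    return z


if __name__ == "__main__":
    print(blend_latents([1.0, 2.0, 3.0], [-1.0, -2.0, -3.0], [1.0, 0.0, 0.5]))  # [1.0, -2.0, 0.0]
    # The second element joins the mask only at step 1, so it keeps just one update.
    print(run_blended_steps([5.0, 5.0], [[1.0, 0.0], [1.0, 1.0]],
                            lambda z, s: [v + 1.0 for v in z],
                            lambda s: [0.0, 0.0]))  # [7.0, 1.0]
```

The per-step mask argument is the only change from a fixed-mask blend; it shows why a region added to the mask late receives generated content only from that step onward.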