Coffee-Gym: An Environment for Evaluating and Improving Natural Language Feedback on Erroneous Code

Hyungjoo Chae, Taeyoon Kwon, Seungjun Moon, Yongho Song, Dongjin Kang, Kai Tzu-iunn Ong, Beong-woo Kwak, Seonghyeon Bae, Seung-won Hwang, Jinyoung Yeo

2024-10-01

Summary

This paper introduces Coffee-Gym, a new environment designed to help train models that provide feedback on coding errors, improving how these models assist with code editing.

What's the problem?

Many existing methods for training models to give feedback on code edits lack high-quality datasets and effective ways to measure how helpful the feedback is. This makes it difficult to create models that can accurately help programmers fix their code.

What's the solution?

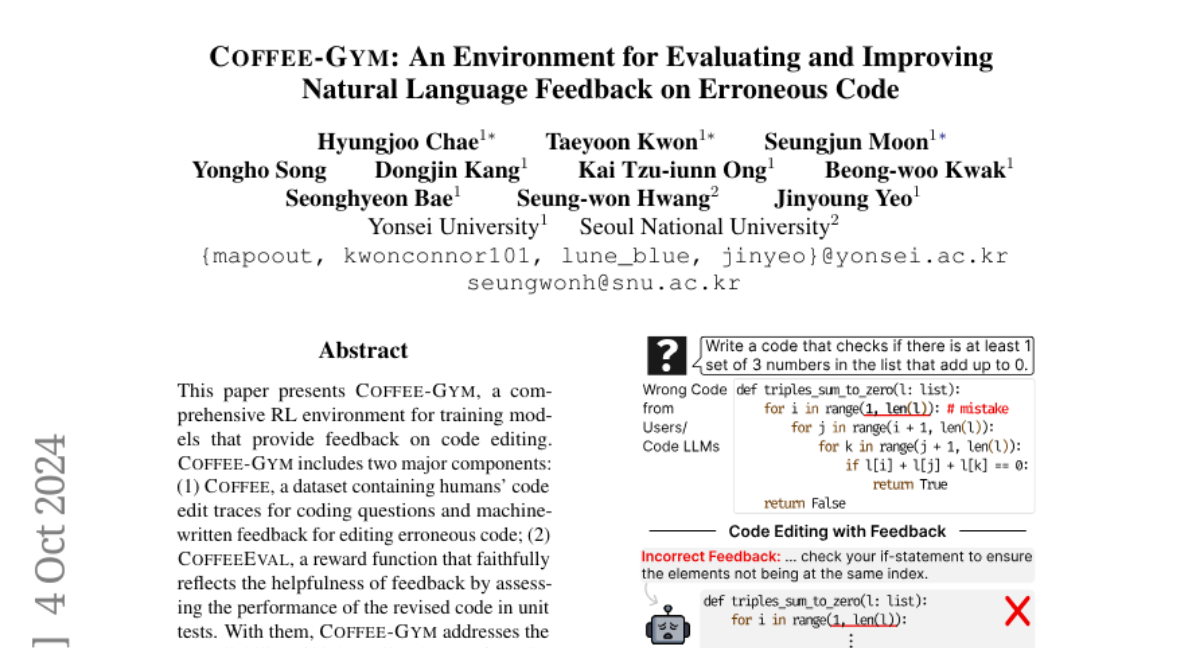

Coffee-Gym consists of two main parts: a dataset called Coffee, which contains real examples of code edits made by humans along with machine-generated feedback, and CoffeeEval, a reward system that measures how useful the feedback is based on how well the revised code performs in tests. By using this environment, the authors were able to train models that give better feedback and improve the performance of existing coding tools.

Why it matters?

This research is important because it enhances the ability of AI systems to assist developers in fixing code errors. By providing more accurate and helpful feedback, Coffee-Gym can lead to better coding practices and more efficient software development.

Abstract

This paper presents Coffee-Gym, a comprehensive RL environment for training models that provide feedback on code editing. Coffee-Gym includes two major components: (1) Coffee, a dataset containing humans' code edit traces for coding questions and machine-written feedback for editing erroneous code; (2) CoffeeEval, a reward function that faithfully reflects the helpfulness of feedback by assessing the performance of the revised code in unit tests. With them, Coffee-Gym addresses the unavailability of high-quality datasets for training feedback models with RL, and provides more accurate rewards than the SOTA reward model (i.e., GPT-4). By applying Coffee-Gym, we elicit feedback models that outperform baselines in enhancing open-source code LLMs' code editing, making them comparable with closed-source LLMs. We make the dataset and the model checkpoint publicly available.