Collaborative Instance Navigation: Leveraging Agent Self-Dialogue to Minimize User Input

Francesco Taioli, Edoardo Zorzi, Gianni Franchi, Alberto Castellini, Alessandro Farinelli, Marco Cristani, Yiming Wang

2024-12-03

Summary

This paper introduces Collaborative Instance Navigation (CoIN), a new approach that allows AI agents to interact with humans in real-time during navigation tasks, reducing the need for detailed human instructions.

What's the problem?

In existing navigation tasks, AI systems require humans to provide complete and clear descriptions of the objects they need to find. However, in real life, these instructions can often be vague or incomplete, making it difficult for the AI to understand exactly what is needed. This can lead to confusion and inefficiency during navigation.

What's the solution?

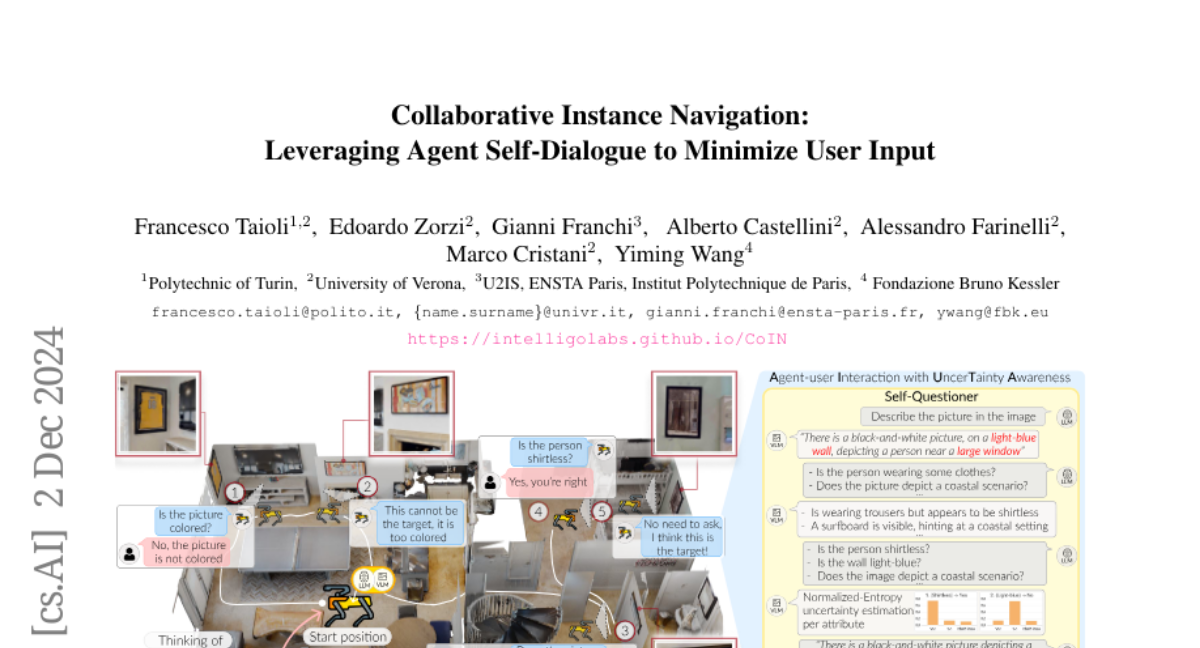

To solve this problem, the researchers developed CoIN, which enables dynamic interactions between the AI agent and the human user while navigating. They introduced a method called Agent-user Interaction with UncerTainty Awareness (AIUTA) that allows the AI to ask questions and clarify uncertainties as it goes along. The AI uses Vision Language Models (VLMs) to detect objects and a Self-Questioner model to generate questions for itself based on what it sees. This way, it can minimize the amount of input needed from the user while still effectively navigating to the target object.

Why it matters?

This research is important because it improves how AI systems can work alongside humans in navigation tasks. By allowing for more natural and open-ended conversations between the AI and the user, CoIN makes it easier for people to interact with technology in everyday situations, such as finding objects in a room or navigating through complex environments. This could lead to better user experiences in robotics, virtual assistants, and other applications where navigation is key.

Abstract

Existing embodied instance goal navigation tasks, driven by natural language, assume human users to provide complete and nuanced instance descriptions prior to the navigation, which can be impractical in the real world as human instructions might be brief and ambiguous. To bridge this gap, we propose a new task, Collaborative Instance Navigation (CoIN), with dynamic agent-human interaction during navigation to actively resolve uncertainties about the target instance in natural, template-free, open-ended dialogues. To address CoIN, we propose a novel method, Agent-user Interaction with UncerTainty Awareness (AIUTA), leveraging the perception capability of Vision Language Models (VLMs) and the capability of Large Language Models (LLMs). First, upon object detection, a Self-Questioner model initiates a self-dialogue to obtain a complete and accurate observation description, while a novel uncertainty estimation technique mitigates inaccurate VLM perception. Then, an Interaction Trigger module determines whether to ask a question to the user, continue or halt navigation, minimizing user input. For evaluation, we introduce CoIN-Bench, a benchmark supporting both real and simulated humans. AIUTA achieves competitive performance in instance navigation against state-of-the-art methods, demonstrating great flexibility in handling user inputs.