ColPali: Efficient Document Retrieval with Vision Language Models

Manuel Faysse, Hugues Sibille, Tony Wu, Gautier Viaud, Céline Hudelot, Pierre Colombo

2024-07-02

Summary

This paper talks about ColPali, a new system designed to improve how we retrieve information from documents by using advanced Vision Language Models (VLMs). It focuses on making document retrieval more efficient, especially for documents that contain a mix of text and visuals, like graphs and tables.

What's the problem?

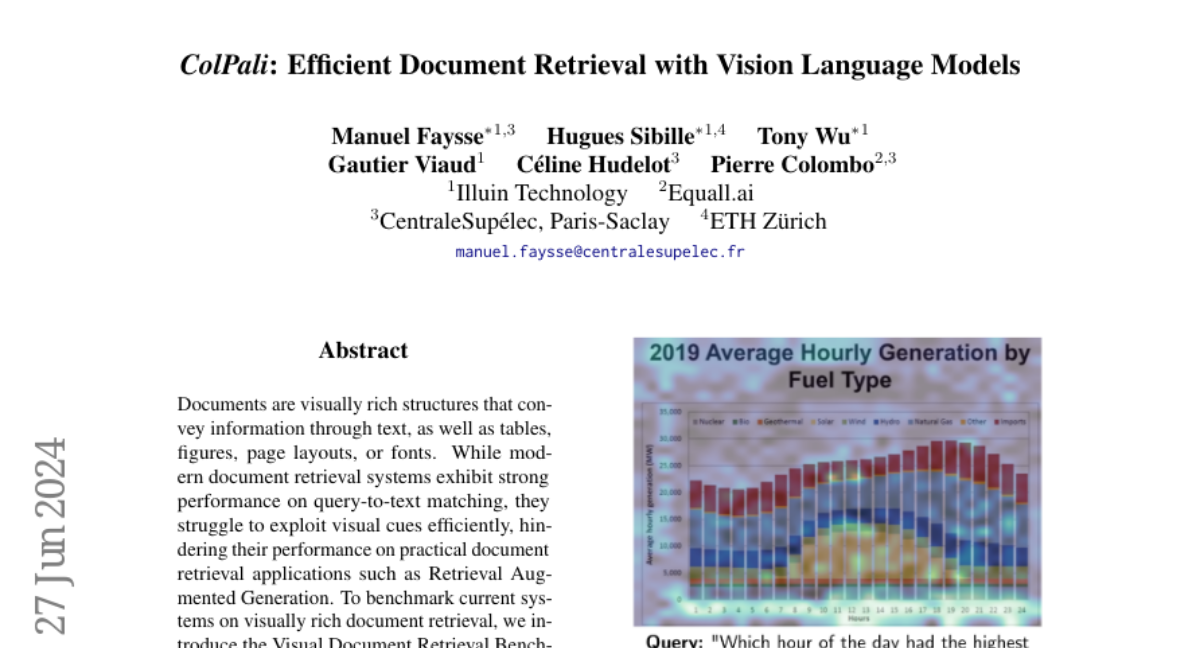

Current document retrieval systems are good at matching queries to text but often fail to effectively use visual information found in documents. This limitation makes it hard to retrieve useful information from visually complex documents, which is important for tasks like Retrieval Augmented Generation where accurate data extraction is crucial.

What's the solution?

To address this issue, the authors introduced ColPali, a model that can understand and retrieve information from document images directly. Instead of relying on traditional text extraction methods, ColPali uses VLMs to create high-quality representations of the entire document page. It employs a late interaction mechanism that allows the system to compare both visual and textual elements effectively, leading to better retrieval performance. This approach makes the process faster and more efficient than existing methods.

Why it matters?

This research is important because it enhances our ability to retrieve information from complex documents, which can improve various applications in fields like education, research, and business. By leveraging visual cues alongside text, ColPali can provide more accurate and relevant information quickly, making it easier for users to find what they need in documents that contain rich visual content.

Abstract

Documents are visually rich structures that convey information through text, as well as tables, figures, page layouts, or fonts. While modern document retrieval systems exhibit strong performance on query-to-text matching, they struggle to exploit visual cues efficiently, hindering their performance on practical document retrieval applications such as Retrieval Augmented Generation. To benchmark current systems on visually rich document retrieval, we introduce the Visual Document Retrieval Benchmark ViDoRe, composed of various page-level retrieving tasks spanning multiple domains, languages, and settings. The inherent shortcomings of modern systems motivate the introduction of a new retrieval model architecture, ColPali, which leverages the document understanding capabilities of recent Vision Language Models to produce high-quality contextualized embeddings solely from images of document pages. Combined with a late interaction matching mechanism, ColPali largely outperforms modern document retrieval pipelines while being drastically faster and end-to-end trainable.