CustomCrafter: Customized Video Generation with Preserving Motion and Concept Composition Abilities

Tao Wu, Yong Zhang, Xintao Wang, Xianpan Zhou, Guangcong Zheng, Zhongang Qi, Ying Shan, Xi Li

2024-08-26

Summary

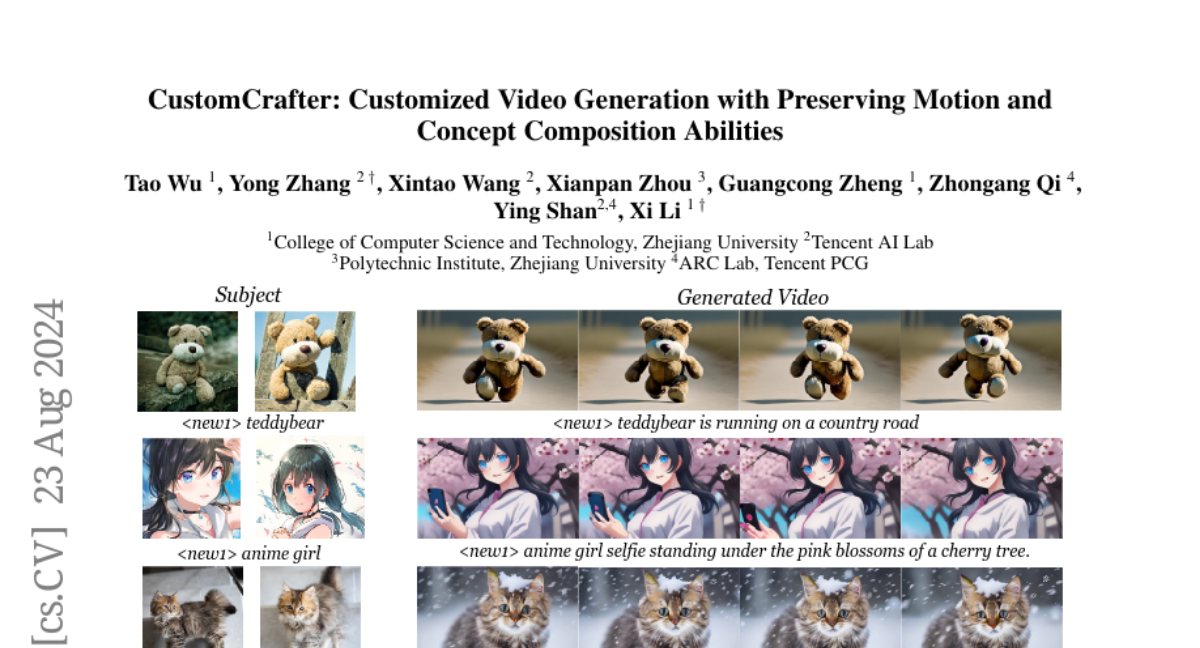

This paper introduces CustomCrafter, a method for generating customized videos from text prompts and reference images of a subject while preserving the underlying model's ability to generate motion and combine different concepts.

What's the problem?

To customize a video diffusion model for a specific subject, current methods fine-tune it on still images of that subject only. This fine-tuning disrupts the model's ability to generate realistic motion and to combine the subject with other concepts. To recover these abilities, some existing methods rely on an extra guiding video similar to the prompt, so users have to change the guiding video, and sometimes retrain the model, whenever they want a different motion, which is inconvenient.

What's the solution?

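The authors propose CustomCrafter, which preserves the model's motion generation and concept combination abilities without needing additional videos or further fine-tuning to recover them. They designed a plug-and-play module that updates only a few parameters in the video diffusion model (VDM), improving its ability to capture a new subject's appearance details and to combine that subject with other concepts. They also observed that VDMs tend to establish motion in the early stage of denoising and recover subject details in the later stage. Building on this, they introduced a Dynamic Weighted Video Sampling Strategy: the module's influence is reduced in the early denoising steps to preserve motion generation, then restored in the later steps to refine the subject's appearance.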
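The sampling strategy described above can be sketched as a per-step weight on the subject-learning module. This is a minimal illustration only: the function name `subject_module_weight`, the hard switch between stages, and every default value (`switch_fraction`, `early_weight`, `late_weight`) are assumptions made for the example, not settings taken from the paper.

```python
def subject_module_weight(step, total_steps, switch_fraction=0.5,
                          early_weight=0.25, late_weight=1.0):
    """Weight applied to the subject-learning module at one denoising step.

    step 0 is the first (noisiest) step. All default values are illustrative
    assumptions, not settings reported by the authors.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0 <= step < total_steps:
        raise ValueError("step must lie in [0, total_steps)")
    # Steps before the switch point count as the "early" (motion) stage.
    switch_step = int(switch_fraction * total_steps)
    return early_weight if step < switch_step else late_weight


# Example: a 10-step sampling run.
schedule = [subject_module_weight(s, 10) for s in range(10)]
```

With these assumed defaults, the first half of the steps uses the reduced weight and the second half uses the full weight. A smooth ramp between the two stages would be an equally plausible reading of "dynamic weighted"; the summary does not say which form the authors use.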

Why it matters?

This research matters because it simplifies customized video creation: users no longer need to supply guiding videos or retrain the model for each new motion. By keeping both motion quality and subject detail intact, CustomCrafter could benefit applications such as filmmaking, advertising, and content creation.

Abstract

Customized video generation aims to generate high-quality videos guided by text prompts and reference images of a subject. However, since subject learning fine-tunes the model only on static images, it disrupts the ability of video diffusion models (VDMs) to combine concepts and generate motion. To restore these abilities, some methods use an additional video similar to the prompt to fine-tune or guide the model. This requires frequently changing the guiding video, and even re-tuning the model, when generating different motions, which is very inconvenient for users. In this paper, we propose CustomCrafter, a novel framework that preserves the model's motion generation and concept combination abilities without requiring additional videos or fine-tuning for recovery. To preserve the concept combination ability, we design a plug-and-play module that updates a small number of parameters in VDMs, enhancing the model's ability to capture appearance details and to combine concepts for new subjects. For motion generation, we observe that VDMs tend to restore the motion of the video in the early stage of denoising, while focusing on recovering subject details in the later stage. Therefore, we propose the Dynamic Weighted Video Sampling Strategy. Exploiting the pluggability of our subject learning module, we reduce its impact on motion generation in the early stage of denoising, preserving the VDM's ability to generate motion. In the later stage of denoising, we restore the module to repair the appearance details of the specified subject, thereby ensuring the fidelity of the subject's appearance. Experimental results show that our method significantly improves on previous methods.
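To make the idea of a pluggable subject module concrete, here is a toy sketch. The abstract does not specify the module's form, so this assumes an additive update on top of a frozen base layer; the class `PluggableLayer`, its `weight` attribute, and the scalar stand-in functions are all hypothetical and chosen only for illustration.

```python
class PluggableLayer:
    """Frozen base layer plus a learned subject-specific update (assumed additive)."""

    def __init__(self, base_fn, delta_fn):
        self.base_fn = base_fn    # pretrained behaviour, left untouched
        self.delta_fn = delta_fn  # small learned update for the new subject
        self.weight = 1.0         # how strongly the update is applied

    def __call__(self, x):
        # weight = 0 recovers the original model exactly;
        # weight = 1 applies the full subject-specific update.
        return self.base_fn(x) + self.weight * self.delta_fn(x)


# Scalar stand-ins for real network layers (illustrative only).
layer = PluggableLayer(base_fn=lambda x: 2.0 * x, delta_fn=lambda x: 0.5 * x)

layer.weight = 0.0
base_only = layer(4.0)      # behaves like the original model

layer.weight = 1.0
fully_adapted = layer(4.0)  # full subject update applied
```

Because the update sits beside the base layer rather than overwriting it, its influence can be dialed down in early denoising steps and restored later without touching the pretrained weights, which is the property the sampling strategy relies on.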