Cycle3D: High-quality and Consistent Image-to-3D Generation via Generation-Reconstruction Cycle

Zhenyu Tang, Junwu Zhang, Xinhua Cheng, Wangbo Yu, Chaoran Feng, Yatian Pang, Bin Lin, Li Yuan

2024-07-30

Summary

This paper introduces Cycle3D, a new method for generating high-quality and consistent 3D models from 2D images. It alternates between generating images and reconstructing 3D content in a cycle, so that the final result has both detailed texture and consistency across views.

What's the problem?

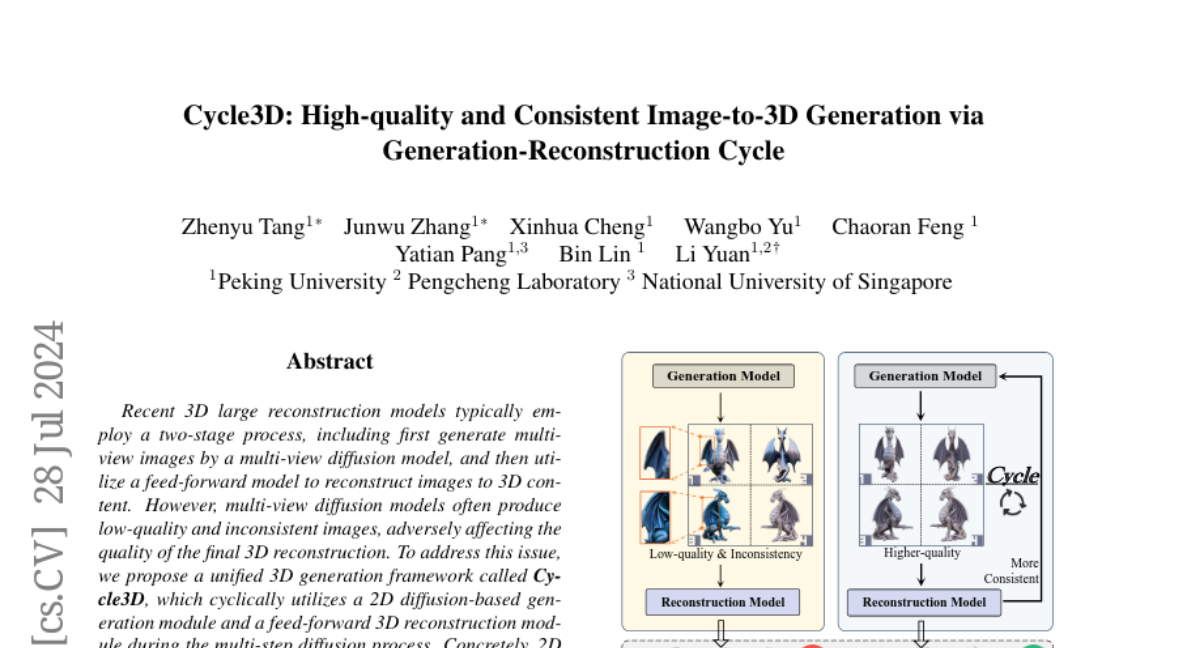

Most current methods for creating 3D models from images involve two main steps: first generating multiple views of the object shown in an image, and then reconstructing a 3D model from those views. However, the generated views are often low-quality and inconsistent with one another, which lowers the quality of the final 3D model and makes it difficult to create accurate representations of objects.

What's the solution?

To solve this problem, the authors developed Cycle3D, a two-part system in which a 2D diffusion model generates high-quality images and a 3D reconstruction model ensures that these images are consistent when viewed from different angles. The two parts alternate in a cycle during the multi-step diffusion process: the diffusion model generates images, and the reconstruction model enforces consistency across views. The 2D diffusion model also gives better control over the generated content and carries information from the reference view to unseen views, which improves the diversity and texture consistency of the 3D models.
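
The cycle described above can be illustrated with a small toy sketch. This is not the authors' implementation: the function names (`toy_denoise`, `toy_reconstruct`, `toy_render`, `generation_reconstruction_cycle`) are hypothetical, the "views" are plain vectors rather than images, and simple arithmetic stands in for the learned diffusion and reconstruction networks. It only shows the control flow, assuming each step denoises the views independently, fuses them into one shared 3D representation, and renders that representation back to every view.

```python
import numpy as np


def toy_denoise(noisy_views, rng):
    # Stand-in for a 2D diffusion denoiser (assumption, not the real model):
    # each view is handled independently, so the estimates disagree slightly.
    return 0.5 * noisy_views + rng.normal(scale=0.05, size=noisy_views.shape)


def toy_reconstruct(views):
    # Stand-in for a feed-forward 3D reconstruction model (assumption):
    # fuse all views into one shared representation, here simply their mean.
    return views.mean(axis=0)


def toy_render(shape3d, num_views):
    # Render the shared representation back to every view. In this toy all
    # renders are identical, so the views are consistent by construction.
    return np.repeat(shape3d[None, :], num_views, axis=0)


def generation_reconstruction_cycle(num_views=4, dim=8, steps=5, seed=0):
    rng = np.random.default_rng(seed)
    views = rng.normal(size=(num_views, dim))  # start from pure noise
    # Decreasing noise levels that end at exactly 0 on the last step.
    noise_levels = np.linspace(1.0, 0.0, steps + 1)[1:]
    for sigma in noise_levels:
        clean_estimate = toy_denoise(views, rng)      # generation step
        shape3d = toy_reconstruct(clean_estimate)     # reconstruction step
        consistent = toy_render(shape3d, num_views)   # consistent views
        # Re-noise the consistent views to the next (lower) noise level.
        views = consistent + sigma * rng.normal(size=consistent.shape)
    return views, shape3d


views, shape3d = generation_reconstruction_cycle()
print(views.shape)
```

In this toy, the per-view denoiser introduces disagreement between views at every step, and the reconstruct-then-render step removes it again, which mirrors the division of labor the summary describes: the generation module supplies detail, and the reconstruction module supplies consistency.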

Why it matters?

This research is important because it significantly improves how we can create realistic 3D models from simple 2D images. By ensuring high quality and consistency in the generated models, Cycle3D can be applied in various fields such as gaming, virtual reality, and design, making it easier to create lifelike representations of objects for different applications.

Abstract

Recent 3D large reconstruction models typically employ a two-stage process: first generating multi-view images with a multi-view diffusion model, and then using a feed-forward model to reconstruct 3D content from those images. However, multi-view diffusion models often produce low-quality and inconsistent images, adversely affecting the quality of the final 3D reconstruction. To address this issue, we propose a unified 3D generation framework called Cycle3D, which cyclically utilizes a 2D diffusion-based generation module and a feed-forward 3D reconstruction module during the multi-step diffusion process. Concretely, the 2D diffusion model is applied to generate high-quality texture, and the reconstruction model guarantees multi-view consistency. Moreover, the 2D diffusion model can further control the generated content and inject reference-view information for unseen views, thereby enhancing the diversity and texture consistency of 3D generation during the denoising process. Extensive experiments demonstrate the superior ability of our method to create 3D content with high quality and consistency compared with state-of-the-art baselines.