DepthCrafter: Generating Consistent Long Depth Sequences for Open-world Videos

Wenbo Hu, Xiangjun Gao, Xiaoyu Li, Sijie Zhao, Xiaodong Cun, Yong Zhang, Long Quan, Ying Shan

2024-09-04

Summary

This paper presents DepthCrafter, a method for generating accurate, temporally consistent depth maps for long open-world videos. Such depth is important for understanding the 3D structure of scenes.

What's the problem?

Estimating depth in videos is difficult because open-world videos vary widely in content, motion, camera movement, and length. Many existing approaches rely on supplementary information, such as camera poses or optical flow, which can be hard to obtain. This makes it challenging to produce accurate, temporally consistent depth maps for open-world videos.

What's the solution?

DepthCrafter addresses this by generating depth sequences directly from the input video, without any additional information. It turns a pre-trained image-to-video diffusion model into a video-to-depth model through a three-stage training strategy on compiled paired video-depth datasets, drawing precise depth details and rich content diversity from both realistic and synthetic data. The model can generate depth sequences of variable length in a single pass, up to 110 frames. Longer videos are processed in segments that are then stitched together seamlessly, keeping the depth consistent across the whole video.
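The segment-wise idea for long videos can be sketched as follows. This is a minimal illustration under stated assumptions, not DepthCrafter's implementation: only the 110-frame limit comes from the paper, while `estimate_segment` is a hypothetical stand-in for the video-to-depth model, and the helper names, the 25-frame overlap, and the linear cross-fade used for stitching are illustrative choices. The sketch also assumes neighbouring segments already agree in depth scale; it makes no attempt to reconcile mismatches.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: one flattened depth map per frame.
Frame = List[float]


def segment_windows(num_frames: int, seg_len: int = 110, overlap: int = 25) -> List[Tuple[int, int]]:
    """Split [0, num_frames) into [start, end) windows of at most seg_len frames.

    Consecutive windows share exactly `overlap` frames so they can be blended.
    """
    if not 0 <= overlap < seg_len:
        raise ValueError("require 0 <= overlap < seg_len")
    stride = seg_len - overlap
    windows: List[Tuple[int, int]] = []
    start = 0
    while True:
        end = min(start + seg_len, num_frames)
        windows.append((start, end))
        if end >= num_frames:
            break
        start += stride
    return windows


def stitch(segments: Sequence[Tuple[int, int, List[Frame]]], num_frames: int) -> List[Frame]:
    """Merge per-segment depth predictions into one sequence.

    Inside each overlap, the weight of the newer segment ramps linearly
    toward 1, so there is no hard seam between segments (assumed blending rule).
    """
    out: List[Frame] = [[] for _ in range(num_frames)]
    prev_end = 0
    for start, end, depths in segments:
        n_overlap = max(prev_end - start, 0)
        for i, frame in enumerate(depths):
            t = start + i
            if i < n_overlap:
                w = (i + 1) / (n_overlap + 1)  # weight of the current segment
                out[t] = [(1 - w) * a + w * b for a, b in zip(out[t], frame)]
            else:
                out[t] = list(frame)
        prev_end = end
    return out


def estimate_long_video(
    frames: Sequence[Frame],
    estimate_segment: Callable[[Sequence[Frame]], List[Frame]],
    seg_len: int = 110,
    overlap: int = 25,
) -> List[Frame]:
    """Run a fixed-length depth estimator over a video of any length."""
    windows = segment_windows(len(frames), seg_len, overlap)
    segments = [(s, e, estimate_segment(frames[s:e])) for s, e in windows]
    return stitch(segments, len(frames))


if __name__ == "__main__":
    # A 300-frame video becomes four overlapping windows.
    print(segment_windows(300))  # [(0, 110), (85, 195), (170, 280), (255, 300)]
```

Each window stays within the model's 110-frame limit, and every pair of consecutive windows shares the same number of frames, so the blend in `stitch` always has a well-defined overlap to work with.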

Why does it matter?

This research matters because it improves our ability to analyze and interpret video content in three dimensions. Accurate, temporally consistent depth supports applications such as depth-based visual effects for film, conditional video generation, and more immersive virtual reality experiences.

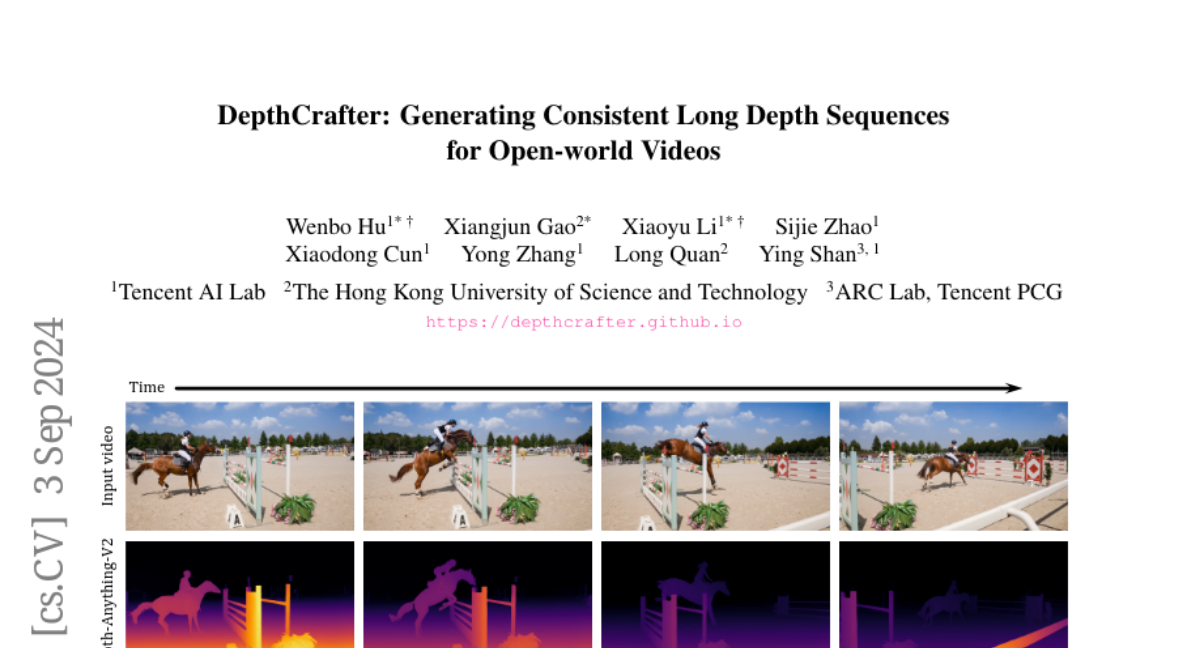

Abstract

Despite significant advancements in monocular depth estimation for static images, estimating video depth in the open world remains challenging, since open-world videos are extremely diverse in content, motion, camera movement, and length. We present DepthCrafter, an innovative method for generating temporally consistent long depth sequences with intricate details for open-world videos, without requiring any supplementary information such as camera poses or optical flow. DepthCrafter achieves generalization ability to open-world videos by training a video-to-depth model from a pre-trained image-to-video diffusion model, through our meticulously designed three-stage training strategy with the compiled paired video-depth datasets. Our training approach enables the model to generate depth sequences with variable lengths at one time, up to 110 frames, and harvest both precise depth details and rich content diversity from realistic and synthetic datasets. We also propose an inference strategy that processes extremely long videos through segment-wise estimation and seamless stitching. Comprehensive evaluations on multiple datasets reveal that DepthCrafter achieves state-of-the-art performance in open-world video depth estimation under zero-shot settings. Furthermore, DepthCrafter facilitates various downstream applications, including depth-based visual effects and conditional video generation.