DiET-GS: Diffusion Prior and Event Stream-Assisted Motion Deblurring 3D Gaussian Splatting

Seungjun Lee, Gim Hee Lee

2025-04-02

Summary

This paper explores how to build sharper 3D models from motion-blurred images by combining event cameras with diffusion-based AI models.

What's the problem?

It's hard to create clear 3D models from videos that have motion blur.

What's the solution?

The researchers developed DiET-GS, a 3D Gaussian Splatting method that combines data from event-based cameras (which record brightness changes with microsecond timing and so do not suffer from motion blur) with a type of AI called diffusion models to sharpen the results.

Why it matters?

This work matters because it can lead to better 3D reconstructions from videos, which could be used in areas like robotics and virtual reality.

Abstract

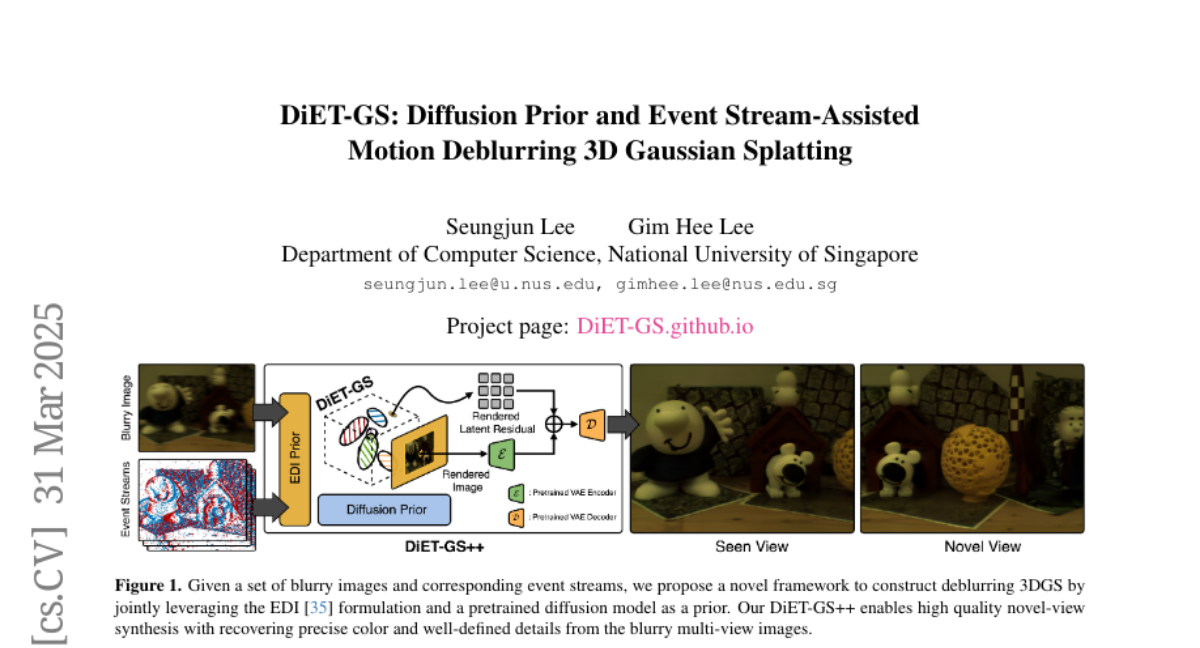

Reconstructing sharp 3D representations from blurry multi-view images is a long-standing problem in computer vision. Recent works attempt to achieve high-quality novel view synthesis from motion-blurred images by leveraging event-based cameras, which offer high dynamic range and microsecond temporal resolution. However, they often reach sub-optimal visual quality, either restoring inaccurate colors or losing fine-grained details. In this paper, we present DiET-GS, a diffusion prior and event stream-assisted motion deblurring 3DGS. Our framework effectively leverages both blur-free event streams and a diffusion prior in a two-stage training strategy. Specifically, we introduce a novel framework that constrains 3DGS with the event double integral, achieving both accurate color and well-defined details. Additionally, we propose a simple technique that leverages the diffusion prior to further enhance edge details. Qualitative and quantitative results on both synthetic and real-world data demonstrate that DiET-GS produces novel views of significantly better quality than the existing baselines. Our project page is https://diet-gs.github.io
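The "event double integral" mentioned above refers to the standard event-based double integral (EDI) relation between a blurry frame and a sharp latent frame. Below is a minimal per-pixel sketch of that generic relation, not DiET-GS's actual loss, which the abstract does not spell out. It assumes a constant, known contrast threshold `c` and events given as `(timestamp, polarity)` pairs; the names `integrated_events`, `edi_ratio`, `latent_from_blur`, and `t_ref` are hypothetical. The first integral sums event polarities to get the log-brightness change E(t) relative to a reference time; the second averages exp(c·E(t)) over the exposure window, so that blurry = latent(t_ref) · (1/T)∫exp(c·E(t))dt.

```python
import math
from typing import List, Tuple

# Hypothetical event format for one pixel: (timestamp in seconds, polarity +1 or -1).
Event = Tuple[float, int]


def integrated_events(events: List[Event], t_ref: float, t: float) -> float:
    """First integral: signed event count E(t) from t_ref to t.

    Under the assumed event model, log L(t) - log L(t_ref) = c * E(t).
    For t < t_ref the events in (t, t_ref] are subtracted instead.
    """
    if t >= t_ref:
        return float(sum(p for ts, p in events if t_ref < ts <= t))
    return -float(sum(p for ts, p in events if t < ts <= t_ref))


def edi_ratio(events: List[Event], t_ref: float, t_start: float,
              t_end: float, c: float, n_steps: int = 1000) -> float:
    """Second integral: (1/T) * integral of exp(c * E(t)) over the exposure.

    Approximated with the midpoint rule over n_steps sub-intervals.
    """
    T = t_end - t_start
    dt = T / n_steps
    total = 0.0
    for k in range(n_steps):
        t = t_start + (k + 0.5) * dt
        total += math.exp(c * integrated_events(events, t_ref, t)) * dt
    return total / T


def latent_from_blur(blurry: float, events: List[Event], t_ref: float,
                     t_start: float, t_end: float, c: float) -> float:
    """Invert blurry = L(t_ref) * edi_ratio(...) to recover the sharp value."""
    return blurry / edi_ratio(events, t_ref, t_start, t_end, c)


if __name__ == "__main__":
    c = math.log(2.0)  # assumed threshold: one +1 event doubles brightness
    events = [(0.75, 1)]  # pixel brightens once, late in the exposure
    # Brightness is 1x for 75% of the exposure and 2x for 25%, so the ratio is 1.25.
    print(edi_ratio(events, 0.5, 0.0, 1.0, c))
    print(latent_from_blur(2.5, events, 0.5, 0.0, 1.0, c))
```

Because E(t) is piecewise constant between events, the exposure integral could also be computed exactly segment by segment; the midpoint rule is used here only to keep the sketch short.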