DINO-X: A Unified Vision Model for Open-World Object Detection and Understanding

Tianhe Ren, Yihao Chen, Qing Jiang, Zhaoyang Zeng, Yuda Xiong, Wenlong Liu, Zhengyu Ma, Junyi Shen, Yuan Gao, Xiaoke Jiang, Xingyu Chen, Zhuheng Song, Yuhong Zhang, Hongjie Huang, Han Gao, Shilong Liu, Hao Zhang, Feng Li, Kent Yu, Lei Zhang

2024-11-22

Summary

This paper introduces DINO-X, a new vision model designed to improve the detection and understanding of objects in images, especially in open-world scenarios where not all objects are known beforehand.

What's the problem?

Traditional object detection models often struggle with recognizing and classifying objects that they haven't been explicitly trained on, especially those that occur less frequently (known as long-tailed objects). Additionally, these models usually require specific prompts from users to identify objects, which can limit their flexibility and usability.

What's the solution?

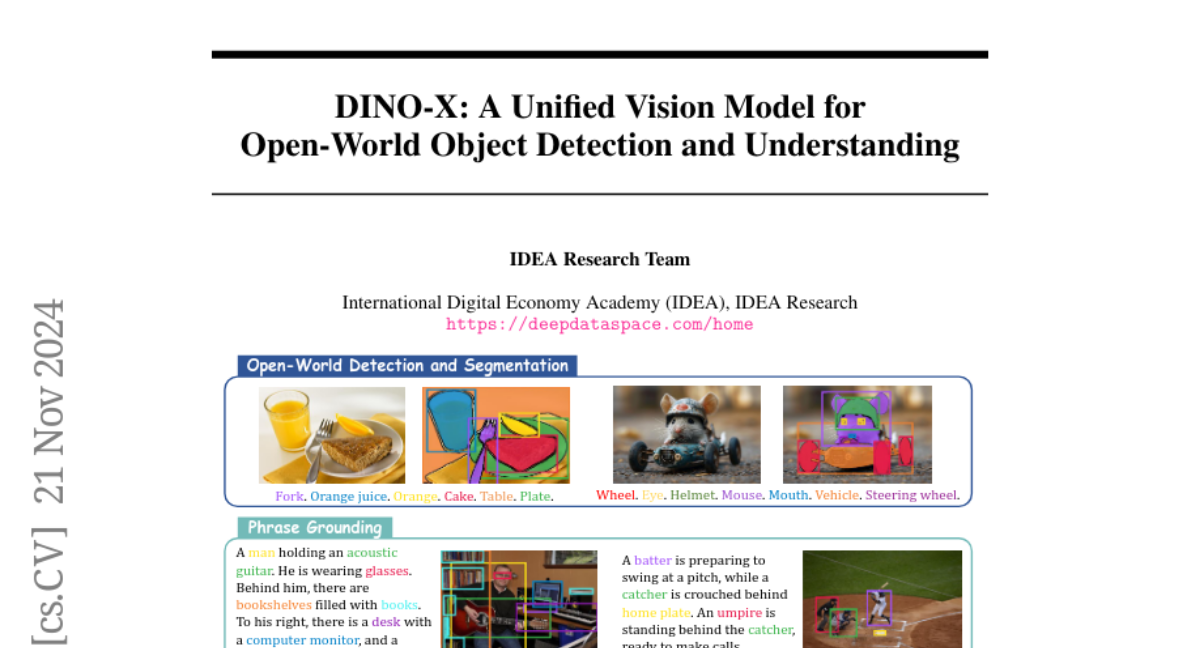

DINO-X addresses these issues by using a unified architecture that allows for various input types, including text and visual prompts, as well as a universal object prompt that enables detection without any user input. The model is trained on a massive dataset called Grounding-100M, which contains over 100 million high-quality samples to enhance its ability to recognize a wide range of objects. DINO-X can perform multiple tasks simultaneously, such as detecting, segmenting, and estimating the pose of objects. It has shown impressive results in benchmark tests, outperforming previous models in recognizing rare objects.

Why it matters?

This research is significant because it enhances the capabilities of AI in understanding complex visual information in real-world applications. By improving the detection of long-tailed objects and allowing for prompt-free detection, DINO-X can be used in various fields like robotics, autonomous vehicles, and security systems, making AI more effective and versatile in everyday scenarios.

Abstract

In this paper, we introduce DINO-X, which is a unified object-centric vision model developed by IDEA Research with the best open-world object detection performance to date. DINO-X employs the same Transformer-based encoder-decoder architecture as Grounding DINO 1.5 to pursue an object-level representation for open-world object understanding. To make long-tailed object detection easy, DINO-X extends its input options to support text prompt, visual prompt, and customized prompt. With such flexible prompt options, we develop a universal object prompt to support prompt-free open-world detection, making it possible to detect anything in an image without requiring users to provide any prompt. To enhance the model's core grounding capability, we have constructed a large-scale dataset with over 100 million high-quality grounding samples, referred to as Grounding-100M, for advancing the model's open-vocabulary detection performance. Pre-training on such a large-scale grounding dataset leads to a foundational object-level representation, which enables DINO-X to integrate multiple perception heads to simultaneously support multiple object perception and understanding tasks, including detection, segmentation, pose estimation, object captioning, object-based QA, etc. Experimental results demonstrate the superior performance of DINO-X. Specifically, the DINO-X Pro model achieves 56.0 AP, 59.8 AP, and 52.4 AP on the COCO, LVIS-minival, and LVIS-val zero-shot object detection benchmarks, respectively. Notably, it scores 63.3 AP and 56.5 AP on the rare classes of LVIS-minival and LVIS-val benchmarks, both improving the previous SOTA performance by 5.8 AP. Such a result underscores its significantly improved capacity for recognizing long-tailed objects.