DreamBench++: A Human-Aligned Benchmark for Personalized Image Generation

Yuang Peng, Yuxin Cui, Haomiao Tang, Zekun Qi, Runpei Dong, Jing Bai, Chunrui Han, Zheng Ge, Xiangyu Zhang, Shu-Tao Xia

2024-06-25

Summary

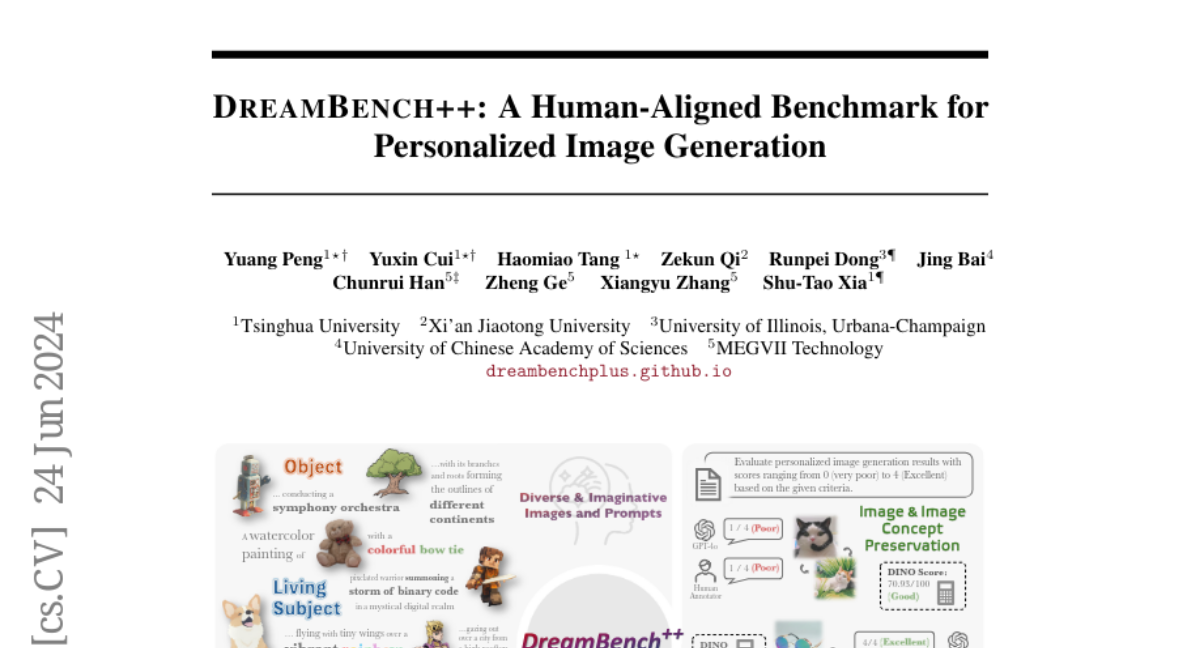

This paper introduces DreamBench++, a new benchmark designed to evaluate how well AI models can generate personalized images. It focuses on aligning the evaluation process with human preferences to improve the quality of generated content.

What's the problem?

Current methods for evaluating personalized image generation often fall short. Automated evaluations may not match human opinions, while human evaluations can be slow and costly. This makes it hard to determine how well these models truly perform in generating images that people find appealing.

What's the solution?

The authors created DreamBench++ to address these issues by using advanced multimodal GPT models to automate the evaluation process. They designed specific prompts that help ensure the evaluations are aligned with human preferences. Additionally, they built a diverse dataset that includes various images and prompts, allowing for a more comprehensive assessment of different generative models. By testing seven modern models, they showed that DreamBench++ provides a better understanding of how well these models meet human expectations.

Why it matters?

This research is significant because it aims to improve the way we assess AI-generated images, making sure they align more closely with what people actually want. By developing a more effective benchmark, it can help drive advancements in personalized image generation, leading to better tools for creative work and everyday applications.

Abstract

Personalized image generation holds great promise in assisting humans in everyday work and life due to its impressive function in creatively generating personalized content. However, current evaluations either are automated but misalign with humans or require human evaluations that are time-consuming and expensive. In this work, we present DreamBench++, a human-aligned benchmark automated by advanced multimodal GPT models. Specifically, we systematically design the prompts to let GPT be both human-aligned and self-aligned, empowered with task reinforcement. Further, we construct a comprehensive dataset comprising diverse images and prompts. By benchmarking 7 modern generative models, we demonstrate that DreamBench++ results in significantly more human-aligned evaluation, helping boost the community with innovative findings.