Drowning in Documents: Consequences of Scaling Reranker Inference

Mathew Jacob, Erik Lindgren, Matei Zaharia, Michael Carbin, Omar Khattab, Andrew Drozdov

2024-11-19

Summary

This paper discusses the challenges and findings related to rerankers in information retrieval systems, revealing that increasing the number of documents scored by rerankers can actually lead to worse results.

What's the problem?

Rerankers are tools used to improve the quality of document retrieval by re-scoring documents that have been initially retrieved. While they are supposed to enhance performance, there is a common assumption that scoring more documents will always lead to better results. However, this paper investigates whether that assumption is true, especially as the number of documents increases.

What's the solution?

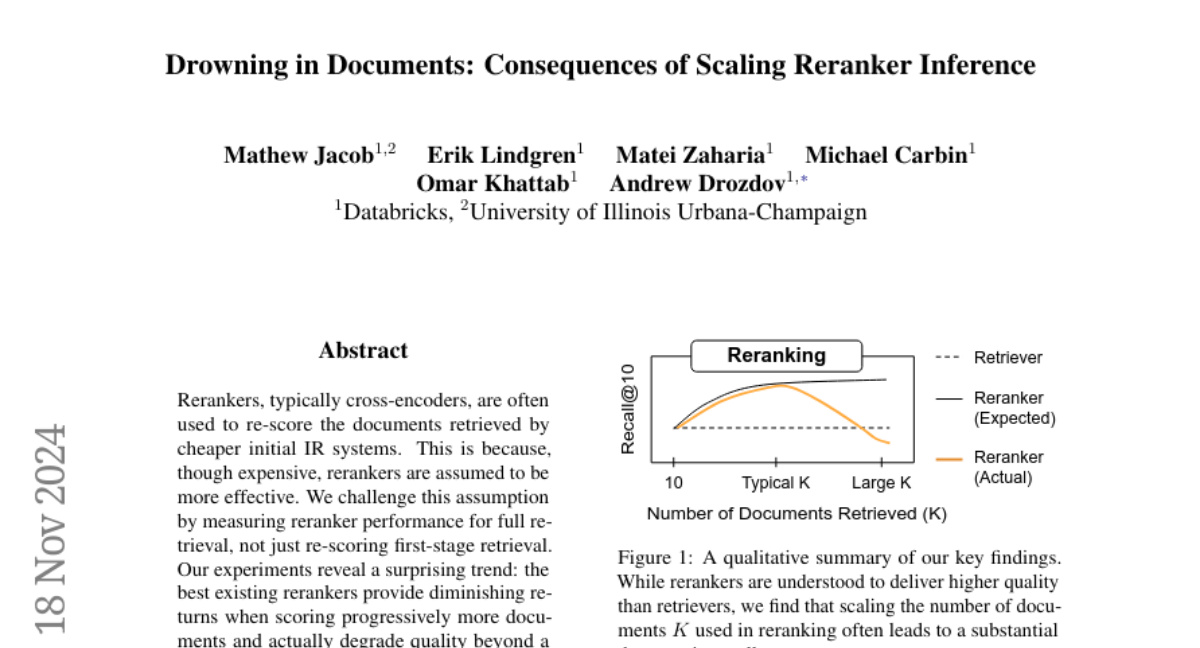

The authors conducted experiments to measure how well rerankers perform when they are asked to score more documents. They found that the best existing rerankers often provide diminishing returns, meaning that after a certain point, adding more documents leads to poorer quality scores. In some cases, rerankers assigned high scores to irrelevant documents, which indicates a significant flaw in their effectiveness when scaling up.

Why it matters?

This research is important because it challenges the widely held belief that more scoring always equals better results in information retrieval. By highlighting the limitations of current reranking methods, the authors encourage further research into improving these systems, which could lead to better search engines and information retrieval technologies.

Abstract

Rerankers, typically cross-encoders, are often used to re-score the documents retrieved by cheaper initial IR systems. This is because, though expensive, rerankers are assumed to be more effective. We challenge this assumption by measuring reranker performance for full retrieval, not just re-scoring first-stage retrieval. Our experiments reveal a surprising trend: the best existing rerankers provide diminishing returns when scoring progressively more documents and actually degrade quality beyond a certain limit. In fact, in this setting, rerankers can frequently assign high scores to documents with no lexical or semantic overlap with the query. We hope that our findings will spur future research to improve reranking.