DynamicCity: Large-Scale LiDAR Generation from Dynamic Scenes

Hengwei Bian, Lingdong Kong, Haozhe Xie, Liang Pan, Yu Qiao, Ziwei Liu

2024-10-24

Summary

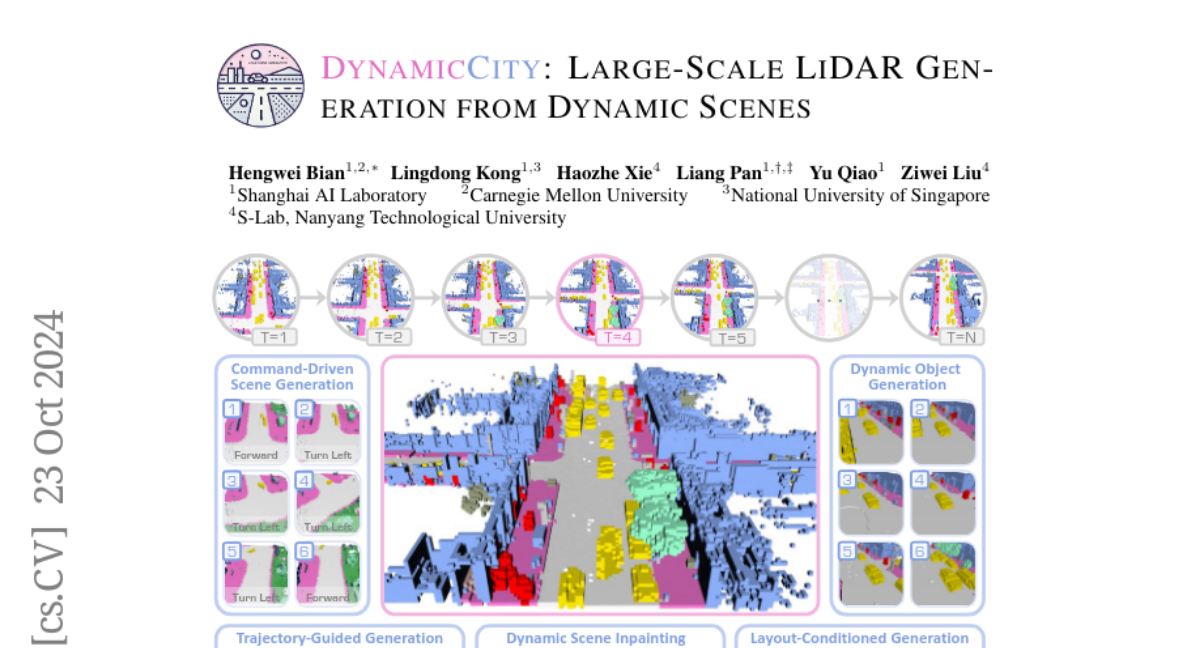

This paper introduces DynamicCity, a new framework for generating large-scale LiDAR scenes that captures the dynamic nature of real-world environments, improving how we create 3D maps from moving scenes.

What's the problem?

Most current methods for generating LiDAR scenes focus only on static, single-frame scenes, so they cannot represent how real-world driving environments change over time. This limitation makes it difficult to create accurate and useful 3D maps for applications like autonomous driving.

What's the solution?

DynamicCity uses a two-part system to improve LiDAR generation. First, a Variational Autoencoder (VAE) compresses each dynamic scene into a compact 4D representation called a HexPlane, a set of six 2D feature maps. Then, a diffusion model generates new HexPlanes, from which high-quality LiDAR scenes are reconstructed. This framework captures changes over time and improves both the efficiency and accuracy of the generated scenes.
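To make the compact 4D representation concrete: the abstract describes it as a HexPlane built from six 2D feature maps. The sketch below is not the paper's Projection Module. It shows only the naive averaging baseline that the abstract contrasts against, applied to a single-channel array. The axis order `(T, X, Y, Z)`, the array sizes, and the function name `naive_hexplane` are assumptions chosen for illustration.

```python
import itertools

import numpy as np

AXES = ("t", "x", "y", "z")  # assumed axis order of the 4D volume


def naive_hexplane(volume):
    """Collapse a 4D array (T, X, Y, Z) into six 2D planes.

    Each plane keeps one pair of axes and averages over the other two.
    This is the naive baseline, not a learned projection.
    """
    planes = {}
    for i, j in itertools.combinations(range(4), 2):
        dropped = tuple(k for k in range(4) if k not in (i, j))
        planes[AXES[i] + AXES[j]] = volume.mean(axis=dropped)
    return planes


rng = np.random.default_rng(0)
volume = rng.random((4, 16, 16, 8))  # hypothetical sizes: T=4, X=Y=16, Z=8
planes = naive_hexplane(volume)

for name, plane in planes.items():
    print(name, plane.shape)

# Compare how many values the dense volume and the six planes store.
print("dense:", volume.size, "planes:", sum(p.size for p in planes.values()))
```

With these sizes the six planes hold far fewer values than the dense volume, which is the sense in which the representation is compact. Averaging discards information, which is presumably why a learned projection fits better.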

Why it matters?

This research is important because it enhances our ability to create detailed and accurate 3D maps from dynamic environments. Such improvements can significantly benefit fields like autonomous driving and urban planning, where understanding real-time changes in the environment is crucial.

Abstract

LiDAR scene generation has been developing rapidly. However, existing methods primarily focus on generating static and single-frame scenes, overlooking the inherently dynamic nature of real-world driving environments. In this work, we introduce DynamicCity, a novel 4D LiDAR generation framework capable of generating large-scale, high-quality LiDAR scenes that capture the temporal evolution of dynamic environments. DynamicCity mainly consists of two key models. 1) A VAE model for learning HexPlane as the compact 4D representation. Instead of using naive averaging operations, DynamicCity employs a novel Projection Module to effectively compress 4D LiDAR features into six 2D feature maps for HexPlane construction, which significantly enhances HexPlane fitting quality (up to 12.56 mIoU gain). Furthermore, we utilize an Expansion & Squeeze Strategy to reconstruct 3D feature volumes in parallel, which improves both network training efficiency and reconstruction accuracy compared with naively querying each 3D point (up to 7.05 mIoU gain, 2.06x training speedup, and 70.84% memory reduction). 2) A DiT-based diffusion model for HexPlane generation. To make HexPlane feasible for DiT generation, a Padded Rollout Operation is proposed to reorganize all six feature planes of the HexPlane as a square 2D feature map. In particular, various conditions can be introduced in the diffusion or sampling process, supporting versatile 4D generation applications, such as trajectory- and command-driven generation, inpainting, and layout-conditioned generation. Extensive experiments on the CarlaSC and Waymo datasets demonstrate that DynamicCity significantly outperforms existing state-of-the-art 4D LiDAR generation methods across multiple metrics. The code will be released to facilitate future research.
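The idea of reorganizing six planes into one square map can be illustrated with a small sketch. The abstract does not specify the layout, so the block arrangement below is an assumption, as are the function names `padded_rollout` and `unroll`, the single-channel planes, and the requirement that X equals Y. It shows only that six planes of different shapes can be tiled into one zero-padded square and recovered without loss.

```python
import numpy as np


def padded_rollout(planes):
    """Tile six planes into one square, zero-padded 2D array.

    Assumed layout (rows x columns), not taken from the paper:
        [ xy | xz | tx^T ]
        [ yz^T | 0 | tz^T ]
        [ ty | 0 | 0 ]
    """
    X, Y = planes["xy"].shape
    Z = planes["xz"].shape[1]
    T = planes["tx"].shape[0]
    if X != Y:
        raise ValueError("this sketch assumes X == Y so the canvas is square")
    side = X + Z + T
    canvas = np.zeros((side, side), dtype=planes["xy"].dtype)
    canvas[:X, :Y] = planes["xy"]
    canvas[:X, Y:Y + Z] = planes["xz"]
    canvas[:X, Y + Z:] = planes["tx"].T
    canvas[X:X + Z, :Y] = planes["yz"].T
    canvas[X:X + Z, Y + Z:] = planes["tz"].T
    canvas[X + Z:, :Y] = planes["ty"]
    return canvas


def unroll(canvas, X, Z, T):
    """Invert padded_rollout by slicing each plane back out."""
    Y = X
    return {
        "xy": canvas[:X, :Y],
        "xz": canvas[:X, Y:Y + Z],
        "tx": canvas[:X, Y + Z:].T,
        "yz": canvas[X:X + Z, :Y].T,
        "tz": canvas[X:X + Z, Y + Z:].T,
        "ty": canvas[X + Z:, :Y],
    }


# Hypothetical plane sizes: T=4, X=Y=16, Z=8.
T, X, Z = 4, 16, 8
rng = np.random.default_rng(0)
shapes = {"xy": (X, X), "xz": (X, Z), "yz": (X, Z),
          "tx": (T, X), "ty": (T, X), "tz": (T, Z)}
planes = {name: rng.random(shape) for name, shape in shapes.items()}

canvas = padded_rollout(planes)
recovered = unroll(canvas, X, Z, T)
print(canvas.shape)
print(all(np.array_equal(planes[k], recovered[k]) for k in planes))
```

A single square map is convenient because an image-style transformer can split it into patches like any other 2D input. The cost is the padded area, which here is the three zero blocks in the layout.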