Eagle: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders

Min Shi, Fuxiao Liu, Shihao Wang, Shijia Liao, Subhashree Radhakrishnan, De-An Huang, Hongxu Yin, Karan Sapra, Yaser Yacoob, Humphrey Shi, Bryan Catanzaro, Andrew Tao, Jan Kautz, Zhiding Yu, Guilin Liu

2024-08-29

Summary

This paper introduces Eagle, a family of multimodal large language models (MLLMs) that combines several different vision encoders to better interpret complex visual information.

What's the problem?

Interpreting visual information accurately is important for MLLMs: stronger visual perception reduces hallucinations and helps on resolution-sensitive tasks such as optical character recognition and document analysis. Many models rely on a single type of vision encoder, which limits how well they handle different kinds of images. Some recent MLLMs do mix several vision encoders, but systematic comparisons and detailed ablation studies of how to select and integrate those encoders are lacking.

What's the solution?

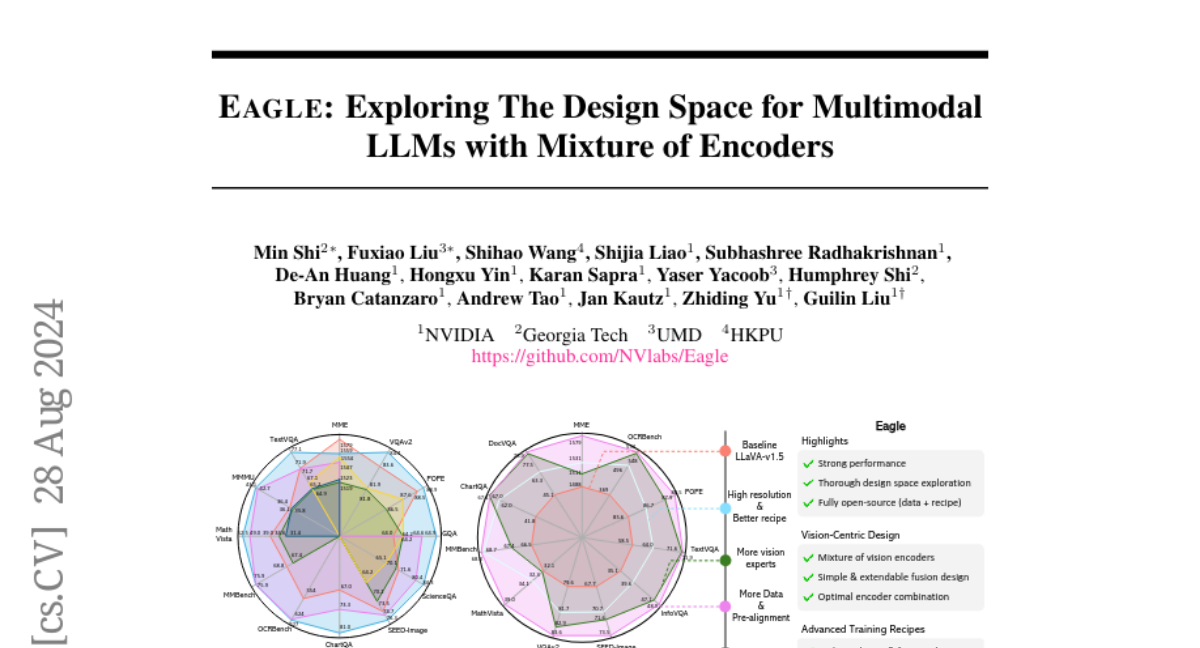

The authors systematically explore the design space of MLLMs built on a mixture of vision encoders and resolutions. They find that simply concatenating the visual tokens from a set of complementary encoders is as effective as more complex mixing architectures or strategies. They also introduce a technique called Pre-Alignment, which bridges the gap between vision-focused encoders and language tokens and makes the model more coherent. In their experiments, the resulting Eagle models surpass other leading open-source models on major MLLM benchmarks.
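To make the idea of combining encoder outputs by concatenation concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the function name `concat_visual_tokens`, the toy encoder outputs, and the choice to concatenate along the feature dimension of each token are all assumptions, and the sketch presumes every encoder's output has already been brought to the same number of tokens.

```python
from typing import List

Token = List[float]  # one visual token = one feature vector


def concat_visual_tokens(encoder_outputs: List[List[Token]]) -> List[Token]:
    """Fuse per-encoder token lists by joining features position by position.

    Assumption: each encoder output has the same number of tokens
    (any resampling needed to achieve that is not shown here).
    """
    if not encoder_outputs:
        raise ValueError("need at least one encoder output")
    num_tokens = len(encoder_outputs[0])
    if any(len(out) != num_tokens for out in encoder_outputs):
        raise ValueError("all encoders must yield the same number of tokens")

    fused: List[Token] = []
    for position in range(num_tokens):
        merged: Token = []
        for out in encoder_outputs:
            # Append this encoder's features for the current token position.
            merged.extend(out[position])
        fused.append(merged)
    return fused


# Toy outputs from two hypothetical encoders: 3 tokens each, widths 2 and 4.
encoder_a = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
encoder_b = [[1.0, 1.1, 1.2, 1.3], [2.0, 2.1, 2.2, 2.3], [3.0, 3.1, 3.2, 3.3]]

fused_tokens = concat_visual_tokens([encoder_a, encoder_b])
print(len(fused_tokens), len(fused_tokens[0]))  # 3 tokens, each of width 6
```

With this kind of fusion the number of visual tokens stays fixed as encoders are added; only the width of each token grows, so a single projection layer could map the fused tokens into the language model's embedding space.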

Why does it matter?

This research is significant because it improves how AI models understand and interpret images alongside text. Stronger visual perception benefits tasks that depend on fine detail, such as optical character recognition and document analysis, and the streamlined design gives a simpler starting point for applications in fields like computer vision, natural language processing, and human-computer interaction.

Abstract

The ability to accurately interpret complex visual information is a crucial topic of multimodal large language models (MLLMs). Recent work indicates that enhanced visual perception significantly reduces hallucinations and improves performance on resolution-sensitive tasks, such as optical character recognition and document analysis. A number of recent MLLMs achieve this goal using a mixture of vision encoders. Despite their success, there is a lack of systematic comparisons and detailed ablation studies addressing critical aspects, such as expert selection and the integration of multiple vision experts. This study provides an extensive exploration of the design space for MLLMs using a mixture of vision encoders and resolutions. Our findings reveal several underlying principles common to various existing strategies, leading to a streamlined yet effective design approach. We discover that simply concatenating visual tokens from a set of complementary vision encoders is as effective as more complex mixing architectures or strategies. We additionally introduce Pre-Alignment to bridge the gap between vision-focused encoders and language tokens, enhancing model coherence. The resulting family of MLLMs, Eagle, surpasses other leading open-source models on major MLLM benchmarks. Models and code: https://github.com/NVlabs/Eagle