EchoVideo: Identity-Preserving Human Video Generation by Multimodal Feature Fusion

Jiangchuan Wei, Shiyue Yan, Wenfeng Lin, Boyuan Liu, Renjie Chen, Mingyu Guo

2025-01-24

Summary

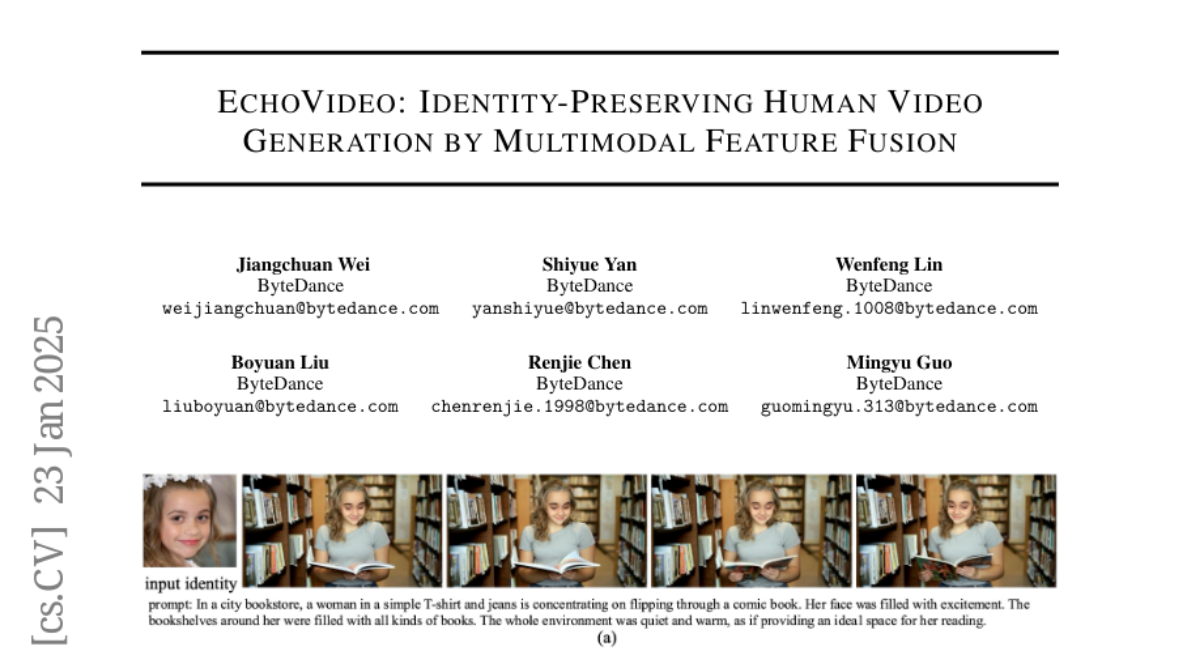

This paper talks about EchoVideo, a new way to create videos of people that look like specific individuals while keeping their appearance consistent and natural. It's like having a super-smart computer artist that can draw animated videos of you doing different things, but always making sure it looks just like you.

What's the problem?

Current methods for making videos of specific people using AI often have issues. Sometimes they just copy and paste parts of a person's face, which looks weird, or they make videos that don't really look like the person they're supposed to. This happens because these methods focus too much on small details of a person's face, which can make the face look stiff or include things that shouldn't be there, like shadows or weird angles.

What's the solution?

The researchers created EchoVideo, which does two main things differently. First, it uses something called an Identity Image-Text Fusion Module (IITF) that looks at both pictures and text descriptions to understand what makes a person look like themselves, while ignoring things like lighting, head pose, or objects covering the face. Second, they train the AI in two steps. In the second step, the AI only sometimes gets the detailed facial information, chosen at random, so it learns to balance that detail against more general features instead of leaning on it too heavily. This helps the AI create videos that look more natural and consistent.
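The summary does not say exactly how IITF combines pictures and text. As a rough illustration only, the sketch below shows one common way to fuse a face representation with text. Text-token vectors attend to face-feature vectors through single-head cross-attention, and the result is added back to the text tokens. The function names (`cross_attend`, `fuse_identity`) and the toy vectors are hypothetical. This is not EchoVideo's actual architecture.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: list[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: list[Vector], keys: list[Vector], values: list[Vector]) -> list[Vector]:
    """Single-head scaled dot-product attention: each query mixes the values."""
    d = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))])
    return out


def fuse_identity(text_tokens: list[Vector], face_tokens: list[Vector]) -> list[Vector]:
    """Hypothetical fusion: text tokens query face features, then a residual add."""
    attended = cross_attend(text_tokens, face_tokens, face_tokens)
    return [[t + a for t, a in zip(tok, att)] for tok, att in zip(text_tokens, attended)]


if __name__ == "__main__":
    text = [[1.0, 0.0], [0.0, 1.0]]  # toy text-token embeddings
    face = [[0.5, 0.5]]              # toy face-feature embedding
    print(fuse_identity(text, face))  # [[1.5, 0.5], [0.5, 1.5]]
```

With a single face token, the softmax weight is exactly 1, so every text token simply receives that token's features. This makes the residual addition easy to check by hand.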

Why does it matter?

This matters because it could make it much easier to create realistic videos of specific people for things like movies, video games, or even personalized educational content. Imagine being able to see yourself as a character in a movie or having a video tutor that looks just like you. It's also important for developing better AI that can understand and recreate human appearances more accurately, which could have applications in fields like security, entertainment, and virtual reality.

Abstract

Recent advancements in video generation have significantly impacted various downstream applications, particularly identity-preserving text-to-video generation (IPT2V). However, existing methods struggle with "copy-paste" artifacts and low similarity issues, primarily due to their reliance on low-level facial image information. This dependence can result in rigid facial appearances and artifacts reflecting irrelevant details. To address these challenges, we propose EchoVideo, which employs two key strategies: (1) an Identity Image-Text Fusion Module (IITF) that integrates high-level semantic features from text, capturing clean facial identity representations while discarding occlusions, poses, and lighting variations to avoid introducing artifacts; (2) a two-stage training strategy that incorporates a stochastic method in the second phase to randomly utilize shallow facial information. The objective is to retain the fidelity gains provided by shallow features while mitigating excessive reliance on them. This strategy encourages the model to utilize high-level features during training, ultimately fostering a more robust representation of facial identities. EchoVideo effectively preserves facial identities and maintains full-body integrity. Extensive experiments demonstrate that it achieves excellent results in generating videos with high quality, controllability, and fidelity.
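The abstract states that the second training phase uses shallow facial information only at random, but it does not describe the mechanism. The following minimal sketch rests on three assumptions. First, shallow features are a separate set of conditioning tokens. Second, they are always included in the first stage. Third, in the second stage they are kept with a fixed probability per training step. The function `select_identity_condition`, the parameter `p_use_shallow`, and the stage-1 behavior are all hypothetical.

```python
import random
from typing import Optional

Tokens = list[list[float]]


def select_identity_condition(
    fused_tokens: Tokens,
    shallow_face_tokens: Tokens,
    stage: int,
    p_use_shallow: float = 0.5,
    rng: Optional[random.Random] = None,
) -> tuple[Tokens, bool]:
    """Return the identity tokens for one training step (hypothetical sketch).

    Stage 1 (assumed): shallow facial features are always included.
    Stage 2: they are included only with probability p_use_shallow, so the
    model must often rely on the high-level fused tokens alone.
    """
    if not 0.0 <= p_use_shallow <= 1.0:
        raise ValueError("p_use_shallow must be in [0, 1]")
    rng = rng if rng is not None else random.Random()
    if stage == 1:
        use_shallow = True
    elif stage == 2:
        # random() returns a float in [0, 1), so p=0 never and p=1 always includes.
        use_shallow = rng.random() < p_use_shallow
    else:
        raise ValueError("stage must be 1 or 2")
    tokens = fused_tokens + shallow_face_tokens if use_shallow else list(fused_tokens)
    return tokens, use_shallow


if __name__ == "__main__":
    rng = random.Random(0)
    fused = [[0.1, 0.2]]                 # toy high-level identity tokens
    shallow = [[0.9, 0.8], [0.7, 0.6]]   # toy shallow facial tokens
    steps = 1000
    used = sum(
        select_identity_condition(fused, shallow, stage=2, p_use_shallow=0.3, rng=rng)[1]
        for _ in range(steps)
    )
    print(f"shallow features used in {used}/{steps} stage-2 steps")
```

Sampling per step mixes both conditions throughout the second phase. This is one way to keep some of the fidelity benefit of shallow features while preventing the model from depending on them. The probability or schedule EchoVideo actually uses is not given in this abstract.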