Efficient Audio Captioning with Encoder-Level Knowledge Distillation

Xuenan Xu, Haohe Liu, Mengyue Wu, Wenwu Wang, Mark D. Plumbley

2024-07-22

Summary

This paper discusses a new method called Efficient Audio Captioning (AAC) that improves how machines generate text descriptions for audio using a technique called knowledge distillation. The focus is on making the models smaller and faster while still maintaining high performance.

What's the problem?

Automated audio captioning models have become very large as they improve in performance, which makes them slower and harder to use. This increase in size can lead to difficulties in deploying these models in real-world applications where speed and efficiency are important. Additionally, existing methods of training these models often do not effectively utilize the available data, leading to less optimal performance.

What's the solution?

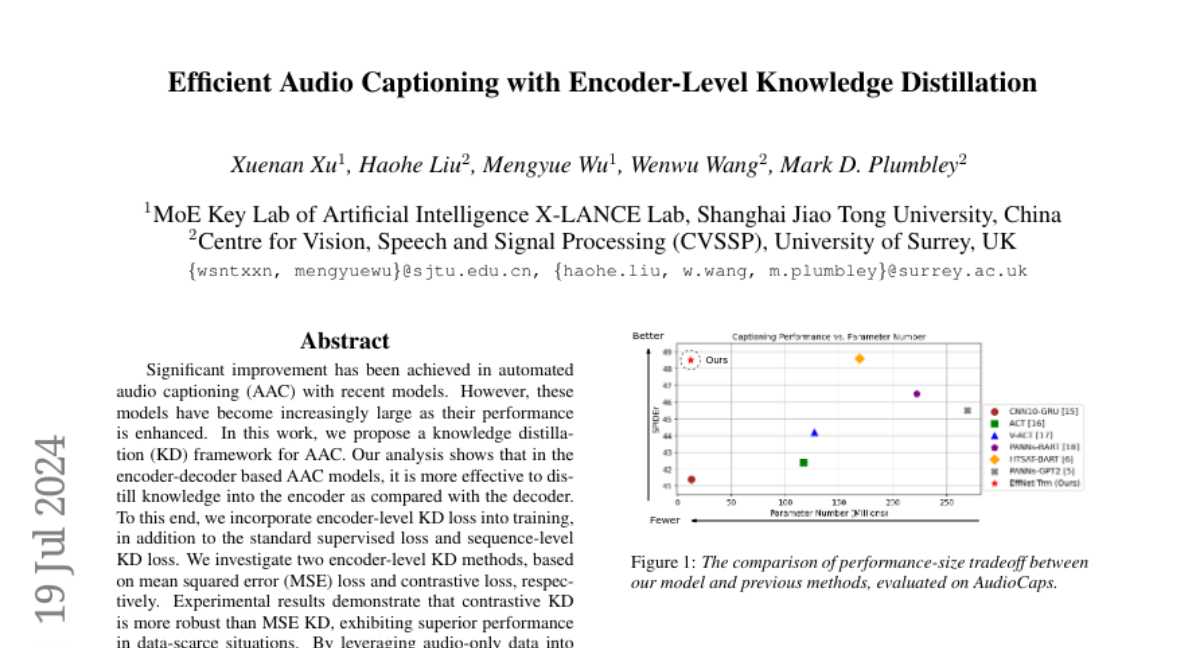

The authors propose a knowledge distillation framework specifically for audio captioning. They found that it's more effective to transfer knowledge to the encoder part of the model rather than the decoder. They introduced two methods for this: one using mean squared error (MSE) loss and another using contrastive loss. Their experiments showed that the contrastive method performed better, especially when there wasn't much training data available. By focusing on the encoder and using audio-only data for training, their model achieved competitive results while being 19 times faster than previous models.

Why it matters?

This research is important because it helps create more efficient audio captioning systems that can work well even with limited data. By improving the speed and performance of these models, they can be used in various applications, such as assisting people with hearing impairments or enhancing multimedia content with accurate descriptions.

Abstract

Significant improvement has been achieved in automated audio captioning (AAC) with recent models. However, these models have become increasingly large as their performance is enhanced. In this work, we propose a knowledge distillation (KD) framework for AAC. Our analysis shows that in the encoder-decoder based AAC models, it is more effective to distill knowledge into the encoder as compared with the decoder. To this end, we incorporate encoder-level KD loss into training, in addition to the standard supervised loss and sequence-level KD loss. We investigate two encoder-level KD methods, based on mean squared error (MSE) loss and contrastive loss, respectively. Experimental results demonstrate that contrastive KD is more robust than MSE KD, exhibiting superior performance in data-scarce situations. By leveraging audio-only data into training in the KD framework, our student model achieves competitive performance, with an inference speed that is 19 times fasterAn online demo is available at \url{https://huggingface.co/spaces/wsntxxn/efficient_audio_captioning}.