EMMA: Your Text-to-Image Diffusion Model Can Secretly Accept Multi-Modal Prompts

Yucheng Han, Rui Wang, Chi Zhang, Juntao Hu, Pei Cheng, Bin Fu, Hanwang Zhang

2024-06-14

Summary

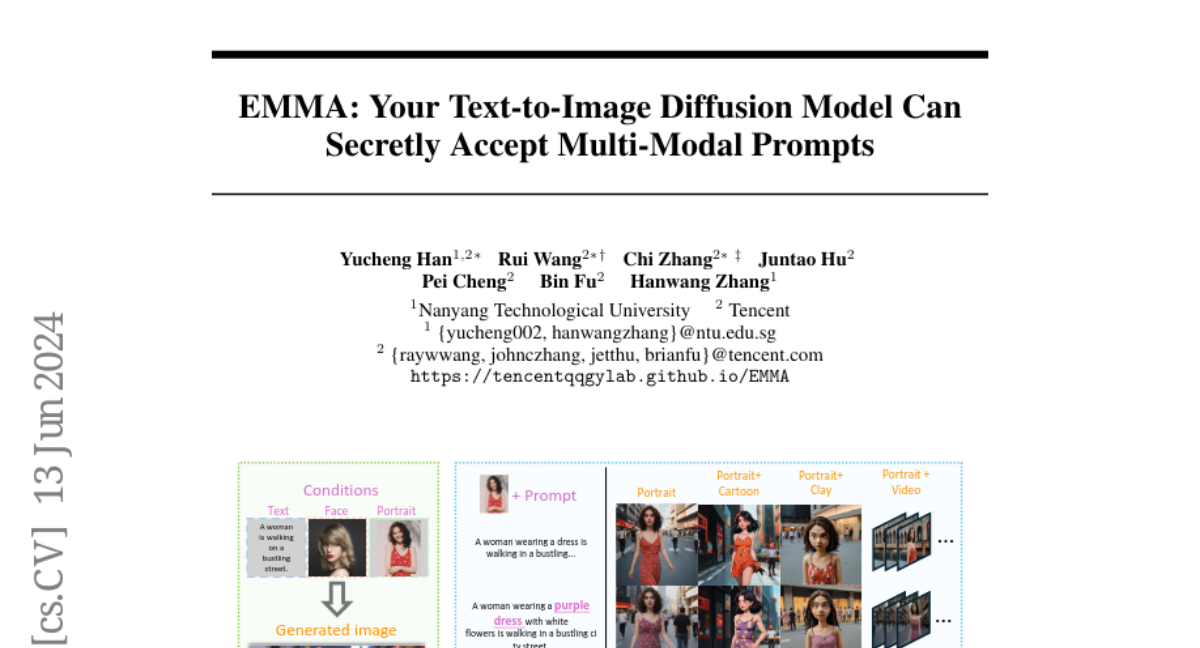

This paper presents EMMA, a new image generation model that allows users to input both text and images (multi-modal prompts) to create high-quality images. EMMA enhances the capabilities of existing models by effectively combining information from different types of inputs.

What's the problem?

Current image generation models often struggle when asked to work with multiple types of inputs, like text combined with images. They usually favor one type of input over the other, which can lead to lower quality or less accurate images. This limitation makes it difficult for these models to fully utilize the information provided by both text and images.

What's the solution?

To solve this problem, the authors developed EMMA, which is based on an advanced text-to-image diffusion model called ELLA. EMMA uses a special design called the Multi-modal Feature Connector that allows it to blend text and image information during the image creation process. By keeping most of the original model's parameters unchanged and only adjusting a few layers, EMMA can accept multi-modal prompts without needing extensive retraining. This flexibility enables it to generate personalized and context-aware images more effectively.

Why it matters?

This research is important because it improves how AI can generate images by allowing it to consider multiple types of input simultaneously. By enhancing the ability of models like EMMA to work with both text and images, we can create more accurate and detailed visual content, which has applications in fields like art, advertising, and education. The advancements made by EMMA could lead to better tools for creative expression and communication.

Abstract

Recent advancements in image generation have enabled the creation of high-quality images from text conditions. However, when facing multi-modal conditions, such as text combined with reference appearances, existing methods struggle to balance multiple conditions effectively, typically showing a preference for one modality over others. To address this challenge, we introduce EMMA, a novel image generation model accepting multi-modal prompts built upon the state-of-the-art text-to-image (T2I) diffusion model, ELLA. EMMA seamlessly incorporates additional modalities alongside text to guide image generation through an innovative Multi-modal Feature Connector design, which effectively integrates textual and supplementary modal information using a special attention mechanism. By freezing all parameters in the original T2I diffusion model and only adjusting some additional layers, we reveal an interesting finding that the pre-trained T2I diffusion model can secretly accept multi-modal prompts. This interesting property facilitates easy adaptation to different existing frameworks, making EMMA a flexible and effective tool for producing personalized and context-aware images and even videos. Additionally, we introduce a strategy to assemble learned EMMA modules to produce images conditioned on multiple modalities simultaneously, eliminating the need for additional training with mixed multi-modal prompts. Extensive experiments demonstrate the effectiveness of EMMA in maintaining high fidelity and detail in generated images, showcasing its potential as a robust solution for advanced multi-modal conditional image generation tasks.