Emu3: Next-Token Prediction is All You Need

Xinlong Wang, Xiaosong Zhang, Zhengxiong Luo, Quan Sun, Yufeng Cui, Jinsheng Wang, Fan Zhang, Yueze Wang, Zhen Li, Qiying Yu, Yingli Zhao, Yulong Ao, Xuebin Min, Tao Li, Boya Wu, Bo Zhao, Bowen Zhang, Liangdong Wang, Guang Liu, Zheqi He, Xi Yang, Jingjing Liu

2024-09-30

Summary

This paper talks about Emu3, a new model that uses a method called next-token prediction to handle multiple types of data like text, images, and videos all at once. This approach simplifies how machines learn and generate content across different formats.

What's the problem?

While next-token prediction is a promising method for developing artificial intelligence, it has struggled with multimodal tasks (tasks that involve multiple types of data, like text and images) because existing methods often rely on complex models like diffusion models. These models can be inefficient and difficult to use when trying to integrate different data types effectively.

What's the solution?

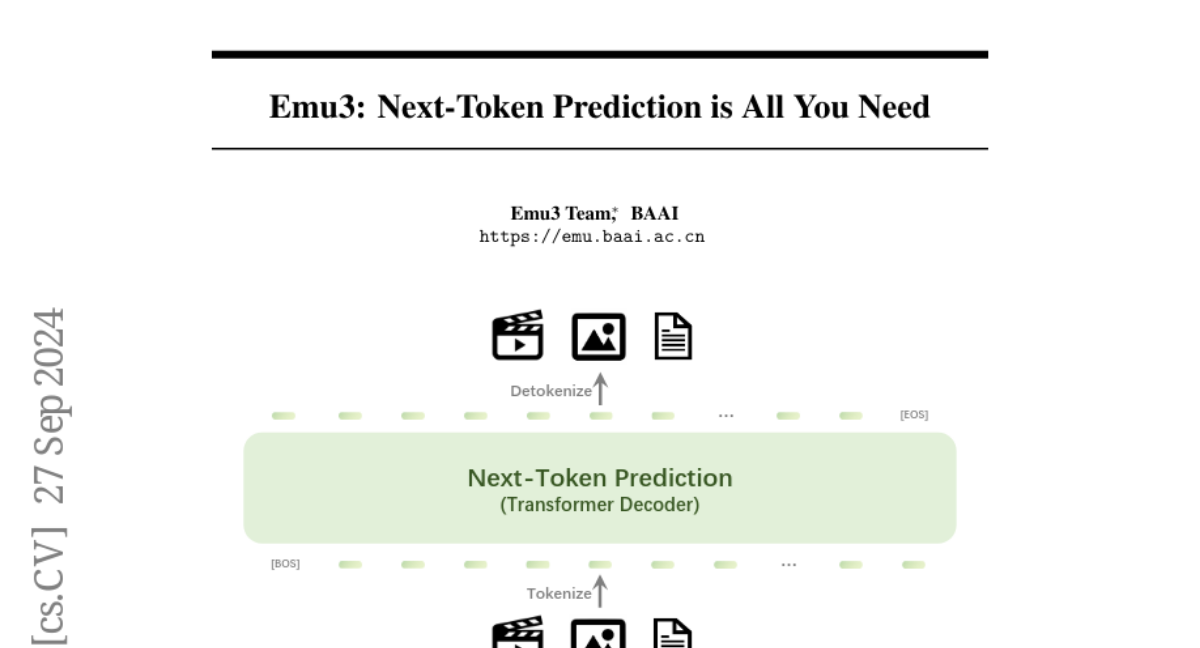

Emu3 addresses this issue by training a single model to predict the next token in a sequence, whether that token represents a word in text, a part of an image, or a frame in a video. By tokenizing all these different data types into a discrete format, Emu3 can learn from a mixture of multimodal sequences without needing the complicated structures of previous models. This allows it to outperform established models in generating and understanding various forms of content while being simpler and more efficient.

Why it matters?

This research is significant because it shows that focusing on next-token prediction can lead to advancements in creating more versatile AI systems that can understand and generate multiple types of media. By simplifying the process and achieving high performance across various tasks, Emu3 opens up new possibilities for developing general artificial intelligence that can work seamlessly with different data formats.

Abstract

While next-token prediction is considered a promising path towards artificial general intelligence, it has struggled to excel in multimodal tasks, which are still dominated by diffusion models (e.g., Stable Diffusion) and compositional approaches (e.g., CLIP combined with LLMs). In this paper, we introduce Emu3, a new suite of state-of-the-art multimodal models trained solely with next-token prediction. By tokenizing images, text, and videos into a discrete space, we train a single transformer from scratch on a mixture of multimodal sequences. Emu3 outperforms several well-established task-specific models in both generation and perception tasks, surpassing flagship models such as SDXL and LLaVA-1.6, while eliminating the need for diffusion or compositional architectures. Emu3 is also capable of generating high-fidelity video via predicting the next token in a video sequence. We simplify complex multimodal model designs by converging on a singular focus: tokens, unlocking great potential for scaling both during training and inference. Our results demonstrate that next-token prediction is a promising path towards building general multimodal intelligence beyond language. We open-source key techniques and models to support further research in this direction.