Ensembling Large Language Models with Process Reward-Guided Tree Search for Better Complex Reasoning

Sungjin Park, Xiao Liu, Yeyun Gong, Edward Choi

2024-12-25

Summary

This paper talks about LE-MCTS, a new method that combines large language models (LLMs) with a tree search approach to improve their ability to solve complex reasoning tasks.

What's the problem?

Even though large language models have made great progress, they often struggle with complex reasoning tasks that require multiple steps. Existing methods for improving their performance either focus on single outputs or do not effectively guide the models through the reasoning process, leading to inconsistent results.

What's the solution?

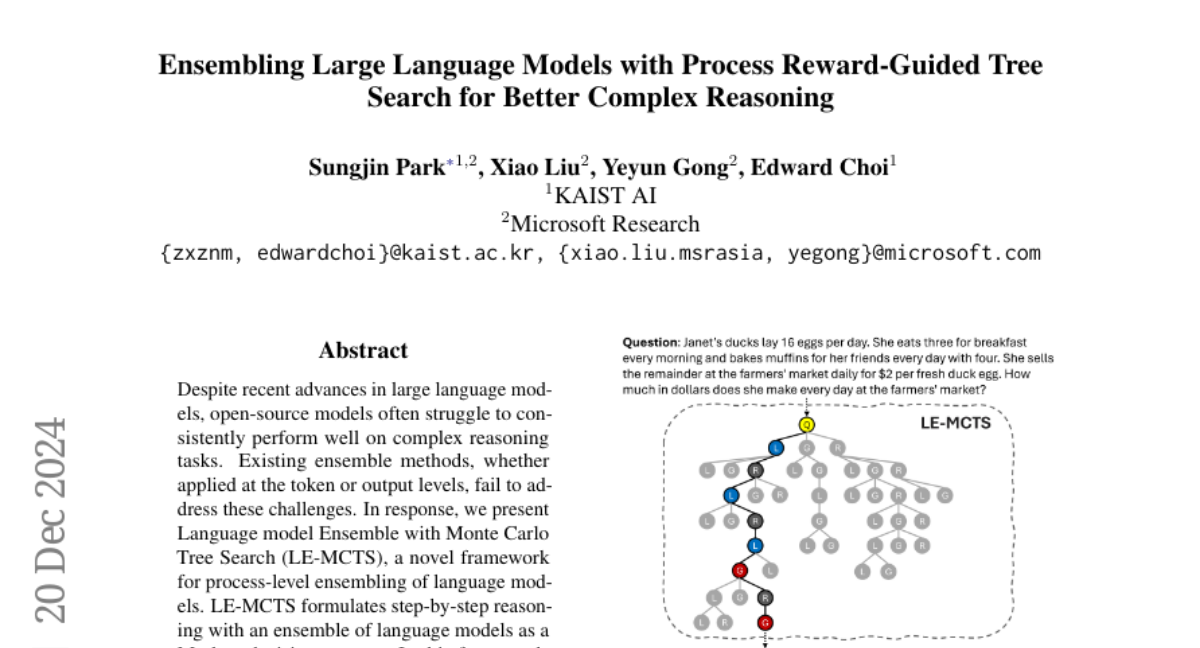

The authors introduce LE-MCTS, which stands for Language model Ensemble with Monte Carlo Tree Search. This method treats the reasoning process as a series of decisions, where each decision is based on the previous steps taken by the model. By using a tree search approach, LE-MCTS evaluates different reasoning paths and selects the most accurate one based on feedback from a reward model. This allows the model to generate better responses by considering multiple possible reasoning chains during the decision-making process.

Why it matters?

This research is important because it enhances how AI systems can tackle complex problems that require deep reasoning. By improving the performance of LLMs in these areas, LE-MCTS could lead to more reliable AI applications in fields like education, science, and technology, where accurate reasoning is crucial.

Abstract

Despite recent advances in large language models, open-source models often struggle to consistently perform well on complex reasoning tasks. Existing ensemble methods, whether applied at the token or output levels, fail to address these challenges. In response, we present Language model Ensemble with Monte Carlo Tree Search (LE-MCTS), a novel framework for process-level ensembling of language models. LE-MCTS formulates step-by-step reasoning with an ensemble of language models as a Markov decision process. In this framework, states represent intermediate reasoning paths, while actions consist of generating the next reasoning step using one of the language models selected from a predefined pool. Guided by a process-based reward model, LE-MCTS performs a tree search over the reasoning steps generated by different language models, identifying the most accurate reasoning chain. Experimental results on five mathematical reasoning benchmarks demonstrate that our approach outperforms both single language model decoding algorithms and language model ensemble methods. Notably, LE-MCTS improves performance by 3.6% and 4.3% on the MATH and MQA datasets, respectively, highlighting its effectiveness in solving complex reasoning problems.