Estimating Knowledge in Large Language Models Without Generating a Single Token

Daniela Gottesman, Mor Geva

2024-06-19

Summary

This paper discusses a new method for evaluating how knowledgeable large language models (LLMs) are about specific topics without having to generate any text. The authors introduce a tool called KEEN that examines the model's internal workings to predict its ability to answer questions and the accuracy of its responses.

What's the problem?

Typically, to assess a language model's knowledge, researchers ask it questions and evaluate the answers it generates. However, this process can be inefficient and may not accurately reflect the model's true understanding before it produces any text. There is a need for a way to evaluate the model's knowledge based on its internal computations rather than its output, which could provide quicker and more reliable insights into its capabilities.

What's the solution?

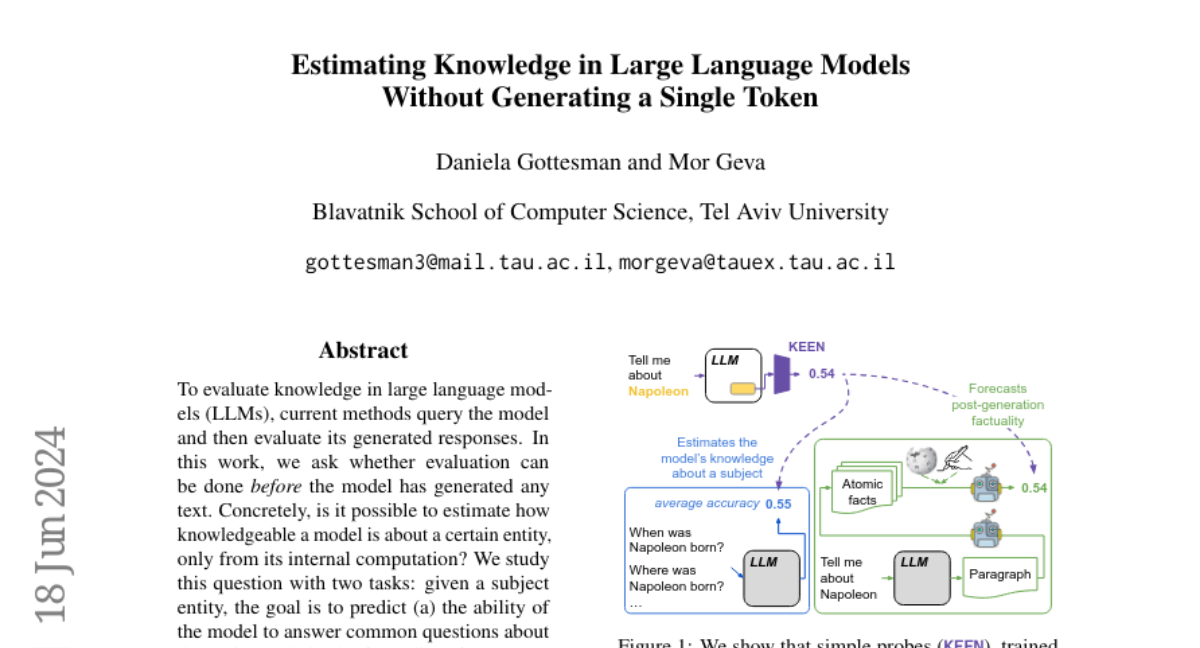

The authors propose KEEN, a simple probing tool that analyzes the internal representations of the model related to specific subjects. They designed experiments to test whether KEEN could predict two things: (1) how well the model can answer common questions about a topic and (2) how factual its generated responses are. The results showed that KEEN effectively correlates with both the accuracy of the model's answers and a recent metric for checking factual correctness called FActScore. Additionally, KEEN can highlight specific tokens that indicate where the model lacks knowledge, making it easier to identify areas needing improvement.

Why it matters?

This research is important because it offers a new way to evaluate language models that is faster and does not rely on their output. By using KEEN, researchers can better understand what these models know and where they might need more training or information. This could lead to improvements in how AI systems are developed and used, making them more reliable for applications like education, customer service, and healthcare.

Abstract

To evaluate knowledge in large language models (LLMs), current methods query the model and then evaluate its generated responses. In this work, we ask whether evaluation can be done before the model has generated any text. Concretely, is it possible to estimate how knowledgeable a model is about a certain entity, only from its internal computation? We study this question with two tasks: given a subject entity, the goal is to predict (a) the ability of the model to answer common questions about the entity, and (b) the factuality of responses generated by the model about the entity. Experiments with a variety of LLMs show that KEEN, a simple probe trained over internal subject representations, succeeds at both tasks - strongly correlating with both the QA accuracy of the model per-subject and FActScore, a recent factuality metric in open-ended generation. Moreover, KEEN naturally aligns with the model's hedging behavior and faithfully reflects changes in the model's knowledge after fine-tuning. Lastly, we show a more interpretable yet equally performant variant of KEEN, which highlights a small set of tokens that correlates with the model's lack of knowledge. Being simple and lightweight, KEEN can be leveraged to identify gaps and clusters of entity knowledge in LLMs, and guide decisions such as augmenting queries with retrieval.