Experience is the Best Teacher: Grounding VLMs for Robotics through Self-Generated Memory

Guowei Lan, Kaixian Qu, René Zurbrügg, Changan Chen, Christopher E. Mower, Haitham Bou-Ammar, Marco Hutter

2025-07-23

Summary

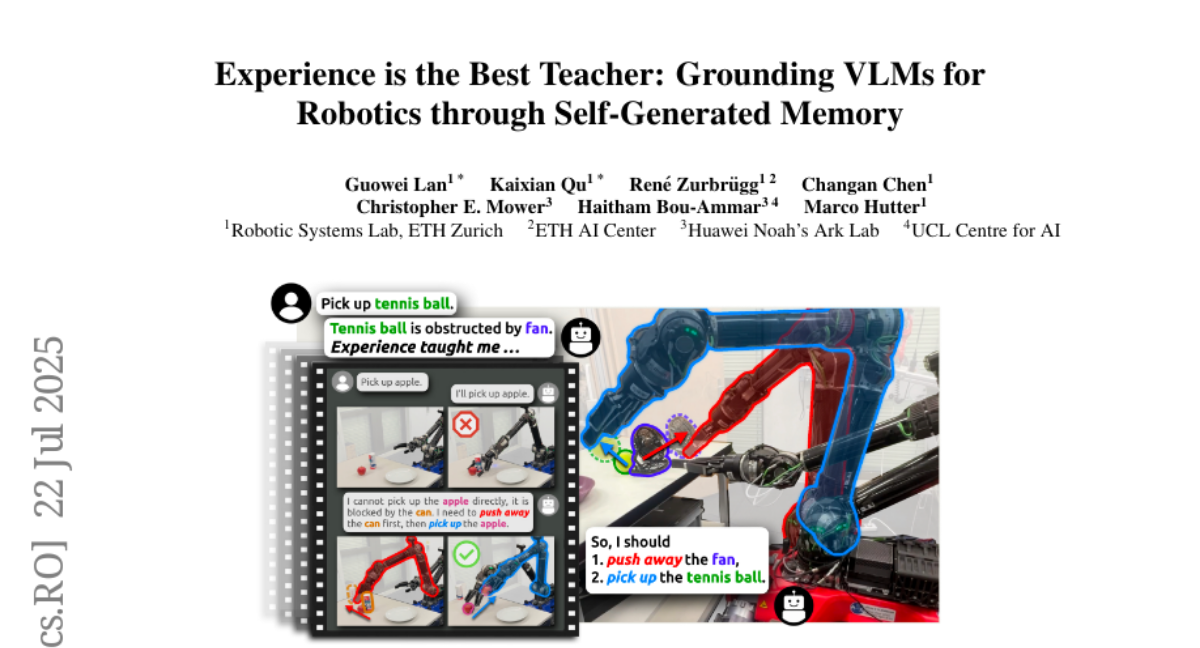

This paper introduces ExpTeach, a method that helps vision-language models understand and interact with the physical world by letting robots learn from a memory of their own experiences.

What's the problem?

Vision-language models often struggle to connect what they see and understand with real-world actions when controlling robots, making it hard for robots to perform tasks accurately.

What's the solution?

The researchers introduced a way for robots to generate their own memories of past experiences and retrieve the relevant ones to guide the AI model’s decisions. This memory-plus-retrieval system helps the robot plan and interact with objects more intelligently.
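To make the memory-and-retrieval idea concrete, here is a minimal sketch in Python. It is not the authors' implementation: the `Experience` and `ExperienceMemory` classes, the `build_prompt` helper, the bag-of-words similarity, and the prompt layout are all assumptions made for illustration. A real system would likely use learned embeddings and pass the resulting prompt to a vision-language model.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Experience:
    # Hypothetical record of one past attempt.
    task: str    # natural-language description of the attempted task
    lesson: str  # short note on what the robot learned from the outcome


def _bow(text: str) -> Counter:
    """Lowercased bag-of-words vector (a stand-in for a learned embedding)."""
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ExperienceMemory:
    """Stores past experiences and retrieves the ones most similar to a query."""

    def __init__(self) -> None:
        self._entries: list[Experience] = []

    def add(self, task: str, lesson: str) -> None:
        self._entries.append(Experience(task, lesson))

    def retrieve(self, query: str, k: int = 2) -> list[Experience]:
        q = _bow(query)
        ranked = sorted(
            self._entries,
            key=lambda e: _cosine(q, _bow(e.task)),
            reverse=True,
        )
        return ranked[:k]


def build_prompt(instruction: str, memory: ExperienceMemory, k: int = 2) -> str:
    """Prepend retrieved experiences to the instruction (assumed prompt layout)."""
    lines = ["Relevant past experiences:"]
    for e in memory.retrieve(instruction, k):
        lines.append(f"- Task: {e.task} | Lesson: {e.lesson}")
    lines.append(f"Current instruction: {instruction}")
    return "\n".join(lines)
```

In this sketch, a robot would call `add` after each attempt and `build_prompt` before planning the next one, so that the model sees the lessons from the most similar past tasks alongside the new instruction.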

Why does it matter?

Robots that learn from their own experience succeed more often in the real world and can take on more complex tasks, which is important for automation and for helping people with everyday jobs.

Abstract

ExpTeach grounds vision-language models to physical robots through self-generated memory and retrieval-augmented generation, improving success rates and enabling intelligent object interactions.