Failing Forward: Improving Generative Error Correction for ASR with Synthetic Data and Retrieval Augmentation

Sreyan Ghosh, Mohammad Sadegh Rasooli, Michael Levit, Peidong Wang, Jian Xue, Dinesh Manocha, Jinyu Li

2024-10-18

Summary

This paper discusses a new approach called DARAG, which improves the ability of Automatic Speech Recognition (ASR) systems to correct errors by using synthetic data and retrieval methods.

What's the problem?

Automatic Speech Recognition systems can make mistakes when they misinterpret spoken words, especially when faced with new types of errors that the system hasn't learned from before. This is particularly challenging for named entities (like people's names or places) because new ones keep appearing, and the models often lack enough context to understand them correctly. As a result, existing error correction methods struggle to fix these mistakes effectively.

What's the solution?

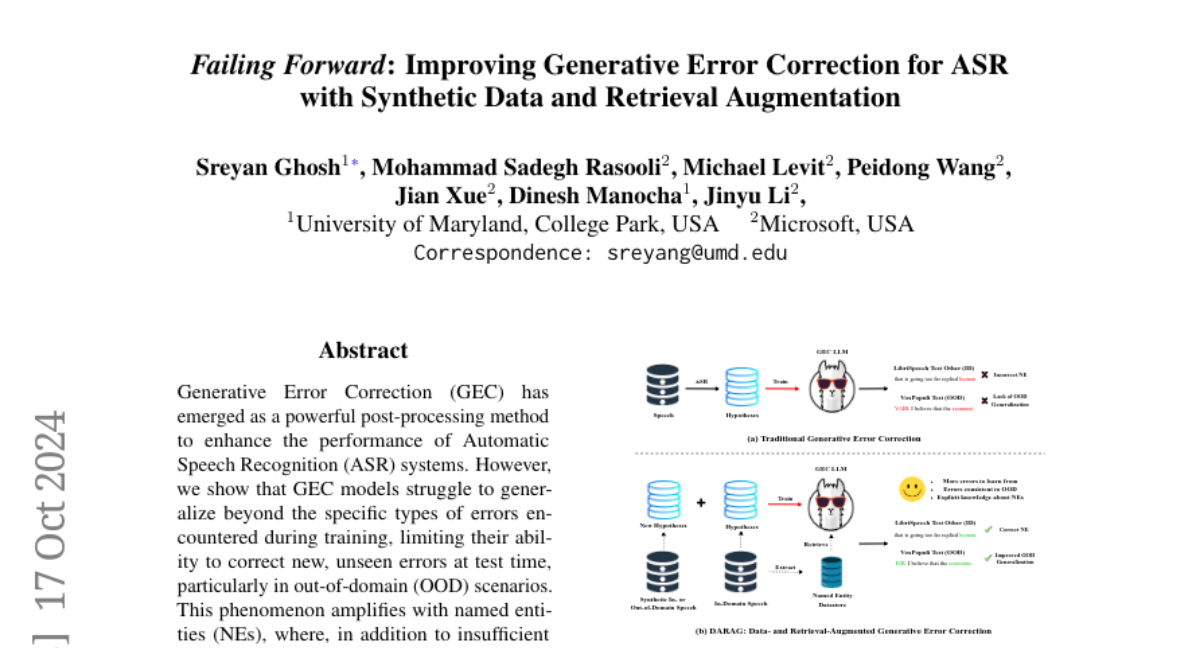

To tackle these issues, the authors propose DARAG (Data- and Retrieval-Augmented Generative Error Correction). This method enhances the training data for error correction by generating synthetic examples that simulate different types of errors. It also uses a retrieval system to pull in relevant named entities from a database, which helps the model better understand and correct its mistakes. By training on this augmented dataset, DARAG can handle both familiar and unfamiliar errors more effectively.

Why it matters?

This research is important because it helps improve the accuracy of ASR systems, making them more reliable for users. With better error correction capabilities, these systems can provide clearer transcriptions and enhance communication in various applications, such as voice assistants, customer service, and accessibility tools for people with hearing impairments. Overall, DARAG represents a significant step forward in making speech recognition technology more robust.

Abstract

Generative Error Correction (GEC) has emerged as a powerful post-processing method to enhance the performance of Automatic Speech Recognition (ASR) systems. However, we show that GEC models struggle to generalize beyond the specific types of errors encountered during training, limiting their ability to correct new, unseen errors at test time, particularly in out-of-domain (OOD) scenarios. This phenomenon amplifies with named entities (NEs), where, in addition to insufficient contextual information or knowledge about the NEs, novel NEs keep emerging. To address these issues, we propose DARAG (Data- and Retrieval-Augmented Generative Error Correction), a novel approach designed to improve GEC for ASR in in-domain (ID) and OOD scenarios. We augment the GEC training dataset with synthetic data generated by prompting LLMs and text-to-speech models, thereby simulating additional errors from which the model can learn. For OOD scenarios, we simulate test-time errors from new domains similarly and in an unsupervised fashion. Additionally, to better handle named entities, we introduce retrieval-augmented correction by augmenting the input with entities retrieved from a database. Our approach is simple, scalable, and both domain- and language-agnostic. We experiment on multiple datasets and settings, showing that DARAG outperforms all our baselines, achieving 8\% -- 30\% relative WER improvements in ID and 10\% -- 33\% improvements in OOD settings.