FastVLM: Efficient Vision Encoding for Vision Language Models

Pavan Kumar Anasosalu Vasu, Fartash Faghri, Chun-Liang Li, Cem Koc, Nate True, Albert Antony, Gokul Santhanam, James Gabriel, Peter Grasch, Oncel Tuzel, Hadi Pouransari

2024-12-19

Summary

This paper introduces FastVLM, a method for encoding images efficiently in Vision Language Models (VLMs), so that high-resolution inputs can be used without a large increase in latency.

What's the problem?

Raising the resolution of input images improves the performance of Vision Language Models, especially on text-rich images. However, popular vision encoders such as ViTs become inefficient at high resolutions: they produce a large number of visual tokens (the units of image data passed to the language model), and their stacked self-attention layers take a long time to encode the image. Both effects increase the overall latency of the model.

What's the solution?

FastVLM addresses this issue by introducing a new hybrid vision encoder called FastViTHD, which outputs fewer tokens and reduces the encoding time for high-resolution images. Instead of adding a separate token-pruning step, FastVLM balances visual token count against image resolution solely by scaling the input image size, which also simplifies the model design. This allows the model to keep similar benchmark performance while being much faster and using a smaller vision encoder.

Why does it matter?

This research is important because it enhances how AI models can understand and generate responses based on images and text. By improving efficiency in processing high-resolution images, FastVLM can lead to faster and more accurate applications in areas like image recognition, automated content generation, and other tasks that require a combination of visual and textual information.

Abstract

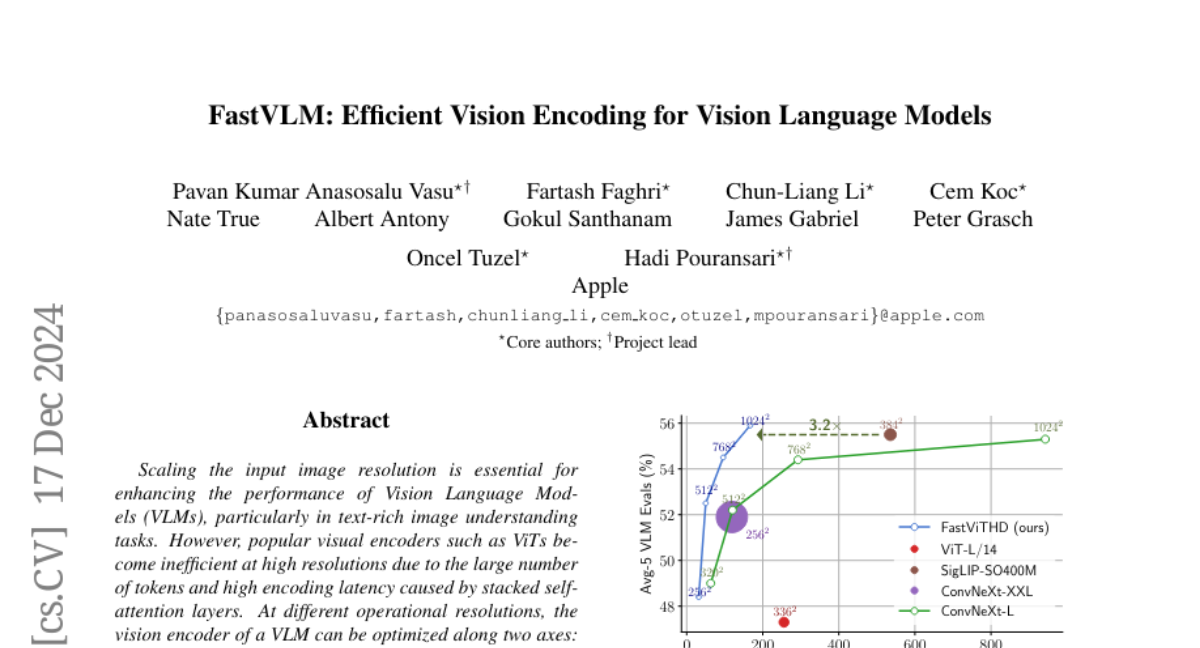

Scaling the input image resolution is essential for enhancing the performance of Vision Language Models (VLMs), particularly in text-rich image understanding tasks. However, popular visual encoders such as ViTs become inefficient at high resolutions due to the large number of tokens and high encoding latency caused by stacked self-attention layers. At different operational resolutions, the vision encoder of a VLM can be optimized along two axes: reducing encoding latency and minimizing the number of visual tokens passed to the LLM, thereby lowering overall latency. Based on a comprehensive efficiency analysis of the interplay between image resolution, vision latency, token count, and LLM size, we introduce FastVLM, a model that achieves an optimized trade-off between latency, model size and accuracy. FastVLM incorporates FastViTHD, a novel hybrid vision encoder designed to output fewer tokens and significantly reduce encoding time for high-resolution images. Unlike previous methods, FastVLM achieves the optimal balance between visual token count and image resolution solely by scaling the input image, eliminating the need for additional token pruning and simplifying the model design. In the LLaVA-1.5 setup, FastVLM achieves 3.2× improvement in time-to-first-token (TTFT) while maintaining similar performance on VLM benchmarks compared to prior works. Compared to LLaVa-OneVision at the highest resolution (1152×1152), FastVLM achieves comparable performance on key benchmarks like SeedBench and MMMU, using the same 0.5B LLM, but with 85× faster TTFT and a vision encoder that is 3.4× smaller.
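To make the link between resolution and token count concrete, here is a minimal Python sketch. It assumes a square image and an encoder that emits one token per stride × stride pixel region. The function names and the stride values (16 and 64) are illustrative assumptions, not figures taken from the paper. The second helper counts the token pairs compared by one full self-attention layer, which grows quadratically with the number of tokens.

```python
def visual_token_count(image_size: int, stride: int) -> int:
    """Tokens for a square image, one token per stride x stride region.

    Both arguments are in pixels. `stride` is a hypothetical overall
    downsampling factor of the encoder, chosen here for illustration.
    """
    if image_size % stride != 0:
        raise ValueError("image_size must be divisible by stride")
    side = image_size // stride
    return side * side


def attention_pairs(num_tokens: int) -> int:
    """Token pairs compared by one full self-attention layer."""
    return num_tokens * num_tokens


if __name__ == "__main__":
    # Illustrative resolutions and strides (assumptions, not paper values).
    for size in (256, 512, 1024):
        for stride in (16, 64):
            n = visual_token_count(size, stride)
            print(
                f"{size}x{size}, stride {stride}: "
                f"{n} tokens, {attention_pairs(n)} attention pairs"
            )
```

Under these assumptions, doubling the image side quadruples the token count, while a coarser stride cuts the count sharply at the same resolution. This is the general trade-off between resolution and token count that the abstract describes.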