Found in the Middle: Calibrating Positional Attention Bias Improves Long Context Utilization

Cheng-Yu Hsieh, Yung-Sung Chuang, Chun-Liang Li, Zifeng Wang, Long T. Le, Abhishek Kumar, James Glass, Alexander Ratner, Chen-Yu Lee, Ranjay Krishna, Tomas Pfister

2024-06-25

Summary

This paper discusses a new method called 'found-in-the-middle' that helps large language models (LLMs) better understand and utilize information located in the middle of long input texts. It addresses a common issue known as the 'lost-in-the-middle' problem, where models tend to overlook important details that are not at the beginning or end of the text.

What's the problem?

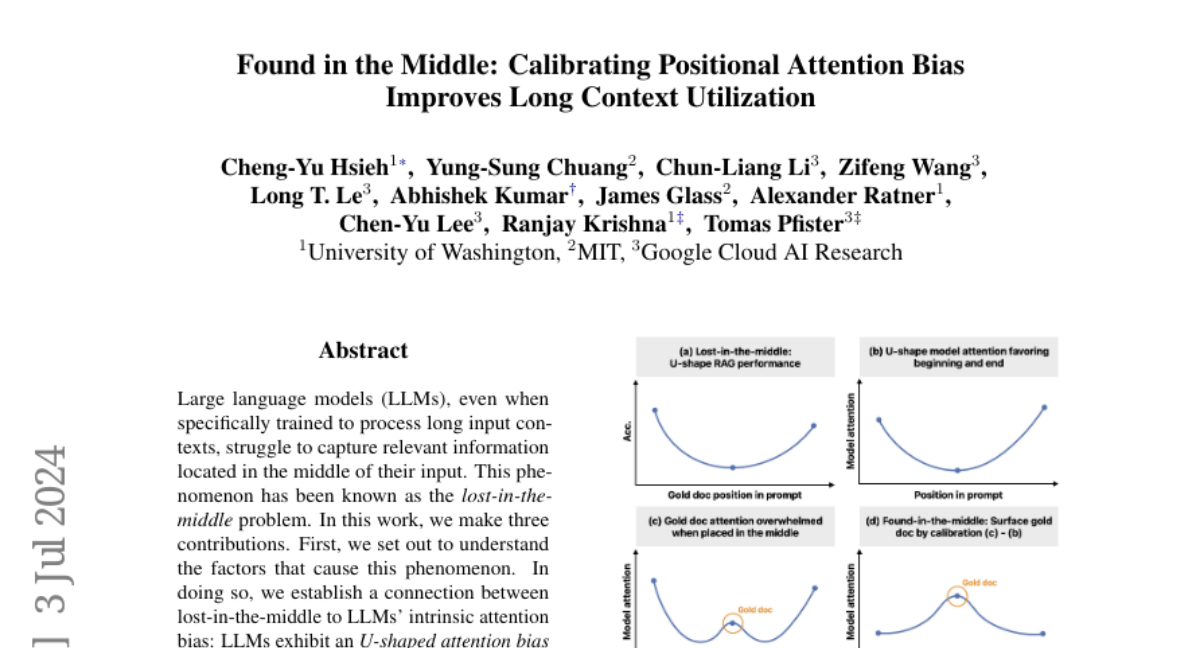

LLMs often struggle to capture relevant information that appears in the middle of long inputs. The authors trace this to an intrinsic, U-shaped positional attention bias: tokens at the beginning and end of the input receive more attention than tokens in the middle, regardless of how relevant they are. This bias hurts performance on tasks that require using the entire context, especially retrieval-augmented generation (RAG), where the relevant retrieved document can land anywhere in the prompt.
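One simple way to observe such a positional bias, sketched here under stated assumptions, is to sum the attention that falls on each document's tokens and then average those sums over many trials in which the document order is shuffled. Because the documents move around, relevance averages out and the remaining pattern mostly reflects position. The function names, the `(start, end)` span format, and the toy numbers below are hypothetical illustrations, not the paper's measurement code or data.

```python
from typing import List, Sequence, Tuple

Span = Tuple[int, int]  # hypothetical format: [start, end) token indices of one document


def document_attention(token_attn: Sequence[float], spans: Sequence[Span]) -> List[float]:
    """Total attention mass that falls on each document's token span."""
    return [sum(token_attn[start:end]) for start, end in spans]


def positional_profile(trials: Sequence[Tuple[Sequence[float], Sequence[Span]]]) -> List[float]:
    """Average per-position document attention over several trials.

    Assumes the documents were randomly permuted between trials, so that
    what survives the averaging is mostly the effect of position itself.
    """
    n_positions = len(trials[0][1])
    totals = [0.0] * n_positions
    for token_attn, spans in trials:
        for k, mass in enumerate(document_attention(token_attn, spans)):
            totals[k] += mass
    return [total / len(trials) for total in totals]


if __name__ == "__main__":
    # Toy attention weights for 3 documents of 2 tokens each (made-up numbers).
    spans = [(0, 2), (2, 4), (4, 6)]
    trials = [
        ([0.2, 0.1, 0.05, 0.05, 0.1, 0.5], spans),
        ([0.15, 0.15, 0.1, 0.1, 0.2, 0.3], spans),
    ]
    print(positional_profile(trials))  # roughly [0.3, 0.15, 0.55]: the middle gets the least
```

On these toy numbers the middle position receives the least attention on average, which is the U shape described above.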

What's the solution?

The authors introduce a calibration mechanism, 'found-in-the-middle', that corrects for this positional bias so the model attends to each part of the context according to its relevance rather than its position. With this calibration, models become better at locating relevant information within long contexts, which in turn improves RAG performance across various tasks, outperforming existing methods by up to 15 percentage points.
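The summary does not spell out the calibration formula. One simple way to picture the idea, assuming a document's observed attention is roughly its relevance plus a position-dependent bias, is to estimate that bias from the attention a content-free "dummy" document receives at the same position and subtract it. This is a minimal sketch under that additive assumption; `calibrate` and `rank_by_relevance` are hypothetical names, the numbers are made up, and how calibrated scores are fed back into generation is not shown.

```python
from typing import List, Sequence


def calibrate(observed: Sequence[float], dummy: Sequence[float]) -> List[float]:
    """Remove an estimated positional bias from per-document attention.

    observed[k]: attention on the real document placed at position k.
    dummy[k]:    attention on a content-free placeholder at the same position k,
                 used here (by assumption) as the position-only baseline.
    """
    if len(observed) != len(dummy):
        raise ValueError("observed and dummy must have the same length")
    return [o - d for o, d in zip(observed, dummy)]


def rank_by_relevance(observed: Sequence[float], dummy: Sequence[float]) -> List[int]:
    """Positions ordered from most to least relevant after calibration."""
    scores = calibrate(observed, dummy)
    return sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)


if __name__ == "__main__":
    observed = [0.35, 0.25, 0.40]  # raw attention favours the edges
    dummy = [0.30, 0.10, 0.38]     # hypothetical U-shaped positional baseline
    print(rank_by_relevance(observed, dummy))  # [1, 0, 2]: the middle document wins
```

Ranking by raw attention would put the last document first; after subtracting the baseline, the middle document, which attracts much more attention than its position alone would explain, comes out on top.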

Why it matters?

This research is important because it improves how LLMs use long contexts, making them more effective for applications such as question answering over retrieved documents. By addressing the lost-in-the-middle problem, relevant information is less likely to be ignored simply because of where it appears, which can make RAG-based systems more accurate and reliable.

Abstract

Large language models (LLMs), even when specifically trained to process long input contexts, struggle to capture relevant information located in the middle of their input. This phenomenon is known as the lost-in-the-middle problem. In this work, we make three contributions. First, we set out to understand the factors that cause this phenomenon. In doing so, we establish a connection between lost-in-the-middle and LLMs' intrinsic attention bias: LLMs exhibit a U-shaped attention bias where the tokens at the beginning and at the end of their input receive higher attention, regardless of their relevance. Second, we mitigate this positional bias through a calibration mechanism, found-in-the-middle, that allows the model to attend to contexts faithfully according to their relevance, even when they are in the middle. Third, we show that found-in-the-middle not only achieves better performance in locating relevant information within a long context, but also leads to improved retrieval-augmented generation (RAG) performance across various tasks, outperforming existing methods by up to 15 percentage points. These findings open up future directions in understanding LLM attention bias and its potential consequences.