FullFront: Benchmarking MLLMs Across the Full Front-End Engineering Workflow

Haoyu Sun, Huichen Will Wang, Jiawei Gu, Linjie Li, Yu Cheng

2025-05-26

Summary

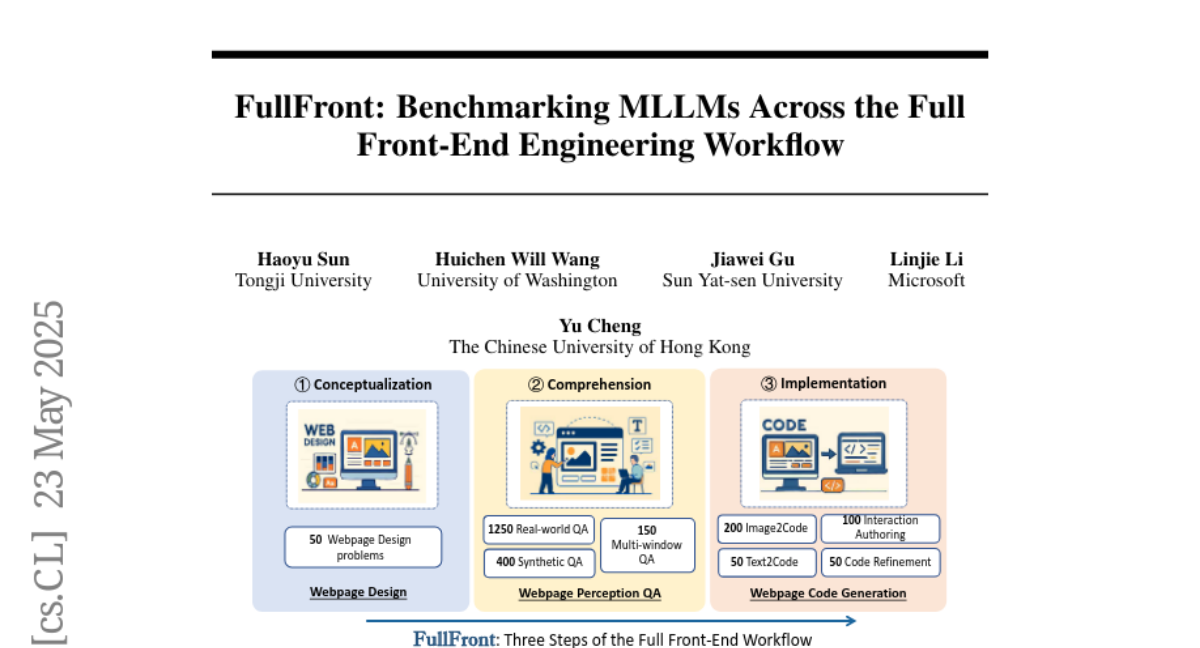

This paper talks about FullFront, a new benchmark that tests how well Multimodal Large Language Models can help with all the main steps in front-end engineering, from coming up with ideas to understanding and actually building user interfaces.

What's the problem?

The problem is that while these advanced AI models are getting better at handling code and design, there hasn't been a complete way to measure how well they do across the whole process of front-end development, which includes planning, understanding, and creating web interfaces.

What's the solution?

The researchers created FullFront, a set of challenges that cover every stage of front-end engineering. This benchmark checks if the models can take an idea, understand what needs to be done, and then actually turn it into working code or design, giving a full picture of their abilities.

Why it matters?

This is important because it helps developers and researchers see where AI models are strong or need improvement in real-world web development tasks, making it easier to build better tools for creating websites and apps.

Abstract

FullFront is a benchmark evaluating Multimodal Large Language Models across conceptualization, comprehension, and implementation phases in front-end engineering.