Game4Loc: A UAV Geo-Localization Benchmark from Game Data

Yuxiang Ji, Boyong He, Zhuoyue Tan, Liaoni Wu

2024-09-26

Summary

This paper introduces Game4Loc, a benchmark and dataset for geo-localizing unmanned aerial vehicles (UAVs), built from video game data. It aims to help UAVs determine their location from imagery, without relying solely on GPS.

What's the problem?

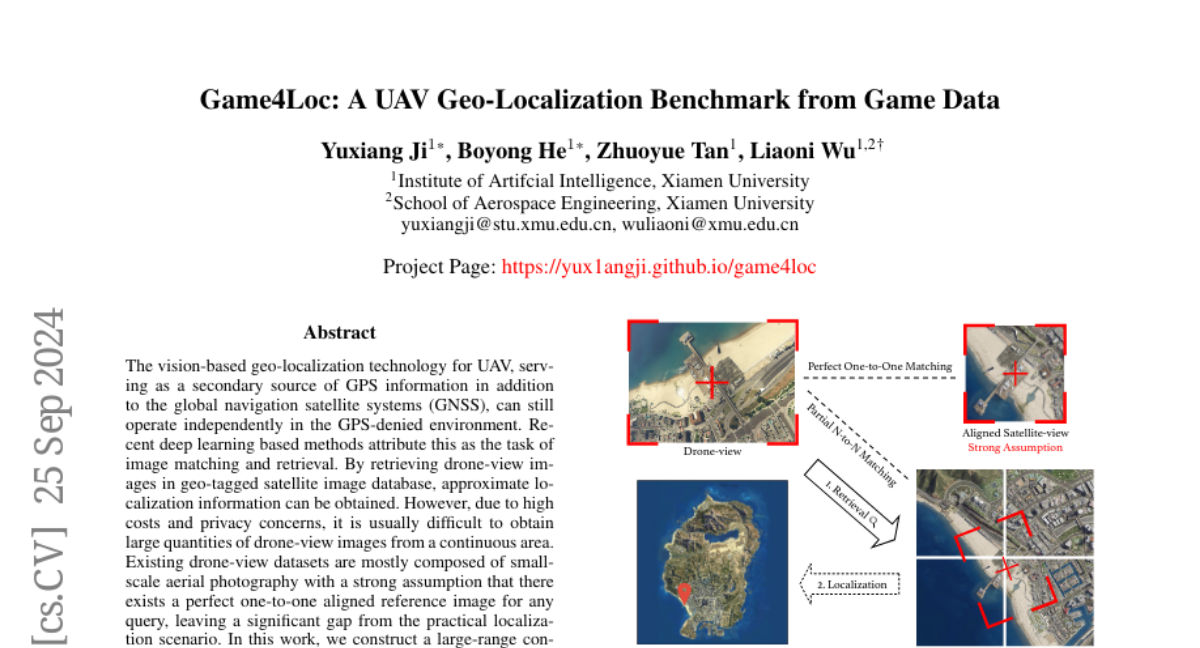

Geo-localization for UAVs is challenging, especially in areas where GPS signals are weak or unavailable. Vision-based methods need large numbers of drone-view images, which are expensive to collect and raise privacy concerns. Most existing datasets also assume that every drone image has a perfectly aligned, one-to-one matching satellite image, which rarely holds in practice.

What's the solution?

To address these issues, the researchers built GTA-UAV, a dataset of drone-view images collected over a large contiguous area using modern computer games. It covers multiple scenes, targets, flight altitudes, and attitudes. They also define a more practical task in which a drone image may only partially overlap its satellite counterpart, and they measure localization error as a distance in meters rather than only as image-level retrieval accuracy. To train on these partially matched pairs, they use a weight-based contrastive learning approach, which avoids additional post-processing matching steps.
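To make the idea of weight-based contrastive learning concrete, here is a minimal sketch, not the authors' implementation. It assumes each drone/satellite pair carries a weight in (0, 1] (for example, derived from how much the two views overlap) and uses that weight as a soft target in an InfoNCE-style loss. The function names, the soft-target form, and the temperature value are all assumptions made for illustration.

```python
import math


def log_softmax(row):
    """Numerically stable log-softmax over a list of logits."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def weighted_contrastive_loss(sim, pos_idx, weights, temperature=0.1):
    """Soft-target InfoNCE (hypothetical form, assumed for illustration).

    sim      -- sim[i][j] is the similarity of drone query i to satellite image j
    pos_idx  -- pos_idx[i] is the index of the paired satellite image for query i
    weights  -- weights[i] in (0, 1]; 1.0 means a perfect match, lower means partial
    """
    total = 0.0
    for row, p, w in zip(sim, pos_idx, weights):
        logp = log_softmax([s / temperature for s in row])
        k = len(row)
        # Spread the remaining target mass evenly over the non-paired images.
        off = (1.0 - w) / (k - 1) if k > 1 else 0.0
        total += -sum((w if j == p else off) * lp for j, lp in enumerate(logp))
    return total / len(sim)


if __name__ == "__main__":
    sim = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]]
    print(weighted_contrastive_loss(sim, [0, 1], [1.0, 0.6]))
```

With all weights set to 1.0, this reduces to the standard InfoNCE loss. Lower weights pull a partially matched pair together less strongly, which is the intuition behind training on partial matches.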

Why it matters?

This research is important because it provides a more practical and effective way to help UAVs determine their location in real-world scenarios. By using data from video games, the researchers have created a large and diverse dataset that can improve the accuracy of geo-localization methods. This advancement could enhance the capabilities of drones in various applications, such as search and rescue missions, environmental monitoring, and agricultural management.

Abstract

Vision-based geo-localization technology for UAVs, serving as a secondary source of GPS information in addition to global navigation satellite systems (GNSS), can still operate independently in GPS-denied environments. Recent deep learning based methods frame this as a task of image matching and retrieval. By retrieving drone-view images in a geo-tagged satellite image database, approximate localization information can be obtained. However, due to high costs and privacy concerns, it is usually difficult to obtain large quantities of drone-view images from a continuous area. Existing drone-view datasets are mostly composed of small-scale aerial photography with a strong assumption that there exists a perfect one-to-one aligned reference image for any query, leaving a significant gap from the practical localization scenario. In this work, we construct a large-range contiguous area UAV geo-localization dataset named GTA-UAV, featuring multiple flight altitudes, attitudes, scenes, and targets using modern computer games. Based on this dataset, we introduce a more practical UAV geo-localization task including partial matches of cross-view paired data, and expand the image-level retrieval to the actual localization in terms of distance (meters). For the construction of drone-view and satellite-view pairs, we adopt a weight-based contrastive learning approach, which allows for effective learning while avoiding additional post-processing matching steps. Experiments demonstrate the effectiveness of our data and training method for UAV geo-localization, as well as the generalization capabilities to real-world scenarios.
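The step from image-level retrieval to localization in meters can be sketched as follows. This is an illustrative sketch under stated assumptions, not the benchmark's evaluation code: embeddings are plain lists of floats, each satellite tile has a hypothetical `center_xy` position in a planar frame measured in meters, and the error is the Euclidean distance between the retrieved tile's center and the drone's true position.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def localize(query_emb, gallery):
    """Return the center of the satellite tile most similar to the query.

    gallery -- list of dicts with hypothetical keys "emb" and "center_xy"
    """
    best = max(gallery, key=lambda tile: cosine(query_emb, tile["emb"]))
    return best["center_xy"]


def distance_error_m(pred_xy, true_xy):
    """Planar distance in meters between predicted and true positions."""
    return math.hypot(pred_xy[0] - true_xy[0], pred_xy[1] - true_xy[1])


if __name__ == "__main__":
    gallery = [
        {"emb": [1.0, 0.0], "center_xy": (100.0, 50.0)},
        {"emb": [0.0, 1.0], "center_xy": (400.0, 50.0)},
    ]
    pred = localize([0.9, 0.1], gallery)
    print(pred, distance_error_m(pred, (110.0, 40.0)))
```

Reporting the error as a distance means a retrieved tile that overlaps the query only partially still counts as a near miss, whereas a strict one-to-one retrieval metric would score it as a failure.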