GazeGen: Gaze-Driven User Interaction for Visual Content Generation

He-Yen Hsieh, Ziyun Li, Sai Qian Zhang, Wei-Te Mark Ting, Kao-Den Chang, Barbara De Salvo, Chiao Liu, H. T. Kung

2024-11-08

Summary

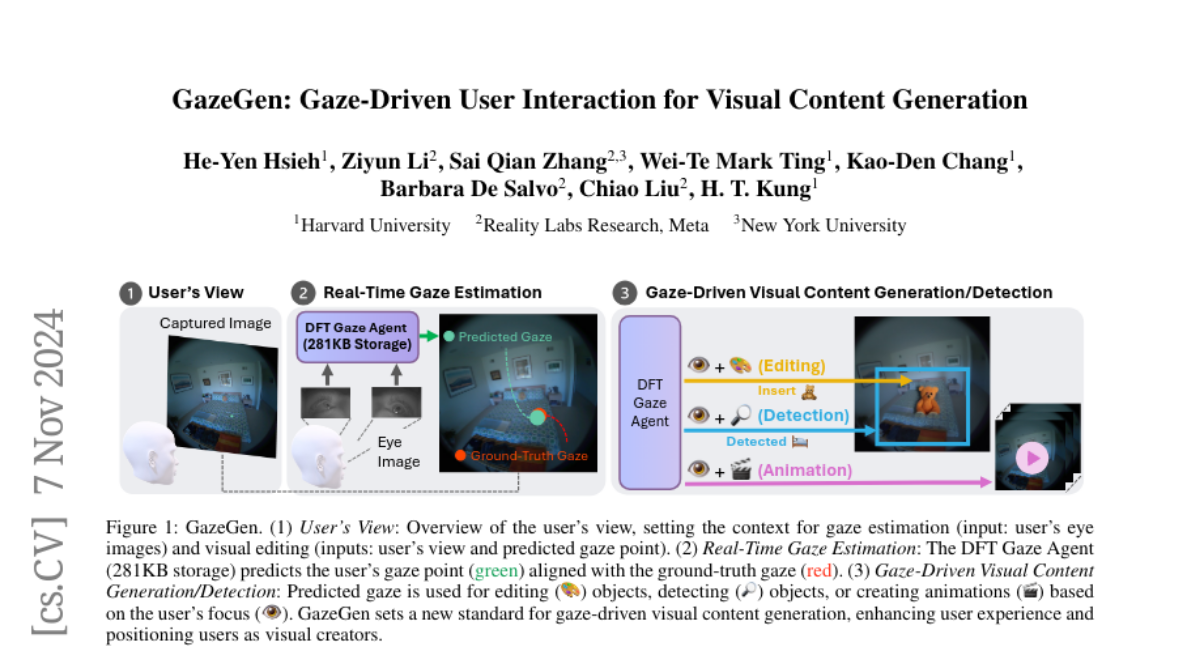

This paper introduces GazeGen, a system that allows users to create and edit images and videos using their eye gaze, making the process more intuitive and hands-free.

What's the problem?

Many traditional methods for manipulating visual content require physical interaction, which can be difficult for some people, especially those with disabilities. Additionally, existing systems often do not allow for easy and natural ways to interact with digital content without using hands or controllers.

What's the solution?

GazeGen uses a lightweight model called DFT Gaze to track where a user is looking in real-time. This system allows users to control visual content by simply looking at different areas on the screen. Users can add or delete objects in images, change their positions, or even turn static images into videos—all by directing their gaze. The DFT Gaze model is efficient and accurate, designed to work on small devices like Raspberry Pi, making it accessible for various applications.

Why it matters?

This research is significant because it enhances user interaction with digital content in a way that is more natural and accessible. By allowing people to manipulate images and videos using just their eyes, GazeGen opens up new possibilities for creative expression and usability in technology, especially for individuals who may have difficulty using traditional input methods.

Abstract

We present GazeGen, a user interaction system that generates visual content (images and videos) for locations indicated by the user's eye gaze. GazeGen allows intuitive manipulation of visual content by targeting regions of interest with gaze. Using advanced techniques in object detection and generative AI, GazeGen performs gaze-controlled image adding/deleting, repositioning, and surface material changes of image objects, and converts static images into videos. Central to GazeGen is the DFT Gaze (Distilled and Fine-Tuned Gaze) agent, an ultra-lightweight model with only 281K parameters, performing accurate real-time gaze predictions tailored to individual users' eyes on small edge devices. GazeGen is the first system to combine visual content generation with real-time gaze estimation, made possible exclusively by DFT Gaze. This real-time gaze estimation enables various visual content generation tasks, all controlled by the user's gaze. The input for DFT Gaze is the user's eye images, while the inputs for visual content generation are the user's view and the predicted gaze point from DFT Gaze. To achieve efficient gaze predictions, we derive the small model from a large model (10x larger) via novel knowledge distillation and personal adaptation techniques. We integrate knowledge distillation with a masked autoencoder, developing a compact yet powerful gaze estimation model. This model is further fine-tuned with Adapters, enabling highly accurate and personalized gaze predictions with minimal user input. DFT Gaze ensures low-latency and precise gaze tracking, supporting a wide range of gaze-driven tasks. We validate the performance of DFT Gaze on AEA and OpenEDS2020 benchmarks, demonstrating low angular gaze error and low latency on the edge device (Raspberry Pi 4). Furthermore, we describe applications of GazeGen, illustrating its versatility and effectiveness in various usage scenarios.