Gen2Act: Human Video Generation in Novel Scenarios enables Generalizable Robot Manipulation

Homanga Bharadhwaj, Debidatta Dwibedi, Abhinav Gupta, Shubham Tulsiani, Carl Doersch, Ted Xiao, Dhruv Shah, Fei Xia, Dorsa Sadigh, Sean Kirmani

2024-09-25

Summary

This paper presents Gen2Act, a new approach for teaching robots how to perform tasks with objects they haven't seen before by generating human-like videos that demonstrate the actions. This method allows robots to learn from video examples instead of needing extensive real-world training.

What's the problem?

Training robots to manipulate objects can be expensive and time-consuming, especially when it comes to new tasks involving unfamiliar objects or motions. Traditional methods require collecting a lot of data from real robot interactions, which is not only costly but also limits the robot's ability to adapt to new situations.

What's the solution?

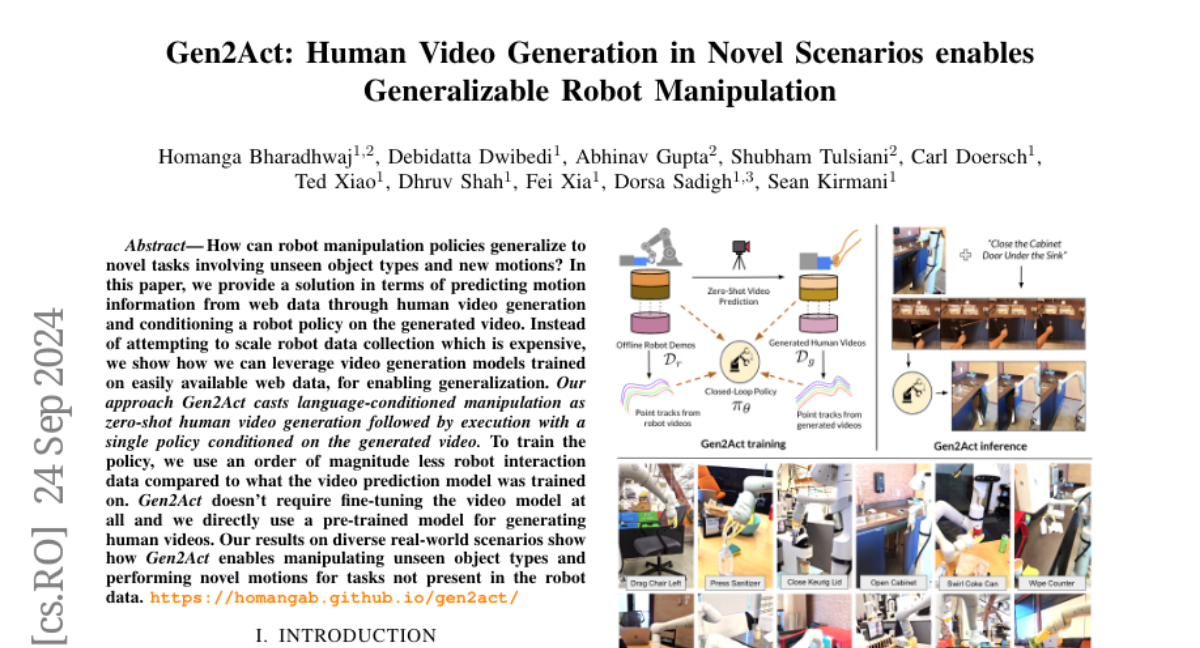

To solve this problem, the researchers developed Gen2Act, which uses human video generation to create examples of how to handle various tasks. Instead of relying on a large amount of robot data, Gen2Act generates videos based on web data that show how humans perform these tasks. The robot then uses these videos as guides to learn how to act in new scenarios without needing to be retrained. This approach allows the robot to generalize its skills to unseen objects and motions by simply watching how a human would do it.

Why it matters?

This research is important because it represents a significant advancement in robotics by making it easier and cheaper for robots to learn new tasks. By using human-generated videos instead of requiring extensive real-world training, Gen2Act opens up possibilities for robots to be more adaptable and effective in various environments, such as homes or workplaces. This could lead to more practical applications of robots in everyday life.

Abstract

How can robot manipulation policies generalize to novel tasks involving unseen object types and new motions? In this paper, we provide a solution in terms of predicting motion information from web data through human video generation and conditioning a robot policy on the generated video. Instead of attempting to scale robot data collection which is expensive, we show how we can leverage video generation models trained on easily available web data, for enabling generalization. Our approach Gen2Act casts language-conditioned manipulation as zero-shot human video generation followed by execution with a single policy conditioned on the generated video. To train the policy, we use an order of magnitude less robot interaction data compared to what the video prediction model was trained on. Gen2Act doesn't require fine-tuning the video model at all and we directly use a pre-trained model for generating human videos. Our results on diverse real-world scenarios show how Gen2Act enables manipulating unseen object types and performing novel motions for tasks not present in the robot data. Videos are at https://homangab.github.io/gen2act/