Generate, but Verify: Reducing Hallucination in Vision-Language Models with Retrospective Resampling

Tsung-Han Wu, Heekyung Lee, Jiaxin Ge, Joseph E. Gonzalez, Trevor Darrell, David M. Chan

2025-04-18

Summary

This paper introduces REVERSE, a framework that helps vision-language models (AI models that process both images and text) avoid 'hallucinating', or making things up, by checking their own answers as they generate them.

What's the problem?

The problem is that vision-language models, which are supposed to describe images or answer questions about them, sometimes give answers that sound convincing but are actually wrong or made up. This is called hallucination, and it can be a big issue when people rely on these models for important information.

What's the solution?

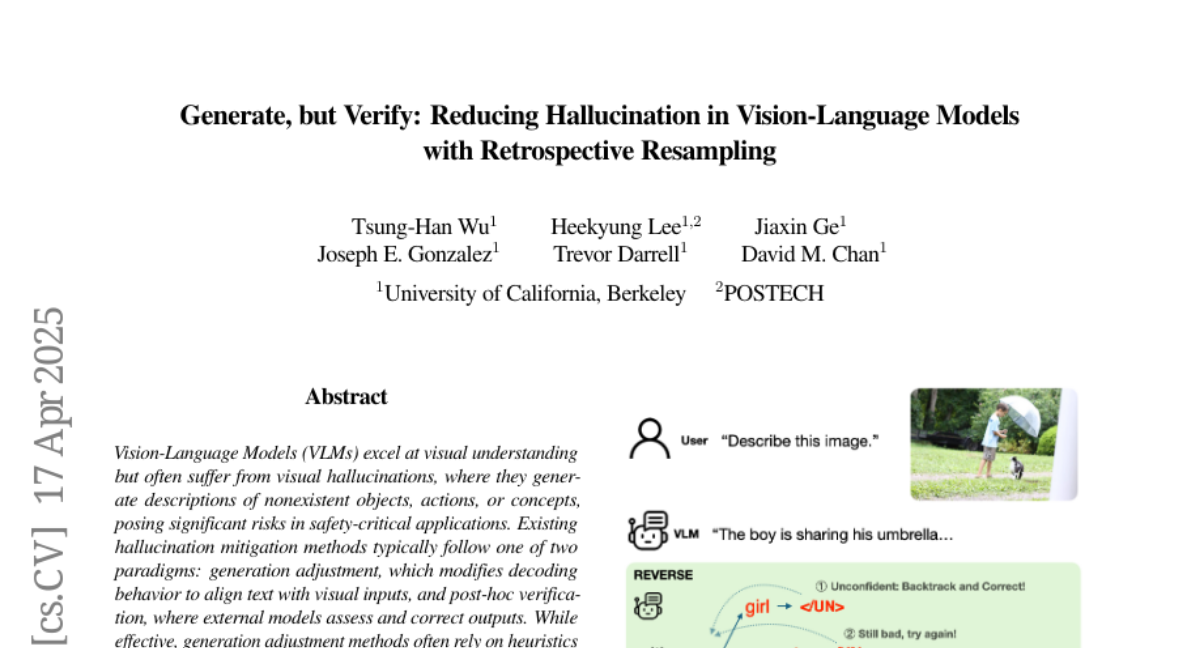

The researchers created REVERSE, which combines two components: hallucination-aware training, which teaches the model to recognize when it might be hallucinating, and a verification step in which the model checks its own output as it generates. The verification step uses a new technique called retrospective resampling, which lets the model catch mistakes and correct them before giving a final answer.
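To make the generate-then-verify idea concrete, here is a minimal toy sketch of a resampling loop. It is not the authors' implementation: the function `generate_with_verification`, the `propose` and `risk` callables, the `threshold` and `max_retries` parameters, and the hard-coded candidate phrases are all hypothetical stand-ins. In a real system both the proposals and the hallucination score would come from the model itself.

```python
from typing import Callable, List, Tuple

def generate_with_verification(
    propose: Callable[[List[str], int], str],
    risk: Callable[[List[str], str], float],
    num_steps: int,
    threshold: float = 0.5,   # hypothetical cutoff for "likely hallucinated"
    max_retries: int = 3,     # hypothetical retry budget per phrase
) -> Tuple[List[str], int]:
    """Build an answer phrase by phrase, discarding flagged phrases and retrying."""
    accepted: List[str] = []
    rejected = 0
    for _ in range(num_steps):
        for attempt in range(max_retries + 1):
            candidate = propose(accepted, attempt)
            if risk(accepted, candidate) <= threshold:
                accepted.append(candidate)  # verified: keep and move on
                break
            rejected += 1  # flagged: drop the phrase and resample from the same prefix
        else:
            break  # every attempt was flagged; stop instead of emitting an unverified phrase
    return accepted, rejected

# Toy stand-ins for the model (assumptions for illustration only).
CANDIDATES = [["a dog", "a cat"], ["on a sofa", "on a beach"]]
IMAGE_FACTS = {"a cat", "on a sofa"}  # what the imaginary image actually shows

def toy_propose(prefix: List[str], attempt: int) -> str:
    options = CANDIDATES[len(prefix)]
    return options[attempt % len(options)]

def toy_risk(prefix: List[str], candidate: str) -> float:
    return 0.0 if candidate in IMAGE_FACTS else 1.0

phrases, num_rejected = generate_with_verification(toy_propose, toy_risk, num_steps=2)
print(" ".join(phrases), "| rejected:", num_rejected)
```

In this toy run the first proposal, "a dog", is flagged and replaced by "a cat", while "on a sofa" passes on the first try. Stopping when the retry budget runs out is a design choice of this sketch, not something the summary specifies.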

Why does it matter?

This matters because reducing hallucination makes vision-language models more trustworthy in settings where factual accuracy is critical, such as education, healthcare, or news reporting.

Abstract

REVERSE is a unified framework that reduces hallucinations in Vision-Language Models by integrating hallucination-aware training and on-the-fly self-verification using a novel retrospective resampling technique.