GET-Zero: Graph Embodiment Transformer for Zero-shot Embodiment Generalization

Austin Patel, Shuran Song

2024-07-23

Summary

This paper introduces GET-Zero, a model architecture and training procedure that lets a robot control policy adapt to hardware changes without retraining. Its core is the Graph Embodiment Transformer (GET), a transformer whose attention mechanism is biased by the connectivity of the robot's embodiment graph, so the policy makes control decisions conditioned on the robot's physical configuration.

What's the problem?

When robots are built with different parts or designs, they often need to be retrained to learn how to move and operate correctly. This process can be time-consuming and inefficient, especially if the robot's hardware changes frequently. Traditional methods struggle with this adaptability, making it hard for robots to quickly adjust to new setups or configurations.

What's the solution?

GET-Zero addresses this challenge with a single embodiment-aware policy built on the Graph Embodiment Transformer (GET), a transformer that uses the connectivity of the robot's embodiment graph as a learned structural bias in its attention mechanism. The policy is trained by behavior cloning: demonstration data from embodiment-specific expert policies is distilled into one model that conditions on the robot's hardware configuration. Combined with a self-modeling loss, this lets the policy generalize zero-shot to configurations it never saw during training. In the paper's case study, it rotates an object in-hand with a four-fingered robot hand even when joints are removed or links are lengthened.
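To make the idea of learning from expert demonstrations concrete, here is a minimal behavior cloning sketch in NumPy. It is a toy under loud assumptions, not the paper's training code: the data is synthetic, the "expert" is a random linear map, the hardware descriptor `emb` is a hypothetical binary vector, and the student is a linear policy rather than a transformer. It only shows the core recipe of regressing a student policy onto expert actions while feeding it the embodiment descriptor.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic demonstration data (illustrative only, not from the paper).
num_samples, obs_dim, emb_dim, act_dim = 512, 4, 3, 2
obs = rng.normal(size=(num_samples, obs_dim))
# Hypothetical hardware descriptor, e.g. one flag per optional joint.
emb = rng.integers(0, 2, size=(num_samples, emb_dim)).astype(float)
inputs = np.concatenate([obs, emb], axis=1)

# Stand-in for the expert policies: a fixed random linear map.
expert_weights = rng.normal(size=(obs_dim + emb_dim, act_dim))
expert_actions = inputs @ expert_weights


def bc_loss(weights):
    """Mean squared error between student and expert actions."""
    return float(np.mean((inputs @ weights - expert_actions) ** 2))


# Student policy: linear in [observation, embodiment descriptor].
student = np.zeros_like(expert_weights)
initial_loss = bc_loss(student)

for _ in range(500):
    residual = inputs @ student - expert_actions
    grad = 2.0 * inputs.T @ residual / residual.size  # gradient of bc_loss
    student -= 0.2 * grad                             # plain gradient descent

final_loss = bc_loss(student)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Because the student sees the embodiment descriptor as part of its input, one set of weights can in principle serve several hardware variants, which is the property the summary above describes.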

Why does it matter?

This research is important because it allows robots to be more flexible and efficient in their operations. By reducing the need for retraining when hardware changes, GET-Zero can help speed up the development and deployment of robotic systems in various fields, such as manufacturing, healthcare, and service industries. This adaptability could lead to more advanced and capable robots that can handle a wider range of tasks.

Abstract

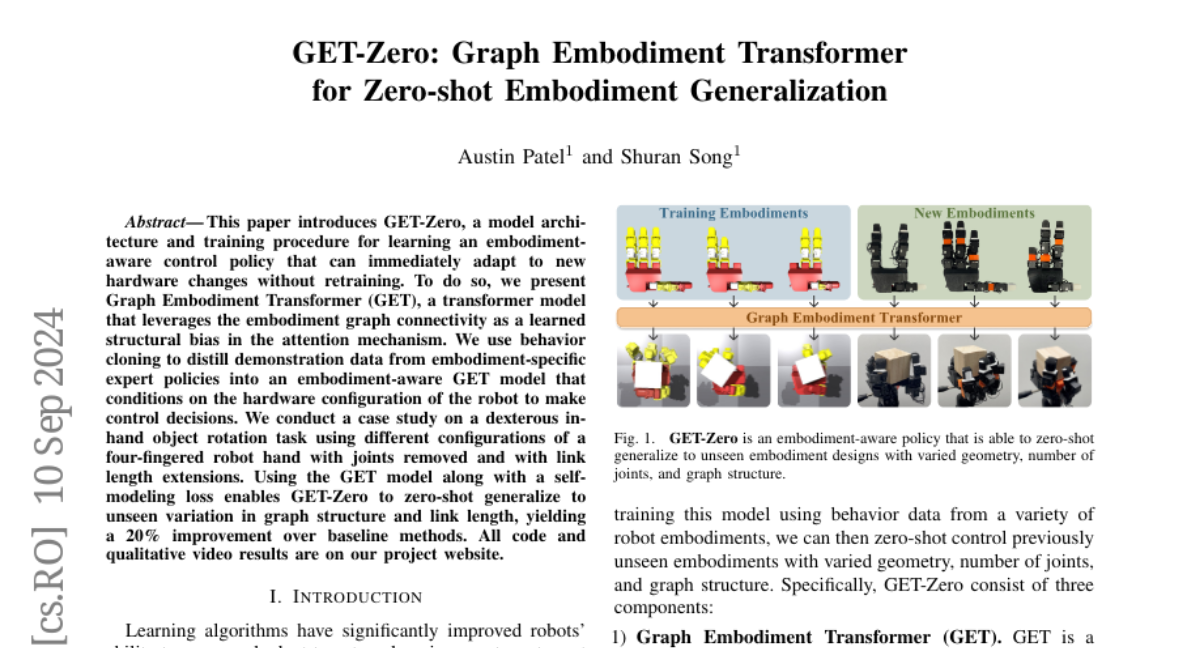

This paper introduces GET-Zero, a model architecture and training procedure for learning an embodiment-aware control policy that can immediately adapt to new hardware changes without retraining. To do so, we present Graph Embodiment Transformer (GET), a transformer model that leverages the embodiment graph connectivity as a learned structural bias in the attention mechanism. We use behavior cloning to distill demonstration data from embodiment-specific expert policies into an embodiment-aware GET model that conditions on the hardware configuration of the robot to make control decisions. We conduct a case study on a dexterous in-hand object rotation task using different configurations of a four-fingered robot hand with joints removed and with link length extensions. Using the GET model along with a self-modeling loss enables GET-Zero to zero-shot generalize to unseen variation in graph structure and link length, yielding a 20% improvement over baseline methods. All code and qualitative video results are on https://get-zero-paper.github.io
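The phrase "embodiment graph connectivity as a learned structural bias in the attention mechanism" can be illustrated with a small sketch. The abstract does not specify how the bias is encoded, so the version below is an assumption: it treats each joint as a token, computes hop distances between joints on the embodiment graph, and adds one learnable scalar per hop distance to the attention scores. The function names, the `bias_table` parameter, and the four-joint chain are all hypothetical.

```python
import numpy as np


def shortest_path_distances(num_nodes, edges):
    """All-pairs hop distances on an undirected graph (Floyd-Warshall).

    Unreachable pairs are assigned the value num_nodes, which is larger
    than any real path length and so acts as a separate bucket.
    """
    dist = np.full((num_nodes, num_nodes), num_nodes, dtype=int)
    np.fill_diagonal(dist, 0)
    for a, b in edges:
        dist[a, b] = dist[b, a] = 1
    for k in range(num_nodes):
        dist = np.minimum(dist, dist[:, k:k + 1] + dist[k:k + 1, :])
    return dist


def graph_biased_attention(x, dist, w_q, w_k, w_v, bias_table):
    """Single-head self-attention with a per-hop-distance additive bias.

    bias_table[d] is the (learnable) scalar added to the score between
    two tokens that are d hops apart; it needs num_nodes + 1 entries.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores + bias_table[dist]            # structural bias
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


# Hypothetical embodiment: four joints connected in a chain.
dist = shortest_path_distances(4, [(0, 1), (1, 2), (2, 3)])
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                       # one token per joint
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
bias_table = np.zeros(5)                          # would be trained in practice
out, attn = graph_biased_attention(x, dist, w_q, w_k, w_v, bias_table)
print(out.shape, attn.sum(axis=-1))
```

With an all-zero `bias_table` this reduces to ordinary self-attention. Training the table lets the model decide how strongly joints at each graph distance should attend to one another, and a new hand variant only changes `dist`, not the model weights.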