GoT: Unleashing Reasoning Capability of Multimodal Large Language Model for Visual Generation and Editing

Rongyao Fang, Chengqi Duan, Kun Wang, Linjiang Huang, Hao Li, Shilin Yan, Hao Tian, Xingyu Zeng, Rui Zhao, Jifeng Dai, Xihui Liu, Hongsheng Li

2025-03-14

Summary

This paper introduces GoT (Generation Chain-of-Thought), a new AI framework that creates or edits images by first writing out its 'thought process' in words. By planning object placements and relationships step by step, it helps make sure the final picture matches what the user asked for.

What's the problem?

Current image-making AI tools often struggle to follow complex instructions precisely, like placing objects in specific spots or understanding how different parts of a scene should relate to each other.

What's the solution?

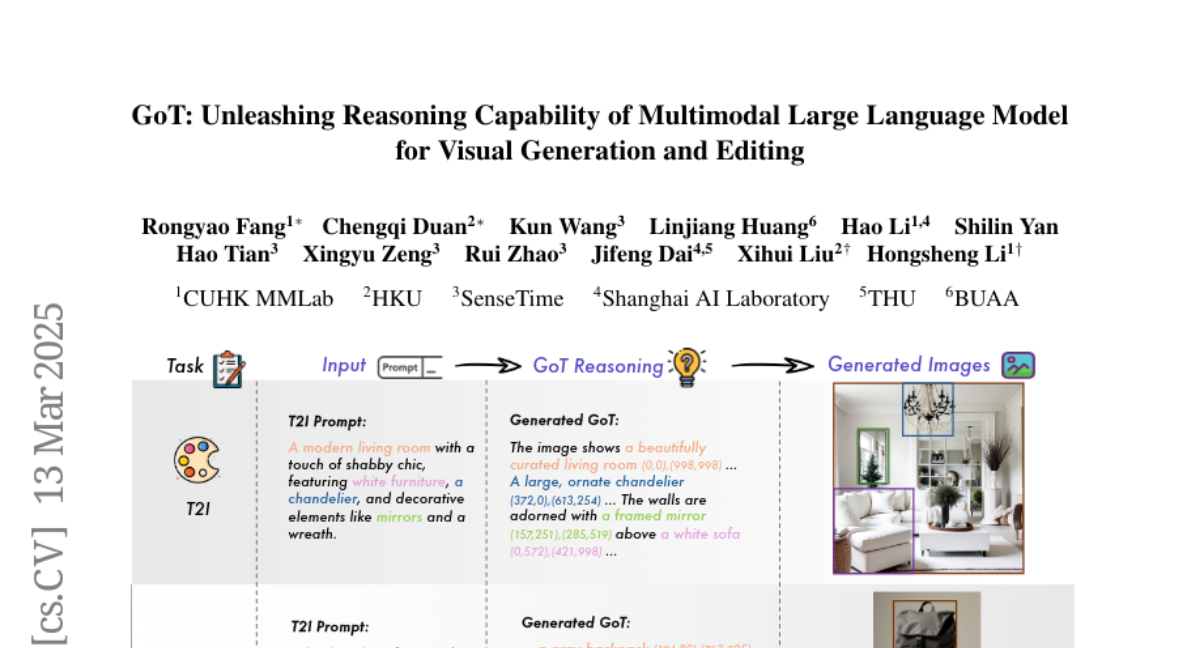

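GoT adds a reasoning step in which a multimodal language model (Qwen2.5-VL) writes a detailed plan of what each object is, where it goes, and how the objects relate to each other, all before anything is drawn. A diffusion model then turns this plan into an image, steered by a Semantic-Spatial Guidance Module that keeps the result faithful to both the meaning and the layout of the plan.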
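To make the idea of a written 'plan' concrete, here is a minimal sketch of pulling object descriptions and their positions out of a reasoning chain. The bracket-and-coordinates syntax, the 0 to 1000 coordinate grid, and the names `PlannedObject` and `parse_reasoning_chain` are all assumptions made for illustration; they are not the paper's actual chain format or code.

```python
import re
from dataclasses import dataclass

# ASSUMED chain syntax, for illustration only: each planned object is written as
# "[phrase](x1,y1),(x2,y2)" with box corners on a 0-1000 grid. GoT's real format may differ.
OBJECT_PATTERN = re.compile(r"\[([^\]]+)\]\((\d+),(\d+)\),\((\d+),(\d+)\)")


@dataclass
class PlannedObject:
    phrase: str                              # semantic part: what the object is
    box: tuple[float, float, float, float]   # spatial part: normalized (x1, y1, x2, y2)


def parse_reasoning_chain(chain: str, grid: int = 1000) -> list[PlannedObject]:
    """Extract the semantic-spatial plan (phrase + box) from a reasoning chain."""
    plan = []
    for m in OBJECT_PATTERN.finditer(chain):
        # Convert grid coordinates to fractions of the image width/height.
        x1, y1, x2, y2 = (int(v) / grid for v in m.groups()[1:])
        plan.append(PlannedObject(m.group(1).strip(), (x1, y1, x2, y2)))
    return plan


chain = (
    "The scene shows [a red apple](100,500),(300,700) on a table, "
    "placed to the left of [a blue mug](550,400),(800,750)."
)
for obj in parse_reasoning_chain(chain):
    print(obj.phrase, obj.box)
```

Keeping the phrase and the box together in one record mirrors the summary's point that the plan carries both what each object is and where it goes.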

Why does it matter?

This helps artists, designers, and content creators make images that better match their vision, reducing guesswork and making AI image tools more reliable for professional use.

Abstract

Current image generation and editing methods primarily process textual prompts as direct inputs without reasoning about visual composition and explicit operations. We present Generation Chain-of-Thought (GoT), a novel paradigm that enables generation and editing through an explicit language reasoning process before outputting images. This approach transforms conventional text-to-image generation and editing into a reasoning-guided framework that analyzes semantic relationships and spatial arrangements. We define the formulation of GoT and construct large-scale GoT datasets containing over 9M samples with detailed reasoning chains capturing semantic-spatial relationships. To leverage the advantages of GoT, we implement a unified framework that integrates Qwen2.5-VL for reasoning chain generation with an end-to-end diffusion model enhanced by our novel Semantic-Spatial Guidance Module. Experiments show our GoT framework achieves excellent performance on both generation and editing tasks, with significant improvements over baselines. Additionally, our approach enables interactive visual generation, allowing users to explicitly modify reasoning steps for precise image adjustments. GoT pioneers a new direction for reasoning-driven visual generation and editing, producing images that better align with human intent. To facilitate future research, we make our datasets, code, and pretrained models publicly available at https://github.com/rongyaofang/GoT.
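The abstract notes that users can modify reasoning steps to adjust the image. The sketch below shows what such an edit could mean on the spatial side only. It assumes the plan is a list of phrase/box pairs and that a guidance module consumes one binary mask per object at a 64×64 resolution. Both are assumptions, since the internals of the Semantic-Spatial Guidance Module are not described here. `box_to_mask`, `layout_masks`, and `move_object` are hypothetical helpers, not part of the GoT codebase.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PlannedObject:
    phrase: str                              # semantic description from the reasoning chain
    box: tuple[float, float, float, float]   # normalized (x1, y1, x2, y2) in [0, 1]


def box_to_mask(box, size=64):
    """Rasterize a normalized box into a size x size binary mask (list of rows)."""
    x1, y1, x2, y2 = (round(v * size) for v in box)
    return [[1 if x1 <= x < x2 and y1 <= y < y2 else 0 for x in range(size)]
            for y in range(size)]


def layout_masks(plan, size=64):
    """One mask per planned object: a hypothetical stand-in for a spatial condition."""
    return {obj.phrase: box_to_mask(obj.box, size) for obj in plan}


def move_object(plan, phrase, dx, dy):
    """Simulate a user editing one reasoning step by shifting that object's box."""
    edited = []
    for obj in plan:
        if obj.phrase == phrase:
            x1, y1, x2, y2 = obj.box
            # Clamp the shift so the box stays inside the [0, 1] canvas.
            sx = min(max(dx, -x1), 1 - x2)
            sy = min(max(dy, -y1), 1 - y2)
            obj = replace(obj, box=(x1 + sx, y1 + sy, x2 + sx, y2 + sy))
        edited.append(obj)
    return edited


plan = [
    PlannedObject("a red apple", (0.1, 0.5, 0.3, 0.7)),
    PlannedObject("a blue mug", (0.55, 0.4, 0.8, 0.75)),
]
# The user edits the apple's step in the chain to move it further right.
edited = move_object(plan, "a red apple", dx=0.2, dy=0.0)
before = layout_masks(plan)["a red apple"]
after = layout_masks(edited)["a red apple"]
print(sum(map(sum, before)), sum(map(sum, after)))  # same area, new position
```

Because the edit happens in the plan rather than in pixels, only the changed object's spatial condition moves, while the mug's entry is left untouched. In GoT itself, the mapping from plan to image is learned inside the diffusion model. This sketch only illustrates how a single-step edit propagates to a layout.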