GRIT: Teaching MLLMs to Think with Images

Yue Fan, Xuehai He, Diji Yang, Kaizhi Zheng, Ching-Chen Kuo, Yuting Zheng, Sravana Jyothi Narayanaraju, Xinze Guan, Xin Eric Wang

2025-05-23

Summary

This paper talks about GRIT, a new technique that helps AI models, which work with both language and images, get better at thinking through visual problems by combining words and specific locations in images.

What's the problem?

The problem is that most AI models that handle both text and images aren't very good at explaining their reasoning when it comes to visual tasks. They might give the right answer, but they can't show how they figured it out, especially when they need to point to exact spots in an image.

What's the solution?

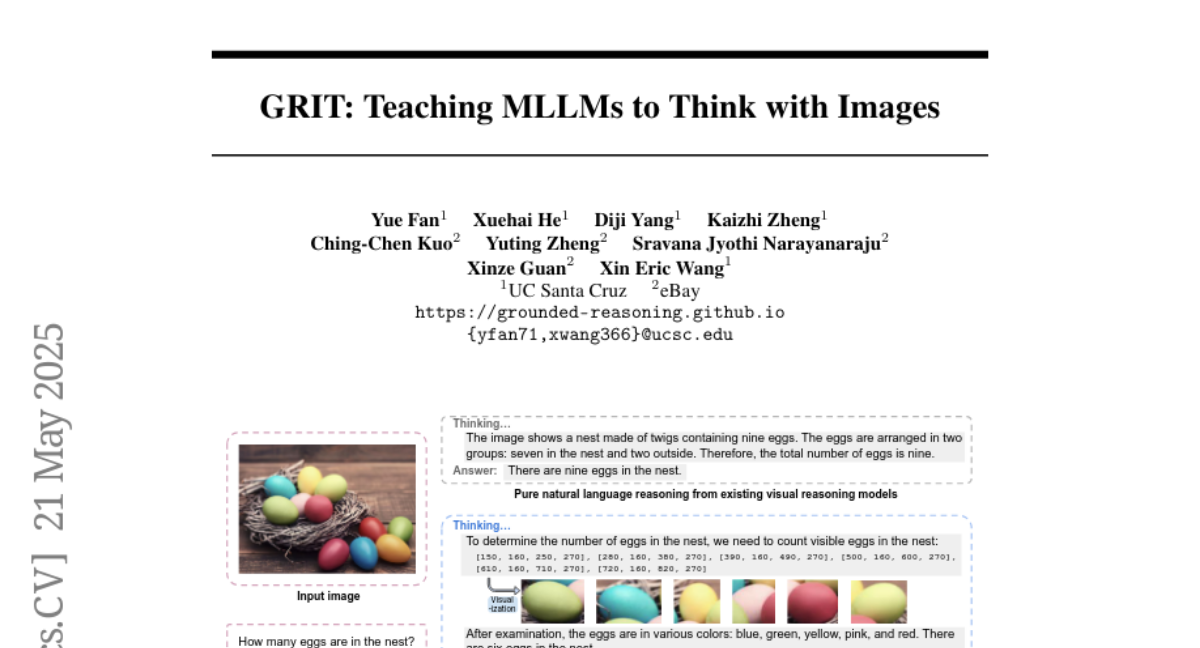

The researchers created GRIT, a method that teaches these models to build step-by-step explanations that mix regular sentences with precise coordinates in the image, like drawing boxes around important parts. They used reinforcement learning, which is a way for the model to learn from feedback, to make this process efficient and not require tons of extra data.

Why it matters?

This matters because it makes AI models much better at solving and explaining complex visual problems. If an AI can show both what it is thinking and exactly where it's looking in an image, it's more trustworthy and useful for things like education, medicine, or any job where understanding and explaining images is important.

Abstract

A novel method called GRIT enhances visual reasoning in MLLMs by generating reasoning chains that integrate both natural language and bounding box coordinates, guided by a reinforcement learning approach for high data efficiency.