Grounded Reinforcement Learning for Visual Reasoning

Gabriel Sarch, Snigdha Saha, Naitik Khandelwal, Ayush Jain, Michael J. Tarr, Aviral Kumar, Katerina Fragkiadaki

2025-05-30

Summary

This paper talks about ViGoRL, an AI model that gets better at solving problems involving both pictures and words by learning to pay attention to the right parts of an image and using that visual information to reason more accurately.

What's the problem?

The problem is that many AI models struggle with visual reasoning tasks because they don't always know which parts of an image are important, so their answers can be off or not well supported by what they actually see.

What's the solution?

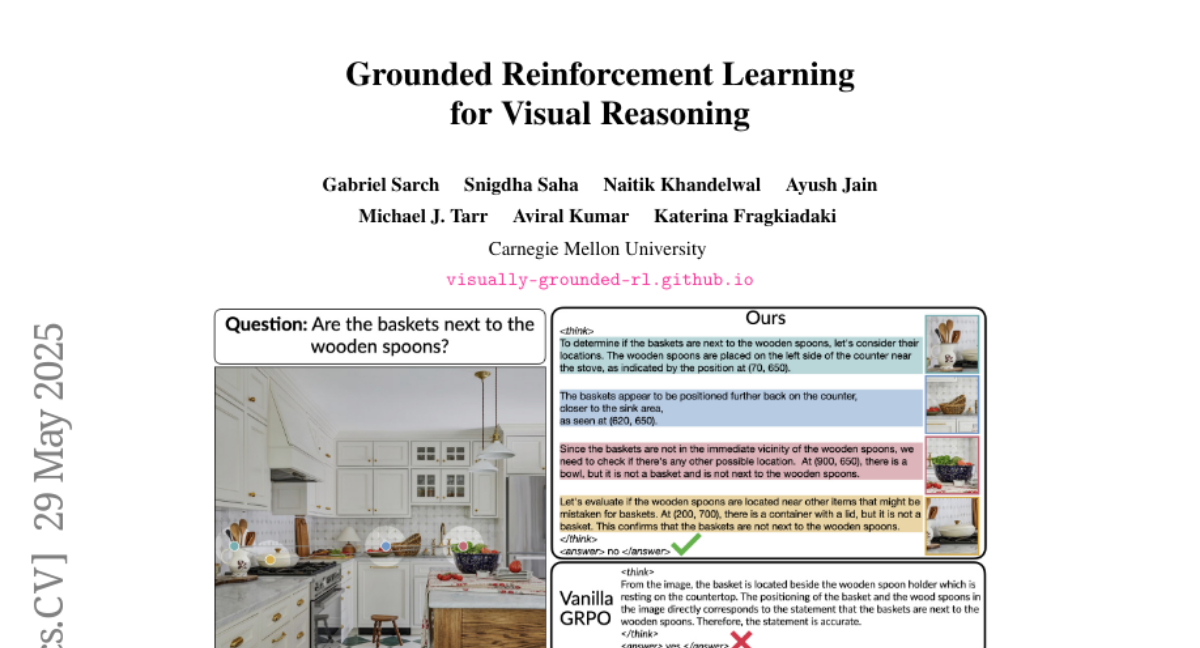

The researchers improved the model by using a special kind of training called visually grounded reinforcement learning, which teaches the AI to focus its attention on the most relevant parts of the image and base its reasoning on real visual clues and spatial evidence.

Why it matters?

This is important because it means AI can now understand and explain visual information more like a human would, making it more reliable for things like answering questions about pictures, helping with homework, or supporting scientific research.

Abstract

ViGoRL, a vision-language model enhanced with visually grounded reinforcement learning, achieves superior performance across various visual reasoning tasks by dynamically focusing visual attention and grounding reasoning in spatial evidence.