HARE: HumAn pRiors, a key to small language model Efficiency

Lingyun Zhang, Bin jin, Gaojian Ge, Lunhui Liu, Xuewen Shen, Mingyong Wu, Houqian Zhang, Yongneng Jiang, Shiqi Chen, Shi Pu

2024-06-21

Summary

This paper discusses a new approach called HARE, which focuses on using human knowledge to improve the efficiency of small language models (SLMs) in deep learning.

What's the problem?

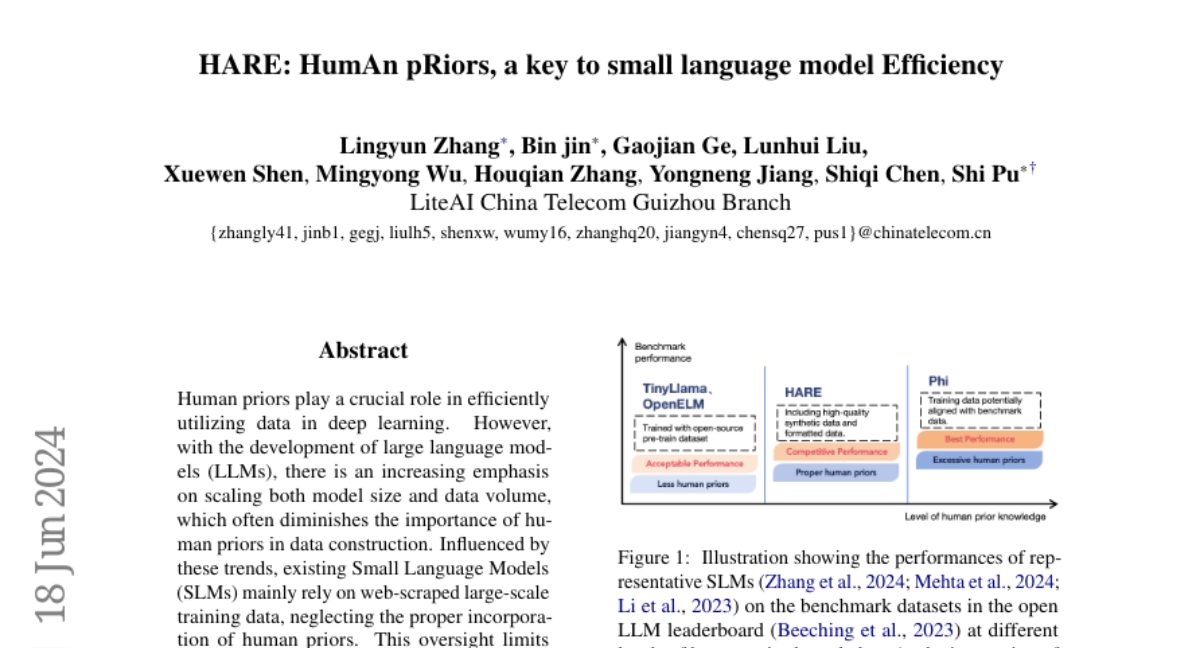

As large language models (LLMs) have become more popular, the focus has shifted to making them bigger and using more data. However, this often leads to ignoring the valuable insights that human knowledge can provide when creating training data. Many existing small language models rely heavily on large amounts of data scraped from the web, which can be inefficient and may not perform well in situations where resources are limited.

What's the solution?

The researchers propose a new principle that emphasizes using human priors—essentially, knowledge and insights from humans—to build better training datasets. They trained a small language model called HARE-1.1B using a focused dataset that balances diversity and quality while avoiding issues like data leakage. Their experiments showed that HARE-1.1B performs well compared to other leading small language models, demonstrating the effectiveness of incorporating human knowledge into training.

Why it matters?

This research is important because it highlights a way to make small language models more efficient and effective, especially in environments where resources are limited. By leveraging human insights, developers can create models that require less data while still achieving high performance, which can lead to advancements in various applications like chatbots, translation services, and more.

Abstract

Human priors play a crucial role in efficiently utilizing data in deep learning. However, with the development of large language models (LLMs), there is an increasing emphasis on scaling both model size and data volume, which often diminishes the importance of human priors in data construction. Influenced by these trends, existing Small Language Models (SLMs) mainly rely on web-scraped large-scale training data, neglecting the proper incorporation of human priors. This oversight limits the training efficiency of language models in resource-constrained settings. In this paper, we propose a principle to leverage human priors for data construction. This principle emphasizes achieving high-performance SLMs by training on a concise dataset that accommodates both semantic diversity and data quality consistency, while avoiding benchmark data leakage. Following this principle, we train an SLM named HARE-1.1B. Extensive experiments on large-scale benchmark datasets demonstrate that HARE-1.1B performs favorably against state-of-the-art SLMs, validating the effectiveness of the proposed principle. Additionally, this provides new insights into efficient language model training in resource-constrained environments from the view of human priors.