HART: Efficient Visual Generation with Hybrid Autoregressive Transformer

Haotian Tang, Yecheng Wu, Shang Yang, Enze Xie, Junsong Chen, Junyu Chen, Zhuoyang Zhang, Han Cai, Yao Lu, Song Han

2024-10-21

Summary

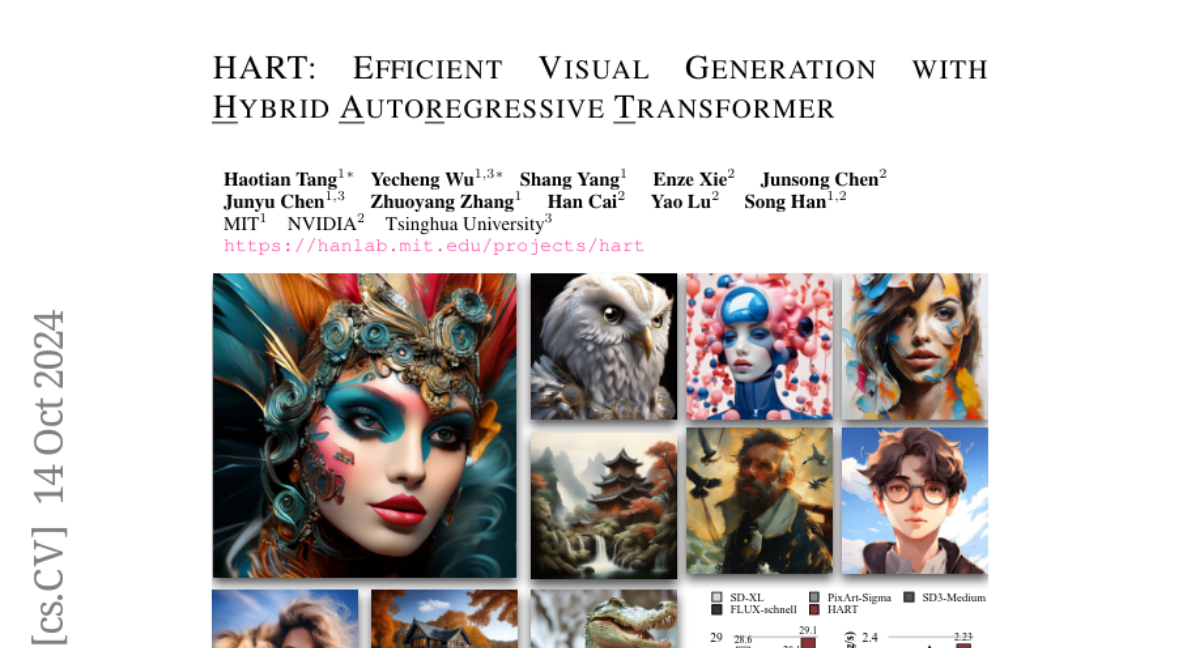

This paper introduces HART (Hybrid Autoregressive Transformer), an autoregressive model that directly generates high-quality 1024x1024 images and does so more efficiently than comparable diffusion models.

What's the problem?

Existing autoregressive (AR) models for image generation struggle to produce high-quality images because they rely on discrete tokenizers that reconstruct images poorly. In addition, training these models to generate 1024px images is prohibitively expensive in compute and time.

What's the solution?

To overcome these challenges, the authors developed HART, which uses a hybrid tokenizer that splits the autoencoder's continuous latents into two kinds of tokens: discrete tokens that capture the overall image, and continuous tokens that capture the residual detail the discrete tokens cannot represent. The discrete tokens are modeled by a scalable-resolution discrete AR model, while the continuous tokens are learned by a lightweight residual diffusion module with only 37M parameters, which keeps computational cost down. Compared with the discrete-only VAR tokenizer, the hybrid approach improves reconstruction FID on MJHQ-30K from 2.11 to 0.30 and generation FID from 7.85 to 5.38.
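The decomposition idea can be illustrated with a toy example. The sketch below is not the paper's tokenizer: it swaps in a plain single-level nearest-neighbor codebook lookup, and it stores the residual exactly instead of learning it with a diffusion module. The names `nearest_code`, `hybrid_decompose`, and `hybrid_reconstruct`, the codebook size, and the latent dimension are all assumptions made for illustration.

```python
import random

def nearest_code(vec, codebook):
    """Return the index of the codebook entry closest to vec (squared L2)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(vec, codebook[i]))

def hybrid_decompose(latents, codebook):
    """Split continuous latents into discrete token ids and continuous residuals."""
    ids, residuals = [], []
    for vec in latents:
        i = nearest_code(vec, codebook)
        ids.append(i)
        # Residual: whatever the chosen discrete code fails to represent.
        residuals.append([x - c for x, c in zip(vec, codebook[i])])
    return ids, residuals

def hybrid_reconstruct(ids, residuals, codebook):
    """Add each residual back onto its discrete code."""
    return [[c + r for c, r in zip(codebook[i], res)]
            for i, res in zip(ids, residuals)]

def mean_sq_error(a, b):
    """Mean squared error between two lists of equal-length vectors."""
    total = sum((x - y) ** 2 for u, v in zip(a, b) for x, y in zip(u, v))
    return total / (len(a) * len(a[0]))

# Hypothetical toy sizes: 16 codes, 8 latent vectors, 4 dimensions each.
rng = random.Random(0)
codebook = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(16)]
latents = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]

ids, residuals = hybrid_decompose(latents, codebook)
discrete_only = [codebook[i] for i in ids]
hybrid = hybrid_reconstruct(ids, residuals, codebook)

print("discrete-only error:", mean_sq_error(latents, discrete_only))
print("hybrid error:", mean_sq_error(latents, hybrid))
```

In this toy setting the hybrid reconstruction is exact up to floating-point rounding because the residual is kept verbatim. In HART the residual is instead produced by the residual diffusion module, so the sketch only shows why adding a continuous component can close the gap left by quantization.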

Why does it matter?

This research matters because it makes high-resolution image generation faster and cheaper without giving up quality: the authors report 4.5-7.7x higher throughput and 6.9-13.4x lower MACs than state-of-the-art diffusion models. That combination of efficiency and quality could be useful in applications such as video game design, graphic art, and virtual reality, where high-quality visuals are essential.

Abstract

We introduce Hybrid Autoregressive Transformer (HART), an autoregressive (AR) visual generation model capable of directly generating 1024x1024 images, rivaling diffusion models in image generation quality. Existing AR models face limitations due to the poor image reconstruction quality of their discrete tokenizers and the prohibitive training costs associated with generating 1024px images. To address these challenges, we present the hybrid tokenizer, which decomposes the continuous latents from the autoencoder into two components: discrete tokens representing the big picture and continuous tokens representing the residual components that cannot be represented by the discrete tokens. The discrete component is modeled by a scalable-resolution discrete AR model, while the continuous component is learned with a lightweight residual diffusion module with only 37M parameters. Compared with the discrete-only VAR tokenizer, our hybrid approach improves reconstruction FID from 2.11 to 0.30 on MJHQ-30K, leading to a 31% generation FID improvement from 7.85 to 5.38. HART also outperforms state-of-the-art diffusion models in both FID and CLIP score, with 4.5-7.7x higher throughput and 6.9-13.4x lower MACs. Our code is open sourced at https://github.com/mit-han-lab/hart.
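As a quick check on the abstract's arithmetic, the short sketch below computes relative FID reductions from the reported before/after values (lower FID is better). The helper name `relative_improvement` is an assumption for illustration; the relative reduction in reconstruction FID is derived here from the reported numbers and is not a figure the abstract states.

```python
def relative_improvement(before, after):
    """Fractional reduction of a lower-is-better metric such as FID."""
    return (before - after) / before

# Values reported in the abstract (MJHQ-30K).
gen = relative_improvement(7.85, 5.38)  # generation FID
rec = relative_improvement(2.11, 0.30)  # reconstruction FID

print(f"generation FID reduction: {gen:.1%}")      # 31.5%, consistent with the stated 31%
print(f"reconstruction FID reduction: {rec:.1%}")  # 85.8%, derived, not stated in the abstract
```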